European researchers are assembling next-generation machines that are more aware of their surroundings and communicate better with humans

While building robots with human-like intelligence is an impossible task with today's technology, it is possible to build simpler robots that are sophisticated enough to be responsible for their actions.

Today machines and robots are used mainly in the industrial sector, but they will soon enter the service sector as well. Once the technology matures, the possible uses for machines of this type will be nearly endless. For example, it will be possible to buy a personal robot for the home that serves as a cleaner, a caregiver, a babysitter, and a pet - all at the same time.

Until now, robots of this type have not been developed because they were simply too "stupid" to be used for complex tasks, but this seems set to change in the near future.

Cognitive artificial intelligence systems

Research in the field of cognitive artificial intelligence systems has grown enormously in recent years and now spans a large number of subfields. Researchers around the world have concentrated on different areas: machine vision, spatial understanding, interaction between people and machines, and many others.

Each group has advanced in its own area, but the European-funded CoSy project (Cognitive Systems for Cognitive Assistants), in which many universities from across the continent take part, showed that bringing together researchers from different fields can accelerate progress.

CoSy project

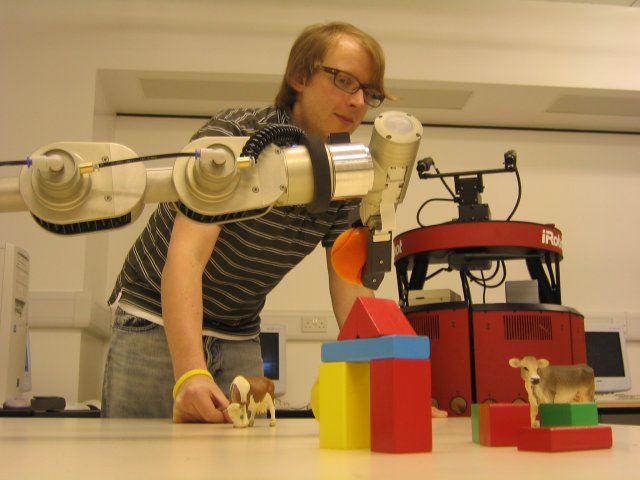

The CoSy project brought together a large number of experts from diverse fields. The interdisciplinary collaboration produced an architecture that combines multiple cognitive functions, making robots more aware of their environment and better at communicating with humans. The new architecture is much more successful than any of its parts on its own, since it brings together a large number of advanced cognitive artificial intelligence technologies. Instead of studying how each field works separately, the researchers put all the developments together into one system.

The researchers released the toolkit for their architecture under an open source license, since they want to encourage further development in the field, and the first initiatives building on their work can already be found.

Overcoming the integration challenge

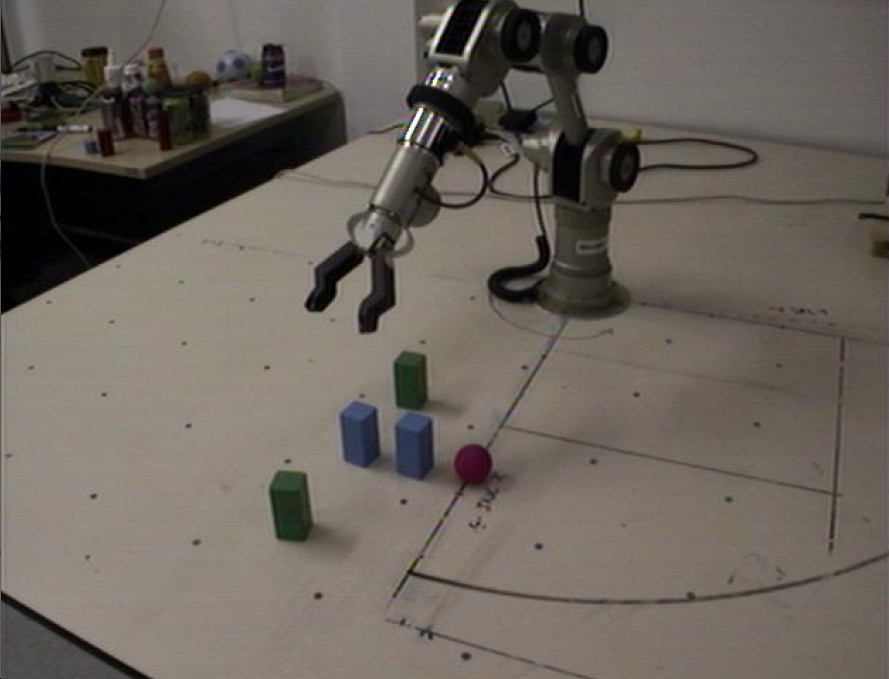

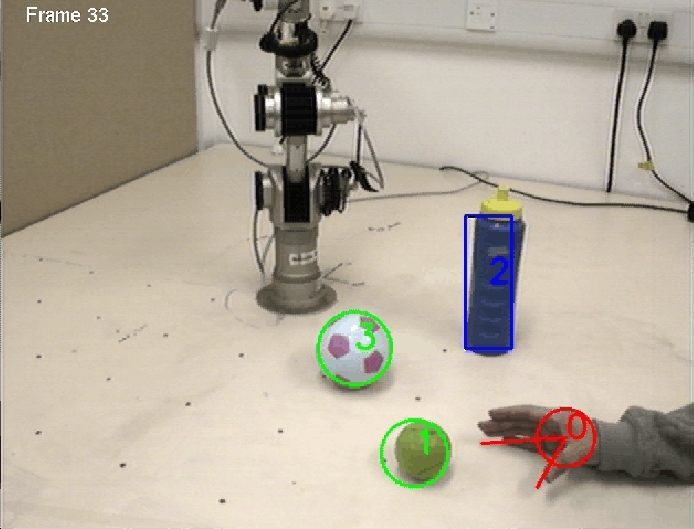

The biggest challenge in the field of robotics is the integration of different systems. The robot needs to be taught to understand its environment from visual input and to communicate with humans through voice commands, while making logical connections between what is said and the environment in which it is said. Each of these operations is extremely complicated on its own, and integrating them in one system is even harder. Because of this complexity, most robots of the current generation are reactive robots: they simply react to the environment instead of acting independently in it.

The CoSy team has developed an environmentally aware robot called Explorer, whose understanding of its surroundings is much closer to a human's. Explorer can even talk to humans about the things around it.

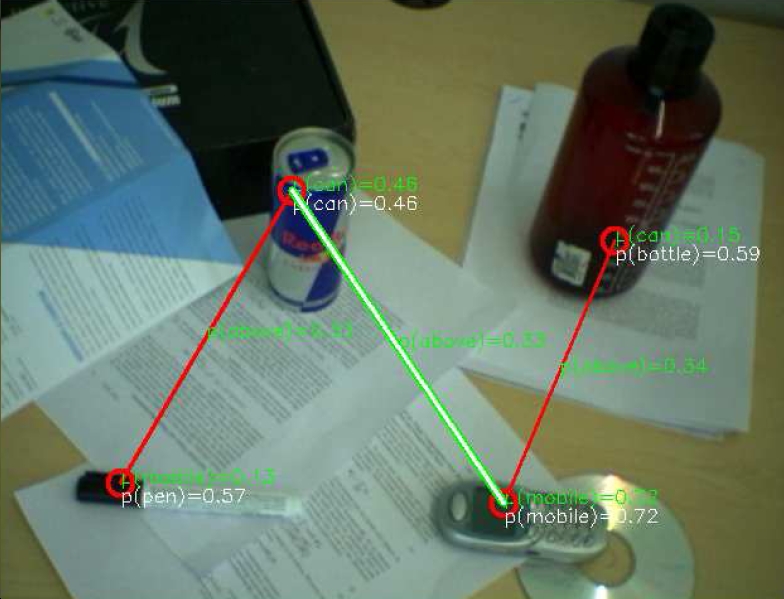

Instead of using only geometric information to create a map of the environment, Explorer also incorporates high-quality topographic information. By interacting with humans it can learn to recognize objects, spaces and their uses. For example, if it sees a coffee machine it can conclude that it is in the kitchen, and if it sees a sofa it can conclude that it is in the living room. Because it has this conceptual understanding, the robot treats rooms much as a human would.
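The object-to-room reasoning described above can be illustrated with a minimal sketch. This is not the CoSy architecture or Explorer's actual code: the names `OBJECT_TO_ROOM`, `infer_room` and `learn` are hypothetical, the simple vote counting is an assumption made for illustration, and only the two associations named in the article (coffee machine and kitchen, sofa and living room) are taken from the source.

```python
from collections import Counter

# Hypothetical knowledge base. Only these two associations come from the
# article; a real system would hold far richer conceptual knowledge.
OBJECT_TO_ROOM = {
    "coffee machine": "kitchen",
    "sofa": "living room",
}


def learn(obj, room):
    """Record an association taught by a human, e.g. during a dialogue."""
    OBJECT_TO_ROOM[obj] = room


def infer_room(observed_objects):
    """Return the room type best supported by the observed objects.

    Each recognized object casts one vote for its associated room.
    Returns None when no observed object is known.
    """
    votes = Counter(
        OBJECT_TO_ROOM[obj] for obj in observed_objects if obj in OBJECT_TO_ROOM
    )
    if not votes:
        return None
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(infer_room(["coffee machine"]))   # kitchen
    print(infer_room(["lamp"]))             # None (nothing known yet)
    learn("oven", "kitchen")                # a human teaches a new object
    print(infer_room(["oven", "coffee machine", "sofa"]))  # kitchen
```

The sketch only shows the idea of a conceptual layer on top of a geometric map: objects are evidence for room categories, and the knowledge can be extended through interaction with people.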

The integration of the various systems is still the biggest obstacle facing researchers on the way to building more sophisticated robots that better imitate human vision and awareness.

According to the researchers, we humans do not appreciate how complex our bodies are, and we still do not know exactly how the brain decodes vision and interprets the input. When we see a coffee machine, a bottle or any other object, we recognize it and understand what it does, how it works, and when and in what context we would use it - all at the same time. Giving robots this kind of ability is still far beyond our reach, but the current research brings us several steps closer to the goal.

Robotic servants

Fortunately, human intelligence and awareness are not required to create robots that serve as assistants. Robots of earlier generations are already used by companies and individuals to perform simple "go get" or "go do" tasks. Hospitals use such robots to distribute medicines to patients, offices use them to carry documents between rooms, private individuals use them in the form of automatic vacuum cleaners that do the job without an operator, and even children are hooked on them as more and more toys with artificial intelligence are sold.

The new architecture will make machines of this type more usable, since their margin of error shrinks the better they understand their environment. In the future, robots will be developed for tasks that until now have been done by hand, and for areas where we never thought robots could replace us.

2 comments

This is the right approach - different specializations in different fields and subfields and combining them in a smart or recursive way like in computer programming. It is desirable that each operation has its own processor or at least that they all be performed simultaneously.

This is true at a high level - recognition of speech, image, movement, thinking about an answer, etc.

And this is true at sub-levels, for example when you see a sofa, the first action is to divide the sofa into parts - backrest, cushions, legs, folds, or division according to contour lines, and send each part for decoding. And that first action will connect all the answers that will be formed and the understanding will be built (finally, an understanding will be built that this is an old couch with a ragged handle and a blanket on it...). The more fractions of a second pass, the better the understanding. And at any moment it will be possible to use this knowledge at a higher level of the robot's awareness.

This is the case at all levels and this is the understanding similar to human understanding. (at least that's what I think now)

(By the way, at a certain stage there will also be components/processors that link information from all kinds of levels to create collaborations and intuition. Based on statistics from the analyses at each level, it will be possible to anticipate what happens next.)

DK

One day they will attack us