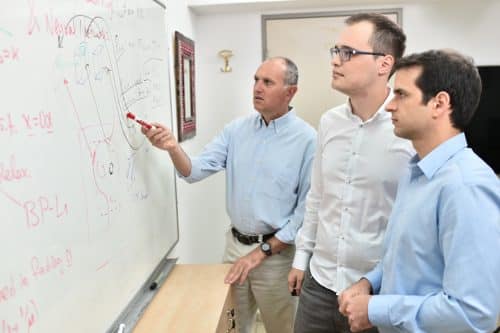

A theoretical breakthrough in deep learning from the group of Prof. Michael Elad of the Faculty of Computer Science

A theoretical breakthrough presented in new work by researchers from the Faculty of Computer Science may bring about a significant change in the field of deep learning. A series of articles published by the team presents an extensive theory that explains key aspects of how multilayer neural networks, the foundation of deep learning, operate. The researchers are Prof. Michael Elad and the doctoral students Vardan Papyan, Yaniv Romano and Jeremias Sulam.

The field of deep learning, which flourished in the 1980s but stalled because of challenging theoretical and practical obstacles, revived in the past decade and now occupies major companies such as Google, Facebook, Microsoft, LinkedIn, IBM and Mobileye. However, according to Prof. Elad, "While experimental research raced ahead and produced surprising achievements, theoretical analysis lagged behind and has not yet caught up with the rapid development of the field. Now I am happy to announce that we have obtained very significant results on this front.

"We can say that until now we have been working with a closed black box: neural networks. This box worked very well, but no one could pinpoint the reasons for its success or the conditions under which it succeeds. In our work, we were able to open the box, analyze it, explain theoretically the sources of its success and suggest ways to improve it."

Layered neural networks

Layered neural networks, which are part of the wider field called deep learning, are an engineering approach that gives computers a learning capability that brings them closer to human thinking. The consulting firm Deloitte reports that this field, which is growing at a dizzying rate of 25% per year, is expected to generate 43 billion dollars in 2024.

Layered neural networks are systems that perform fast, efficient and accurate classification of data. These artificial systems somewhat resemble the human brain and, like it, consist of layers of neurons connected by synapses. Their hierarchical structure allows them, among other things, to analyze complex information and to identify patterns in it. Their great strength is that they learn by themselves from examples: if we feed them millions of labeled images of humans, cats, dogs and trees, they will be able to recognize these categories (human, cat, dog, tree) in new images, with levels of accuracy unprecedented among earlier machine-learning approaches.

As mentioned, several important breakthroughs have occurred in this field in the past decade, and systems based on layered neural networks are now able to read handwritten characters with an accuracy of 99% or more and to identify emotions such as sadness, humor and anger in a given text. In November 2012, Microsoft's chief research officer, Rick Rashid, demonstrated a simultaneous translation system the company had developed based on deep learning. In the lecture, which took place in China, Rashid spoke in English, and his words passed through an automatic pipeline of transcription, translation and speech synthesis, so that the Chinese students heard the lecture in real time, in their own language, with almost no errors.

Google did not sit idly by, and the system it developed, AlphaGo, managed to beat a world champion at the game of Go. Even the relatively young Facebook has already taken significant steps in the field, and the company's CEO, Mark Zuckerberg, has stated that his goal is to build computer systems that are better than humans at vision, hearing, language and thinking.

According to Prof. Elad, "Today, no one doubts that deep learning is a dramatic revolution in everything related to processing and classifying huge amounts of information with high precision. But surprisingly, this enormous progress was not accompanied by a basic theoretical understanding that explains the source of these networks' effectiveness. The theory, as in many other cases in the history of technology, lagged behind practice."

This is where Prof. Elad's group comes into the picture, presenting a new theoretical explanation for deep networks. "In science we often use mathematical models to help us understand reality. If you think about it, all of physics is built on this philosophy of describing the world through a collection of simple rules and equations. This is what we did too: we took a well-known real-world process (a layered neural network that digests information) and formulated a mathematical model for it. The model describes mathematically how the processed information is composed: layers of sparse combinations of building blocks (atoms). We have shown that our model leads naturally to conventional neural networks as a means of breaking information down into its elementary components. This allowed us to analyze the network and obtain accurate predictions of its performance. Unlike in physics, we can not only analyze and predict reality but also improve the systems we study, since they are under our control."
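The idea in Prof. Elad's description can be illustrated with a minimal sketch, assuming a multilayer sparse model in the spirit of the one described: a signal x is a sparse combination of atoms (the columns of a dictionary D1), x = D1 g1, and the code g1 is itself a sparse combination of the atoms of a second dictionary, g1 = D2 g2. Estimating the codes layer by layer with non-negative soft thresholding (a simple "layered thresholding" pursuit) is computationally the same as a forward pass through a neural network with weights D^T, biases -b and ReLU activations. The tiny dense dictionaries, the thresholds and all function names below are hypothetical and chosen only for illustration; the group's actual analysis is far more detailed, and a practical system would use convolutional dictionaries.

```python
# Minimal sketch (assumption): a two-layer sparse model x = D1 g1, g1 = D2 g2,
# with the codes estimated by "layered thresholding". All names and values are
# hypothetical illustrations, not the authors' code.
from typing import List

Matrix = List[List[float]]  # row-major dense matrix; columns are the atoms
Vector = List[float]


def transpose_times(D: Matrix, v: Vector) -> Vector:
    """Compute D^T v, i.e. the correlation of v with every atom (column) of D."""
    rows, cols = len(D), len(D[0])
    return [sum(D[i][j] * v[i] for i in range(rows)) for j in range(cols)]


def nonneg_soft_threshold(z: Vector, b: Vector) -> Vector:
    """Non-negative soft thresholding max(z - b, 0), which is exactly ReLU(z - b)."""
    return [max(zi - bi, 0.0) for zi, bi in zip(z, b)]


def layered_thresholding(x: Vector, dictionaries: List[Matrix],
                         thresholds: List[Vector]) -> List[Vector]:
    """Estimate the sparse code of each layer in turn.

    Each step multiplies by D^T and applies ReLU(. - b): the same computation
    as one layer of a neural network with weights D^T and bias -b.
    """
    codes = []
    signal = x
    for D, b in zip(dictionaries, thresholds):
        signal = nonneg_soft_threshold(transpose_times(D, signal), b)
        codes.append(signal)
    return codes


# Toy example: build x from a sparse deepest code g2 = [2, 0].
D1 = [[1.0, 0.0],
      [0.0, 1.0],
      [0.0, 0.0]]           # 3x2 dictionary for the first layer
D2 = [[1.0, 0.0],
      [1.0, 1.0]]           # 2x2 dictionary for the second layer
# g1 = D2 g2 = [2, 2], and x = D1 g1 = [2, 2, 0]
x = [2.0, 2.0, 0.0]
b1 = [0.5, 0.5]             # hypothetical thresholds (negated biases)
b2 = [2.0, 2.0]

codes = layered_thresholding(x, [D1, D2], [b1, b2])
print(codes)  # [[1.5, 1.5], [1.0, 0.0]]
```

With these toy values, the second-layer estimate keeps only the first atom, the same support as the code g2 = [2, 0] used to build x, although the nonzero value is shrunk by the thresholds. The choice of thresholds is what decides whether the correct support survives, which is the kind of condition a theoretical analysis of such networks can make precise.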

3 comments

Articles 4-6 on the site

The articles can be read on the website

http://www.cs.technion.ac.il/~elad/publications/journals/

They are not the kind of articles that spark a chain of reactions here, but they are the kind that the journal Nature publishes online, and they are interesting.

In my opinion, this is a breakthrough discovery by Michael Elad.

Earlier, he published a book titled Sparse and Redundant Representations, which deals with recovering a large amount of information from a small number of samples. That book is also important, so this is at least his second well-known work.