How do we know if we can trust a car without a driver or an airplane without a pilot? A graduate of the Weizmann Institute has developed a method for testing the reliability of software written by computers.

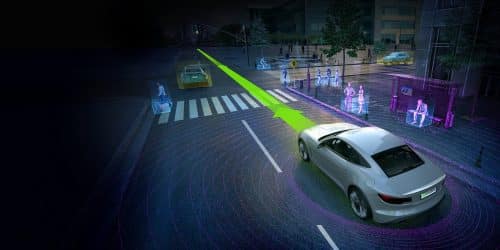

Our lives may depend on being able to check the calculations of our machines. Actions such as driving a car or flying a plane require a quick assessment of the situation and decisions made within a fraction of a second. The day may come, however, when the sophisticated algorithms that make machines autonomous - for example, a car that drives without a driver, a robot that navigates on its own, or even a plane that flies itself - are "written" by computers. Moreover, this new type of software, required for particularly complex tasks, often outperforms the software that humans are able to write. But can we put our trust in these programs?

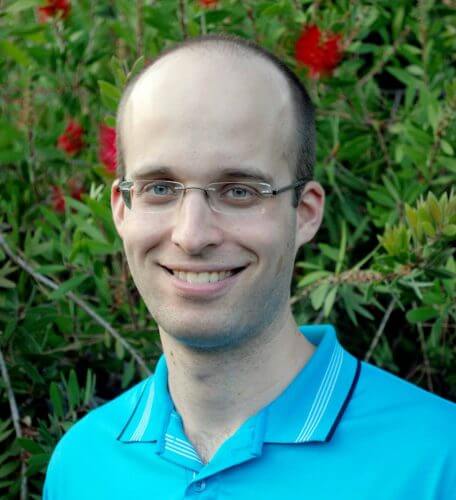

Dr. Guy Katz, a graduate of the Weizmann Institute who is about to join the faculty of the Rachel and Selim Benin School of Computer Science and Engineering at the Hebrew University, has developed a method that can verify the accuracy of certain programs "written" by machines. These programs are known as "deep neural networks" because their structure resembles that of nerve cells in the brain, and they are produced through "machine learning", which imitates the human learning process. "The problem is," explains Dr. Katz, who conducted his doctoral research in the laboratory of Prof. David Harel of the Department of Computer Science and Applied Mathematics, "that the software is a sort of black box".

A neural network consists mainly of many switches, each of which may be in one of two states - "on" or "off". A program that has 300 switches has 2^300 possible configurations. Ostensibly, to know whether the software will work correctly every time, one would have to test each of the possible configurations, and this is clearly a problem: how can you test something so complex? Moreover, early tests of autonomous cars have already shown that unexpected events will always occur when driving in the real world.
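To get a feel for the size of that number, here is a small Python sketch of the arithmetic: n two-state switches give 2 to the power of n configurations. The function name and the testing rate of one billion configurations per second are assumptions made purely for illustration; they do not come from the research.

```python
# Illustrative arithmetic only: n on/off switches allow 2**n configurations.
def configurations(n_switches: int) -> int:
    """Number of distinct on/off settings for n two-state switches."""
    return 2 ** n_switches


total = configurations(300)

# Python integers have arbitrary precision, so the exact value is available.
digits = len(str(total))

# Hypothetical testing rate (an assumption, not a figure from the article).
TESTS_PER_SECOND = 10 ** 9
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
years_needed = total // (TESTS_PER_SECOND * SECONDS_PER_YEAR)

print(f"2^300 has {digits} digits")
print(f"Exhaustive testing would take a number of years with "
      f"{len(str(years_needed))} digits")
```

By comparison, a million configurations at the same assumed rate would take about a thousandth of a second.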

In his postdoctoral research at Stanford University, Dr. Katz worked with software designed to prevent aircraft collisions, developed by the Federal Aviation Administration (FAA) in the United States. He sought to reduce the number of possible configurations that needed to be tested by creating a method to determine which of the many possible situations could not actually occur. Together with Clark Barrett, David Dill, Kyle Julian and Mykel Kochenderfer, all from Stanford University, Katz managed to reduce the number of configurations to be tested from 2^300 to about a million. A million may sound like a lot, but this number is within the limits of a computer's computational capacity, while the total number of possibilities is far beyond the reach of the most powerful computer.
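The idea of ruling out situations that cannot occur can be illustrated with a toy example. The sketch below is not the team's method; it uses simple interval arithmetic, a much coarser technique, on a hypothetical four-switch layer whose weights, biases and input ranges are invented for illustration. For each switch it computes the lowest and highest value its input signal can take. If the signal can never be negative, the switch is always "on"; if it can never be positive, the switch is always "off". Only the remaining switches contribute configurations that still need testing.

```python
from typing import List, Tuple

Neuron = Tuple[List[float], float]   # (weights, bias) of one switch
Box = List[Tuple[float, float]]      # (low, high) range for each input


def preactivation_bounds(weights: List[float], bias: float,
                         box: Box) -> Tuple[float, float]:
    """Lowest and highest possible value of w.x + b for any x in the box."""
    lo = hi = bias
    for w, (x_lo, x_hi) in zip(weights, box):
        if w >= 0:
            lo += w * x_lo
            hi += w * x_hi
        else:
            lo += w * x_hi
            hi += w * x_lo
    return lo, hi


def count_remaining_configurations(layer: List[Neuron], box: Box) -> int:
    """Upper bound on the on/off patterns that can still occur in one layer."""
    undecided = 0
    for weights, bias in layer:
        lo, hi = preactivation_bounds(weights, bias, box)
        # A switch is undecided only if its signal can be both negative
        # and positive somewhere in the allowed input range.
        if lo < 0 < hi:
            undecided += 1
    return 2 ** undecided


# Hypothetical layer and input ranges, invented for this example.
layer: List[Neuron] = [
    ([1.0, 1.0], 0.5),     # signal is always positive: always "on"
    ([-1.0, -2.0], -0.1),  # signal is always negative: always "off"
    ([1.0, -1.0], 0.0),    # signal can have either sign: undecided
    ([2.0, 0.5], -3.0),    # signal is always negative: always "off"
]
box: Box = [(0.0, 1.0), (0.0, 1.0)]

naive = 2 ** len(layer)
remaining = count_remaining_configurations(layer, box)
print(f"naive: {naive} configurations, after pruning: {remaining}")
```

In this toy case three of the four switches are fixed by the input ranges alone, so only 2 of the 16 nominal configurations remain. Real verification tools work on far larger networks and use considerably more refined reasoning than this sketch.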

When the research team tested the software being developed by the Federal Aviation Administration, in a process known as "verification", they found that all of the tested features passed the test, except for one, which was later fixed. An article describing these results will be presented at the Computer Aided Verification (CAV) conference, to be held this year.

Dr. Katz intends to continue researching ways to verify the functionality of programs written using machine learning. Besides the Federal Aviation Administration, Dr. Katz and the Stanford team are working with Intel on an autonomous vehicle project. This project, he says, is several orders of magnitude larger than the one they have already completed: "Despite the commonality of all systems that use deep neural networks, there are also unique parts to each system. We work in close cooperation with the engineers and experts who develop these systems, in order to understand which features should be tested, and how the test can be accelerated. This field still requires human involvement."
