This is what Gil Pratt, director of the Toyota Research Institute, said during a panel on autonomous vehicles at CES. The lack of effective methods for reliable testing may delay the arrival of autonomous cars. Other speakers stressed the need for a solution for an interim period that may last decades.

The biggest barrier to robotic vehicles is humans. There was hardly a keynote by an industry speaker at CES that did not feature an autonomous car. On the agenda: how does the human factor change the future of cars, and is the human-vehicle interface the weak link on the road to the automated vehicle?

Many exhibitors, including Toyota, Mobileye, Hyundai, Tesla and others, presented their ideas. Rival camps, Nvidia on one side and Intel and Mobileye on the other, announced cooperation agreements with major car brands such as Audi, BMW, Mercedes-Benz and Volvo, or with Tier 1 suppliers to the automotive industry such as Bosch, ZF and Delphi. A long line of high-tech companies, including Israeli ones, presented image-sensor, LIDAR and radar technologies.

Prof. Amnon Shashua, founder, CTO and chairman of Mobileye, said that teaching vehicles human intuition will be the last piece of the autonomous-driving puzzle. The overall message was clear: engineers can now build autonomous cars.

Gil Pratt, director of Toyota's research institute, took the stage at the company's press conference and asked, "How safe is safe enough?"

He pointed out that people have learned to tolerate some 35,000 traffic deaths every year in the US. But would they be willing to endure even half that carnage if it were caused by robotic vehicles?

"Emotionally, we don't think so," Pratt said. "People have zero tolerance for machine-caused deaths."

Many engineers in the automotive and high-tech industries have adopted the common assumption that autonomous cars are much safer than human-driven cars.

But how do people really feel about machines that can drive but can also kill? Once the human factor enters the equation, it is hard to ignore Pratt's observation or to dismiss it as overly conservative.

Philip Magni, founder and consultant at Vision Systems Intelligence (VSI), said the statement was pragmatic for a company like Toyota, since in the coming years cars will still be sold to real drivers, who will want safety and comfort.

Given that it will take decades for robotic cars to replace all human-driven cars, it is not enough to talk about robotic cars starting to hit the road in 2021. We now have to talk about a reality in which human-driven cars share the road with autonomous ones, not only in the coming years but for decades.

Many car manufacturers, not just Toyota, may find it difficult to stick to the road maps they have set, even those with aggressive plans for developing an autonomous car (for example, launching a Level 4 autonomous car in 2021).

Last year, deep learning was the standard answer to the hardest problem of all, fully autonomous driving. That is still true, but deep learning is no panacea.

The non-deterministic nature of neural networks has become a cause for concern for anyone required to test and verify the safety of autonomous cars.

Shashua pointed out: "Of all the technologies the company works on (sensing, mapping), we are developing one that we call Driving Policy. Driving policy is the missing piece in the autonomous-driving puzzle." Driving policy means using artificial intelligence to teach autonomous vehicles, for example, how to merge into traffic at roundabouts.

In short, Magni of VSI said this is a problem that will be difficult to solve. Roger Lanctot, associate director of the automotive practice at the research firm Strategy Analytics, said: "Building human behavior into software means creating a black box that no one currently has any way of testing or verifying for safety. A solution may take ten years. Alternatively, the auto industry may bend some safety standards to accommodate a testing process for autonomous cars based on deep learning."

Indeed, the companies are preparing for the interim period between driver aids and autonomous driving. Toyota envisions a future in which, "through biometric sensors throughout the car, Concept-i will be able to recognize what you feel. The information will be analyzed by the car's AI system. If you feel sad, the AI will analyze your emotion and, if necessary, take over the driving, drive you safely to your destination and protect you."

Philip Magni agreed that driving policy is difficult to achieve. According to Magni, Mobileye will have to solve it by working with its partners (Intel, Delphi). Mobileye does not have the hardware to support driving policy, so it is teaming up with Intel and developing the software for BMW on an Intel SoC.

This poses a challenge for insurance companies such as Swiss Re (which presented at CES as one of NXP's partners). According to Lanctot, insurers will have to figure out how to fund safety assessments of the software and silicon in cars.

This comes, of course, on top of the ethical question of who is responsible in an accident involving an autonomous vehicle: the passengers, the manufacturer, or the component makers?

"Supercomputer" for the car

NVIDIA is known as a developer of graphics processors (GPUs). These processors not only make faster and more sophisticated computer games possible; they are also used in supercomputers alongside central processors, as accelerators for parallel processing.

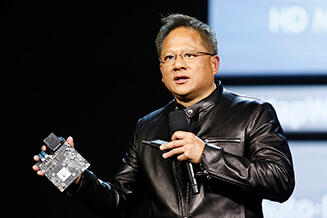

NVIDIA CEO Jen-Hsun Huang gave the opening keynote at CES, the largest consumer electronics show in the world, currently taking place in Las Vegas. According to him, one reason for the rapid leap in the capabilities of artificial intelligence systems is their pairing with GPUs in countless data centers around the world, which can process the mountains of data coming from end devices, the IoT.

There is one field where the cloud is important but not sufficient, so these capabilities must be brought into the vehicle itself: the automotive field. "When it comes to the computer driving an autonomous vehicle, any improvement in processing matters, because it ultimately shows up in response time," Huang said.

Audi and NVIDIA are currently joining forces to develop a Level 4 autonomous car (that is, a car that can manage on its own but still requires a driver who can take control in an emergency). "We are jointly developing the car with the most advanced artificial intelligence by 2020."

The new platform is called DRIVE, and it will use artificial intelligence and neural networks to let the car drive itself. NVIDIA demonstrated it in a test car that drove on California roads in December 2016.

Level 4 is the first level at which a car can be considered fully autonomous. It is designed to perform all safety-critical driving functions and to monitor road conditions for the entire trip. At the exhibition, the two companies showed a Q7 four-wheel-drive demonstration vehicle which, according to Huang, learned to drive in 44 days thanks to artificial intelligence.

Even sooner, in the coming months, Audi will introduce the A8, the first car with Level 3 autonomy, meaning it will be autonomous only on part of the route and part of the time.

Huang also presented NVIDIA's plan to become the Amazon of computer games: the company will set up supercomputer centers around the world, and instead of buying a game console, anyone will be able to access the cloud from their personal computer, play games of the same quality, and pay for playing time instead of buying the game.


9 comments

Autonomous vehicles are not feasible until there is software with human intelligence. Besides, people and machines will never get along on the road, and solving that would require huge investments in infrastructure that no one can finance. A fully autonomous car also won't happen, because people will not give up the thrill of driving (especially young people).

Ari

I think this reinforces what I said. Accidents that are the "machine's" fault make much more noise. You remember far more details about this accident, even though there have since been several accidents with the same number of deaths (correct me if I'm wrong; at least that is how it is for me). On top of that, this accident has the scariest element of all: helplessness.

Another reason autonomous vehicle accidents have a greater impact is that they will be well documented (and we know a picture is worth a thousand words).

P.S.

The accident in question really raises the question of whether an autonomous vehicle would even stand a chance in such a novel situation: one where a vehicle fault (in the brakes or steering) causes the car to disobey the computer.

A few years ago, there was an accident that made headlines: an entire family was killed after the car's brakes failed.


The best historical precedent for the autonomous car is the elevator.

The first elevators were operated by a person who "drove" them: he was responsible for opening and closing the doors so that no one got caught between them, and he knew how to stop at every floor. Over the years, "autonomous" mechanical technologies were developed and this person was no longer needed. People were afraid: what would happen if the elevator closed its doors on someone, or didn't stop at the right floor? The solution was to keep a person in the elevator to press the button for your floor, a transitional period that got the fearful used to it.

So, then as now, people are afraid of progress. Today, too, we are in a transitional period, and in the coming years people will stop being afraid and let the machine improve their quality of life.

Human nature is to focus on the unusual, so when there are many accidents caused by humans, we tend to filter them out.

A robotic accident, on the other hand, is something new that jumps into the headlines. In addition, robotic accidents may well differ in nature from human ones, and some will echo the Terminator fears expressed in our science-fiction films.

One moment you are riding in a system the manufacturer says is safe, and the next you are sitting in an emotionless Terminator, with no steering wheel and no way to take control, that because of a software bug is driving onto the sidewalk, toward a cliff, or straight into a truck with a full trailer for no reason.

That happens with humans who fall asleep or pass out drunk, too, but we will see all kinds of strange things, especially in the early stages of automated driving systems.

Another issue: today the car manufacturer is responsible for manufacturing the mechanical systems properly, and if it did so correctly, it bears no responsibility for accidents. That will not be the case when it is the manufacturer, through the robotic system it developed, that is actually driving rather than the passenger. Legal responsibility in an accident will then fall on the manufacturer as well, so no wonder car manufacturers are concerned: they go from almost zero liability in an accident to almost full liability.

People also accept deaths from car accidents more readily than deaths from terrorism (as reflected, for example, in levels of anxiety). In my opinion, at least one reason is the feeling of lack of control. With accidents, it is easy to delude ourselves that it is up to us, in how we behave and how careful we are, so it is easier to reassure ourselves that "it won't happen to me." The feeling that an autonomous vehicle can malfunction while I have no control over it will be very hard for people to accept. It feels almost like Russian roulette. Even if this roulette carries a smaller chance than the usual risk of driving on the road, that will not change the feeling. People will accept much greater risks if they feel that they, or a person they trust, have control over their chance of being killed.

An interesting fact: the chip was from Mobileye, which says it is harder to put artificial intelligence into a chip that will drive a car (as opposed to today, when it is an assistance device for a human driver). Tesla severed ties with Mobileye and switched to NVIDIA, which announced its chip just now and which probably hasn't even reached the market yet.

Avi

A person has already been killed in a Tesla autonomous vehicle, and there was no big outcry.