The program "The Laboratory" (rebroadcast today, 17/10/2006, at 16:00 on Channel 1) is dedicated to the eye and vision and covers, among other things, this research. Multidisciplinary studies carried out at several universities in the Munich area link the flying ability (and image analysis) of flies with that of robots.

Common and clumsy-looking, blowflies are nevertheless artists in flight. They change direction suddenly, stop in mid-air, spin around their own axis at lightning speed, and make precise landings. All these maneuvers are made possible by their extremely fast vision, which keeps them from losing their bearings as they rush toward food. The question remains: how does the fly's tiny brain process such a complex stream of images and signals so quickly and with such efficiency?

To get to the bottom of the issue, members of the cluster of excellence Cognition for Technical Systems (CoTeSys) in Munich, Germany, created an extraordinary research environment: a flight simulator for flies. In this simulator they investigate what goes on in the brains of flies while they fly. Their goal is to put these capabilities at people's service by developing robots that can grasp, touch and, of course, move, while learning about the environment in which they operate.

The fly's brain makes unbelievable things possible. The animal dodges obstacles in fast flight, reacts within a fraction of a second to the person trying to catch it, and navigates unerringly to the smelly morsels it feeds on. Researchers have long known that flies take in many more images per second than humans do. To the human eye, anything more than 25 individual images per second merges into continuous motion. A fly, on the other hand, can perceive a hundred images per second as individual scenes and interpret them quickly enough to navigate and to determine its position in space precisely.

However, the fly's brain is only slightly larger than the head of a pin, several orders of magnitude too small to accomplish all this if it worked the way the human brain does. It must have a simpler and more efficient way of turning the images from the eye into visual perception, a topic of great interest to robot builders today.

Robots have great difficulty taking in their surroundings through their cameras, and even more difficulty understanding what they see. Even detecting obstacles on their own work surface takes them a long time. Robots and people therefore have to be kept apart, for example by fencing off the area where robots work from the areas where humans work. Direct, supportive cooperation between humans and machines, however, is a primary goal of CoTeSys.

As part of CoTeSys, neuroscientists from the Max Planck Institute of Neurobiology are investigating how flies manage to navigate their environment and why their movement is so efficient. Under the leadership of the neurobiologist Prof. Alexander Borst, they built a flight simulator for flies. On the simulator's screens, the researchers presented the flies with a variety of patterns, movements and sensory stimuli and recorded their reactions. The insect was held in place, with electrodes attached, so that the researchers could analyze what was going on in the fly's brain as it seemingly buzzed and zigzagged around the room shown in the simulator.

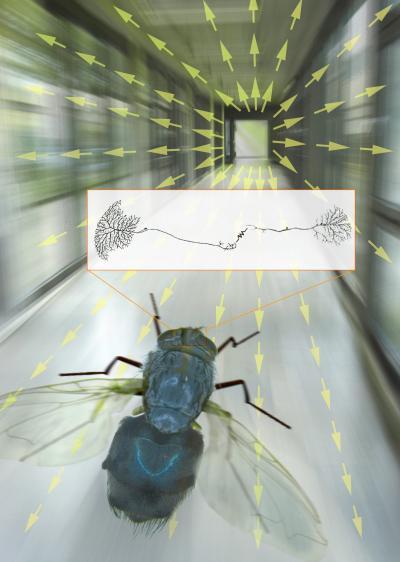

The first result showed one thing clearly: flies process the images from their eyes, which cannot move, in a different way than the human brain processes visual signals. Above all, the scientists examined how the fly's brain handles the patterns of apparent motion that arise during movement through space, known as "optical flow fields". When the fly moves forward, for example, objects rush past at the sides while objects ahead appear to grow larger. Near and far objects appear to move at different rates. The first step for the fly is to build a model of these movements in its tiny brain.
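The observation that near and far objects appear to move at different rates can be made concrete with a little geometry. The following is a minimal sketch, not taken from the research itself: it assumes an observer moving in a straight line past stationary points, and the function name, speed and distances are illustrative choices only.

```python
import math


def apparent_angular_speed(speed, distance, bearing_deg):
    """Angular speed (rad/s) at which a stationary point seems to move
    for an observer flying in a straight line.

    speed       -- observer speed in m/s (hypothetical value)
    distance    -- current distance to the point in m
    bearing_deg -- angle between the flight direction and the point
    """
    return speed * math.sin(math.radians(bearing_deg)) / distance


SPEED = 2.0  # m/s, an assumed flight speed used only for illustration

near_side = apparent_angular_speed(SPEED, 0.5, 90)  # close object, directly to the side
far_side = apparent_angular_speed(SPEED, 5.0, 90)   # distant object, directly to the side
straight_ahead = apparent_angular_speed(SPEED, 0.5, 0)  # object dead ahead

print(f"near, to the side: {near_side:.2f} rad/s")
print(f"far, to the side:  {far_side:.2f} rad/s")
print(f"straight ahead:    {straight_ahead:.2f} rad/s")
```

Under these assumptions the nearby object sweeps across the field of view ten times faster than the distant one, while a point straight ahead does not shift sideways at all; it only appears to grow.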

The speed and direction in which objects in front of the fly's eyes appear to move from moment to moment create a typical pattern of motion vectors, the optical flow field. In the second stage, this flow field is evaluated by what is known as the lobula plate, a higher level of the visual center in the brain. In each hemisphere of a fly's brain there are only 60 cells responsible for this task. Each of them responds particularly strongly when presented with the pattern that suits it. To analyze the flow field, it is important that the motion information from both eyes is brought together. This happens through a direct connection between specialized neurons called VS cells. In this way the fly receives accurate information about its position and movement.
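The idea of cells that each respond most strongly to one particular motion pattern can be sketched as simple template matching. This is a deliberately simplified illustration, not the fly's actual circuitry: it assumes a one-dimensional ring of 36 viewing directions, objects at equal distance, and two hypothetical templates, and all names and values are invented for the example.

```python
import math

N_DIRECTIONS = 36  # assumed number of viewing directions around the horizon
AZIMUTHS = [2 * math.pi * i / N_DIRECTIONS for i in range(N_DIRECTIONS)]


def rotation_flow(yaw_rate):
    """Turning on the spot: the whole scene shifts at the same angular speed."""
    return [-yaw_rate for _ in AZIMUTHS]


def translation_flow(speed, distance):
    """Flying straight ahead past objects at equal distance: no sideways
    shift ahead or behind, the largest shift directly to the sides."""
    return [speed / distance * math.sin(a) for a in AZIMUTHS]


def response(flow, template):
    """Cosine similarity between an observed flow and a stored template."""
    dot = sum(f * t for f, t in zip(flow, template))
    norm = math.sqrt(sum(f * f for f in flow)) * math.sqrt(sum(t * t for t in template))
    return dot / norm if norm else 0.0


# Two hypothetical "cells", each tuned to one motion pattern.
TEMPLATES = {
    "rotation": rotation_flow(1.0),
    "translation": translation_flow(1.0, 1.0),
}


def classify(flow):
    """Return the best-matching template name and all response strengths."""
    scores = {name: abs(response(flow, t)) for name, t in TEMPLATES.items()}
    return max(scores, key=scores.get), scores


label, scores = classify(rotation_flow(3.0))
print(label, {name: round(s, 3) for name, s in scores.items()})
```

The point of the sketch is that recognizing a movement does not require reconstructing the scene: each template only has to be compared with the incoming pattern, and the strongest response indicates the kind of self-motion.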

Prof. Borst explains the importance of the research: "Thanks to our results, the network of VS cells in the fly's brain that is responsible for rotational movement is now among the best understood circuits in the nervous system." These efforts do not end as pure basic research, however. The discoveries of the neuroscientists in Martinsried are of particular interest to the control engineers at the Technical University of Munich (TUM), with whom Prof. Borst collaborates in the project.

Under the leadership of Prof. Martin Buss and Dr. Kolja Kühnlenz, researchers from TUM are working on intelligent machines that can observe their environment through a camera, learn from what they see, and react according to the current situation. The long-term goal is to create intelligent machines that can interact with humans directly, effectively and safely. Even in factories, the safety barriers between humans and robots are meant to fall. This will only be achieved if the images the robot sees can be analyzed quickly and efficiently.

For example, the TUM team is developing tiny flying robots whose position and movement during flight will be controlled by a computerized visual analysis system inspired by the brain of the fly. One mobile robot, the Autonomous City Explorer (ACE), was challenged to find its way from the institute to Marienplatz in the heart of Munich, a distance of about 1.5 km, by stopping pedestrians and asking them for directions. To do this, ACE had to interpret the gestures of the people pointing the way, maneuver along the pavement and cross roads safely.

More natural communication between intelligent machines and humans is unthinkable without effective image processing. The insights gained from the flight simulator for flies add to the knowledge of researchers from a variety of disciplines and offer an approach that may be simple enough to be transferred from one domain to another, from insects to robots.

The researchers' announcement

To watch the program on the Israel Broadcasting Authority website

5 comments

Fascinating topic. The far-reaching possibility of making quick decisions thanks to faster information intake, rather than faster processing, could certainly change chip development for any machine, not just robots that depend on video analysis or sensors.

The advanced robots developed today for factory production processes, at BMW for example, have no situation-dependent response. They perform a series of actions based on the assumption that the component is in a certain place and requires a certain treatment. The robot will not change its movement or insert an additional action needed to correct, say, the component's position, let alone return the component to the previous robot that did not finish its job properly. Nor does each group of robots have a central processing system that can recognize a malfunction and change the sequence of operations in order to prevent destructive work on a properly prepared component. The most common option is to trigger an alarm so that a human hand intervenes.

If such research yields practical results, the entire field of robotics will change.

I saw the report on TV. The topic was well presented and illustrated in the article above as well.

The main thing is not the therapist.

Unfortunately, this article was translated in several sittings because of constraints. I will try to rewrite it now. If you still find mistakes in about an hour, write to me and I'll fix them in the morning.

Why does the article look like it was automatically translated by Google or Babylon?

Avi Blizovsky, it says under the photo that you are the editor. Did you proofread this masterpiece before it was published?

Incomplete sentences, subjects that change in mid-sentence or change their gender, and more.