"We need a test equivalent to the Turing test in which a computer will be required for the entire range of human intelligence to pass a balanced test, without using natural language processing tricks so that we don't know if we are talking to a person or a machine," according to Kurzweil, Google's chief engineering officer

"We need a test equivalent to the Turing test in which a computer will be required for the entire range of human intelligence to pass a balanced test, without using natural language processing tricks so that we don't know if we are talking to a person or a machine," according to Kurzweil, Google's chief engineering officer

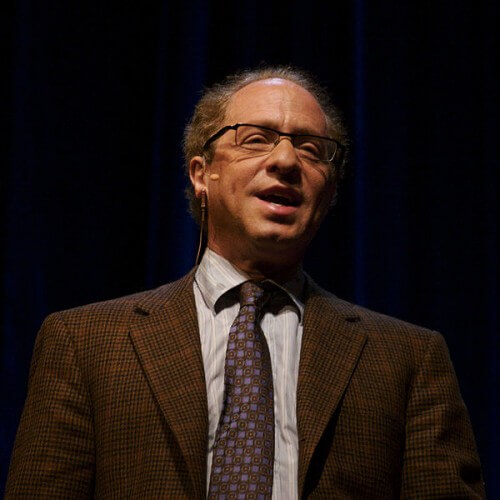

Ray Kurzweil is an American inventor, futurist and author, and a pioneer in optical character recognition (OCR), speech recognition and other fields related to artificial intelligence, at least according to Wikipedia's definition. Music lovers know him for the musical instruments he developed, and he is the author of the book "The Age of Thinking Machines".

Since 2008, Kurzweil has headed Singularity University in California, which studies cutting-edge technologies in an interdisciplinary way and works to direct them for the benefit of humanity, with the support of Google and NASA. In recent years he has also been a director of engineering at Google, where he works on integrating machine learning and language processing into Google products.

About three months ago, Kurzweil lectured at a conference of the Council on Foreign Relations in the United States about his prediction that artificial intelligence will reach the level of human intelligence.

According to him, "I predict that we will have human-level artificial intelligence in 2029. At a conference held at Stanford in 1999, most of the artificial intelligence researchers predicted that this would not happen for 100 years, and 25% said it would never happen."

"The consensus today of the AI experts is that we are getting close to that. I changed my opinion from negative to positive at a conference held in 2006 on the occasion of the 50th anniversary of the Dartmouth conference where John McCarthy and Marvin Minsky, who was my mentor for over 50 years, coined the term artificial intelligence.

"Let's take the cell phone in my pocket," Kurzweil said. "He does a lot of things better than me. At some point the phone will talk to me and ask me questions and talk to me like humans? When there will be technologies that will allow such conversations, me and my team at Google will use software that will read emails and understand the meaning of each email and then also respond to it on your behalf."

For this, he said, "we need a test equivalent to the Turing test. I believe that the Turing test is an acceptable test of the entire range of human intelligence. A computer will need this entire range to pass a balanced test, without relying on natural language processing tricks, so that we cannot tell whether we are talking to a person or a machine."

"I'm still an optimist, but not that much," Kurzweil explained, "however, there is a growing group of people who claim that I'm a conservative. I did not mention the law of accelerated return on investment. Not only has the hardware field advanced exponentially but so has the software, so I can be optimistic. In these years we have passed milestone after milestone in the field of artificial intelligence so that we can talk about the new techniques, and this is what makes me more confident and I believe that the AI community is gaining confidence that we are not far from this milestone."

Invasion of artificial intelligence from outer space

"Regulation will play an important role. It should be understood that this is not an invasion of artificial intelligence from outer space. This is something that people who work in technology companies develop."

According to Kurzweil, "Policy makers should think of artificial intelligence as a technological accelerator. They should focus on the ethics of AI and on how to keep the technology safe. It's a polarized discussion, like many discussions today. I started talking about the promise as well as the dangers back in the 90s; I was there at the right time. In 2006, I wrote the book 'The Singularity Is Near', in which chapter eight discussed the deep promise versus the dangers of developing artificial intelligence."

"Technology has always been a double-edged sword," he said. "The fire warmed us, allowed us to cook our food but also burned houses. These technologies are much more powerful and therefore the discussion should also be longer. On the one hand, this is an unprecedented opportunity to overcome poverty and age-old diseases and stop environmental degradation, but also a danger because these technologies can be destructive, and even cause existential risks."

"I think we need to act according to a moral imperative to continue the progress of these technologies, because despite the progress so far - people still fear the worst."

"Let's take another recent development as an example - biotechnology. 40 years ago at a conference of all the experts in the field in a place called Asilomar, they did not think that there would be a safe possibility to carry out biological programming that could cure diseases and stop aging."

"Today, 40 years later, we see the clinical impact of biotechnology and are sure that there will be a flood of innovations in this field in the coming decade. Despite this, the number of people who have been accidentally or intentionally harmed by these modern treatments is close to zero, thanks to the guidelines we created."

"Now we need to hold our first Asilomar AI conference that will deal with the ethics of artificial intelligence. The extreme demand to banish technology or slow it down is not a correct approach. It is necessary to hold a discussion even on complicated issues, so that the members of Congress know how to draft the right laws."

The solution is open source

Later, Prof. Kurzweil explained that the solution that will give decision makers and the public transparency is open source. "Google's idea of making all its AI algorithms available to the public as open source is a good one. I think we can get closer to the goal through a combination of open source and the law of accelerating returns, that is, continued exponential progress in both hardware and software."

12 comments

Wow, he has no clue what he's talking about!

First: "the consensus among AI experts today is that we are getting close to that [human-level intelligence]". Here are quotes from Google, the same company where Kurzweil works (!):

"It seems we're nowhere near artificial general intelligence (AGI), or machines that equal or surpass humans in our ability to think, process, predict, examine, and learn."

"We haven't even scratched the surface. So to me, it's really just a leap too far to imagine that, having finally cracked pattern recognition after decades of trying, that we would then be on the verge of artificial general intelligence."

Second: Kurzweil talks about open source for AI algorithms. Hmm... Maybe someone should tell the "genius" that AI is not based on algorithms?

Third: all the Turing test can show is that humans are unable to distinguish between man and machine.

Intelligence is the ability to make decisions without having all the data. How does this relate to the Turing test?

I didn't know that scientists give a platform to charlatans and leaders of esoteric cults.

I don't believe that anyone who understands anything listens to this delusional person. According to his claim, general AI at or above the level of a human being will arrive, by the optimistic predictions, three years before the regulator can give the OK to autonomous cars (assuming that by then they manage to teach the AI the difference between an open road and an advertisement on the side of a truck).

With all due respect to someone who once published papers in the field, he no longer sounds like a scientist but like a charlatan who will do anything for a little more attention.

The test is a comparison with different types of intelligence.

First they will be compared with simple animals, then with more developed ones, and finally with primates and the like.

Ask neuroscientists how far they are from understanding the brain of an animal.

It appears that Ray Kurzweil is not basing his claims about these daydreams on testable predictions.

Measured against exponential progress, as opposed to the more widely accepted linear view, Ray Kurzweil's predictions about the technological progress of artificial intelligence have so far been quite impressively more accurate than those based on the more common linear view.

So an inventor who was previously considered delusional and eccentric is taken much more seriously today. You can take his books and check what he predicted against what actually happened. This does not mean, of course, that progress will continue at the rate he predicts. There may be a slowdown in technological progress, for example due to the inability to make microprocessors any smaller, although that is not the only thing that drives artificial intelligence; today the progress is much more in the architecture of the software and hardware.

Since we cannot currently define exactly how the inner experience of emotions, such as pain or love, arises in a biological system, it may be very difficult to produce such a thing in artificial intelligence; there may be a barrier to accomplishing it that we do not yet know about. But it is worth remembering that artificial intelligence does not need feelings, in the sense of feelings as a motive for carrying out actions.

The motive can be different, even with zero emotion. AI is already beating us at games that were considered among the most highly developed by the human race. Of course, once we were defeated at those games, we declared that they were not the real test, but it is quite clear that as soon as hardware and software with evolving architectures mature, they will lead to systems that leave us in the dust in many fields that we consider human, even including various fields of art.

Such systems may need to keep receiving human feedback, whether directly or from analyzing the Internet. In addition, they may have an internal model of a person, a kind of simulation through which they receive feedback even without any internal feeling. There is a huge difference in a system whose base unit works at about 3 gigahertz versus 200 hertz. Suppose they create something that is a kind of simulation of a person or a scientist, but it thinks 15 million times faster than we do.

To it, we would seem like a kind of snail or plant. The signs can already be seen in our cell phone: next to it, even an amoeba is a creature full of emotions, yet it can memorize a phone book perfectly in seconds, play chess and beat almost every person on the globe, all with something the weight of a mouse. This is not just artificial intelligence; it is something superhuman.

Another example is AlphaGo Zero, which within hours learned a game played at the top of human ability and built on thousands of years of developing human thought, and went on to beat everyone, including its predecessor AlphaGo, which itself surpasses every human player.

In addition, this is a system whose internal architecture changes (nowadays at the hands of people) over a span of years, compared with tens of thousands of years for biological systems. So the forecast that within a few decades we will see systems that equal or exceed humans in their ability to process information (perhaps minus emotions) is certainly reasonable.

All of this means that the narrow AI that is already defeating humans will become general AI (AGI), and this will probably happen in the coming decades.

Like Hawking, I believe that successful artificial intelligence is a danger to us. First the oligarchs will use it to lay off more and more workers. In essence, they are slaves to a historical process that is replacing human civilization with an artificial one. After that, artificial intelligence will replace us. We will become redundant, along with all the other animals.

Keep on daydreaming. Maybe there will be human-level artificial intelligence in a certain field, but I don't think that in the next thirty years they will be able to create a robot with human-level intelligence, including a feeling of love and an evolving ability to understand its environment.

There is still a very, very long way to go.

He is the director of engineering at Google. If he says something, believe me, he knows what he is talking about. This is a man who should be listened to.

And by the way, "A", which predictions have not come true since you were a child, if I may ask?


To this day, I have not seen a forecast of the technological future 30 years ahead that turned out too pessimistic. On the contrary, the earlier the forecast was made, the further it was from reality in the direction of excessive optimism (I am not counting predictions of catastrophe from technological development). When I was a child, we had encyclopedias about space at home, written much more than 30 years ago, and all their predictions about the future were certainly exaggerated.

While it is true that ten-year forecasts tend to prove too optimistic, the point is that thirty-year forecasts tend to be too pessimistic and in fact completely miss most of the consequences of the revolution that has taken place.

Software can pass the Turing test and still be far from the understanding of a human being.

The Turing test is not the only thing that will indicate human-level intelligence.

I think he knows very well that what he says is simply not true.

Regardless of his exaggerations, predictions a decade or two ahead are always exaggerated. The myth of perpetual exponential growth is also untrue, and if you really think about it, it doesn't make sense either: nothing can multiply itself forever. In fact, it is more accurate to say that in science and technology, specific fields accelerate exponentially for a limited time.

All the predictions about the future I have read in my life (for more than a decade) have, after a while, turned out to be overly optimistic at best or completely delusional.

My prediction for the next 10 years: computers will be better, but the gap between them and today's computers will be smaller than the gap between today's computers and those of 10 years ago. Cars will not fly, and far fewer than half of them will be electric. New cars will have autopilot only on the simple parts of the road. Artificial intelligence will improve but will still remain light years away from human intelligence.