How do chatbots fool us? What will happen in 2045? The artificial intelligence column marks a hundred, and says goodbye

In June 2002 I began writing a series of columns on artificial intelligence in the journal "Galileo". With this column, in the February 2011 issue, I say goodbye to the column and its readers. After about a hundred columns (in two issues the column did not appear, but there were issues in which, alongside the column, I also published articles on research in computer science and artificial intelligence), I would like to turn to other pursuits, among them delving a little deeper into my own small area of specialization in artificial intelligence. In this final column, I hope I will be forgiven if I indulge in some nostalgia and return to a few of the topics the column has covered, to review the changes and developments that have taken place in artificial intelligence.

I am grateful to the editors of "Galileo", who gave my writing a home and helped improve the content and style of the articles. Thanks to the first reader of each column, my wife Nechama, who contributed her patience, her comments and her rich knowledge of psychology. Thanks to the readers, without whose comments and reactions I would not have persevered in writing until now, and special thanks to the researchers and developers of artificial intelligence, from whose wide river of ideas and innovations I drew the topics for my writing.

And finally: at the end of this column I will explain why it is difficult to predict the future of artificial intelligence. And who knows, perhaps there will also be developments that lead to future meetings between me and the column's readers.

"Stop us if you've heard it before: in the near future we will be able to build machines that learn, use logic and even emotions to solve problems, the way people do": this is how an article by Massimiliano Versace and Ben Chandler, scientists from Boston University, opens (see link at the end of the column).

The article, published in December 2010 in the prestigious technology magazine IEEE Spectrum, explains why a new type of electronic component makes it possible, for the first time, to mimic the operation of the human brain efficiently. The component is called a "memristor", a combination of the English words "memory" and "resistor". The theoretical possibility of such a component was predicted as early as 1971, but only in 2008 did engineers at HP manage to build memristors at the sizes typical of modern electronics: about 50 nanometers, which means that 20,000 such components can be lined up along one millimeter. Two years later, the size had already been reduced to three nanometers.

On behalf of DARPA (the American Defense Advanced Research Projects Agency), Versace and Chandler are working with HP to build electronic components whose structure mimics that of the brain. Such imitation is not new in itself: this column has already mentioned the use of supercomputers and special-purpose architectures to simulate certain parts of the brain. The new article argues that such approaches are possible in principle but impractical, because the traditional separation between computation and memory wastes enormous amounts of energy: every time a piece of data is needed for a calculation, it must be retrieved from memory and sent to the processing unit. The results of the calculation must of course be saved as well, so they are sent back to the memory unit. The memristor unites the two functions in one tiny component, and therefore heralds the possibility of imitating the structure of the brain in a machine that will not differ much from the human brain in size or in the energy it needs. For comparison, the RoadRunner computer that was used to simulate about one percent of the human brain consumes about three megawatts of power, as much as several thousand average households.

Electronic components, however innovative, do not by themselves constitute a thinking brain. The authors of the article present their plans to combine the new hardware components with software they will develop, in order to create "artificial beings" that learn by themselves how to deal with dynamic and diverse environments. The ambition is to reach the cognitive abilities that characterize mammalian brains.

Will the researchers from Boston meet their ambitious goals? They are well aware of past failures, but are still determined to succeed. It is worth mentioning that the agency funding the project was (under its former name, ARPA) the one that initiated the research that led to today's Internet. Not every research project supported by DARPA is so successful, but it is not unreasonable to expect that even if the new components and software do not lead to an intelligent computer, they will teach us a great deal and enable innovative uses of the software.

Is the dream possible? Is it desirable?

As the opening of the article shows, its authors are well aware that such promises have been made before and that artificial intelligence has failed to keep them. When the general public began to hear about computers, in the 1950s, the media referred to them as "electronic brains", and an expectation arose that within a decade or two it would be possible to talk to such an "electronic brain" as if it were a person. These expectations did not stem from a misunderstanding on the part of the man in the street: many scientists believed in this too, and they kept believing in the same dream even as, every decade, the target date was pushed back by another decade or two.

For anyone who believes that it is possible to explain human thinking, emotions and creativity without resorting to spiritual or metaphysical arguments, the realization of the dream is only a matter of time. The failures of the early hopes were not in vain: from each disappointment we learned a lot about the amazing capabilities of the brain - even a simple brain like that of a fly - and that the challenge is much greater than we initially imagined. Those lessons were used to create many useful technologies (more on that later), and contributed to scientific developments in brain research.

Others do not believe at all, for philosophical or religious reasons, that true artificial intelligence is possible. Members of this group can not only benefit from the technological products of artificial intelligence research, but also take encouragement from each decade that passes without the fulfillment of those old promises.

For a third group it is not a dream but a nightmare. The science fiction writer and futurist Vernor Vinge, although he does not share this view, articulates one of its central arguments well: once a computer reaches a human level of intelligence, it will also be able to reach higher levels, initially by speeding up computation or adding hardware, and later by improving the design of the next generation of computers. The result is a process that accelerates rapidly until super-intelligent machines appear, whose intelligence may rise to levels unimaginable to us. Some think that at that moment the fate of the human race will be sealed. As we will see later, this is the argument underlying the idea of the singularity, but Vinge and those who share the idea see it not as the destruction of humanity but as its salvation.

Consequences for body and mind

The fear of thinking machines that will rise up against their creators, which the author Isaac Asimov called the "Frankenstein complex", is an old one. When the Swiss watchmaker Pierre Jaquet-Droz presented in Spain, at the end of the 18th century, an automaton (a mechanical doll) that wrote the phrase "I do not think, therefore I do not exist?", the Spanish Inquisition imprisoned the inventor on suspicion that he was possessed by a demon (incidentally, the automaton built by Jaquet-Droz has been well preserved and still works today). As in the legend of the Golem of Prague two hundred years earlier, a machine imitating human behavior was inconceivable without the assumption that it was operated by a supernatural force.

As mentioned, these fears are still voiced today: not only fear of a direct threat to human existence, but also fear of what machines may teach us about ourselves. Even one of the most eloquent advocates of artificial intelligence and of viewing the brain as a complex machine, Prof. Douglas Hofstadter, expressed strong discomfort on listening to the works of a composition program called "Emily Howell". In a book he edited together with the program's developer, Prof. David Cope (see link at the end of the column), Hofstadter admits that the listening troubled him, because "things that touch me at the deepest level - and especially pieces of music, which I have always treated as honest messages from one soul to another - may be created effectively by mechanisms that are thousands or millions of times simpler than the complex biological mechanisms from which the human soul grows."

Cope himself, with an uncompromising materialistic approach, sums up his opinion with blunt simplicity: we humans are controlled by our bodies and find it difficult to control them as our intellect would like, for example when we eat more tasty food than we planned to. Hence, according to Cope, we are much more robotic than we think: "The question is not whether computers have a soul, but whether humans have a soul."

How do we know if the dream - or the nightmare - has come true?

The debates about the possibility of emotions and creativity in a machine built by humans constitute an extensive platform for philosophical discussions about the essence of consciousness and the connection between body and mind. The developments in artificial intelligence provide arguments for philosophers and thinkers from all sides of the debate, thereby enriching the discussion and sharpening the question of what distinguishes us as living and thinking beings.

In a more practical vein, one must ask whether we could even agree that a machine has reached the level of human intelligence. Suppose someone presented a machine to us and claimed that it understands Hebrew (of course, we could equally expect the machine itself to invite us to a conversation...). How would we test this claim? The field of artificial intelligence has offered several answers, starting with the famous "Turing test", defined by Alan Turing in 1950. One version of this test requires that a human judge, conducting written conversations with a human and with a machine, be unable to decide which is the human and which is the machine. Unfortunately, Turing's arguments are often completely misinterpreted, both by philosophers and by software developers who aspire to declare success in the test. A prominent example of such misinterpretation is, in my opinion, a competition devised in 1990 by Hugh Loebner, an American inventor and industrialist, which invites software developers to submit their programs to a similar test. Although many in the artificial intelligence community prefer to ignore the competition and its competitors (rightly so, in my opinion), it is interesting to examine the tactics used by the programs competing for the prize: dodging questions, changing the subject, going on the attack, giving long and winding answers, and so on. If a program that behaves like this can indeed convince a judge that it is human, we will be forced to draw depressing conclusions about the nature of humanity.

Criticism of the competition did not prevent the press from reporting, at the end of October 2010, on an impressive achievement by one of the competing programs, which managed to deceive a human judge in a long conversation on unrestricted topics, in contrast to previous years, when conversation time was limited, and to the first competitions, in which the topics of conversation were limited as well. A brief examination showed that the media's enthusiasm was unjustified: the winning program benefited from the fact that in one of the rounds the draw paired it with a person who, for some reason, conducted a confused and evasive conversation with the judge. Remember, the judge must decide which of the two is the person and which is the machine. The judge was misled by the person's odd behavior, while the program opposite him used the usual tricks to evade answers in a fluent, if somewhat hostile, manner.

Fortunately, most developers of "chatbots" do this not to win the Loebner Prize but to build practical services, such as customer service for airlines, clinics and the like. These technologies, which combine spoken language understanding, information retrieval and decision-making, are already available today in computers installed in cars, in systems that answer customer service calls, on Internet trading sites and in smartphone applications. Usually these chatty robots meet the need for which they were developed, and even improve with each new version, but when they fail the results can be funny, annoying and perhaps even harmful. The potential for harm comes from the human user's tendency, because the software speaks the user's language, to mistakenly attribute to it abilities it does not possess: judgment, "common sense" and the ability to identify unusual cases. Therefore, in cases that the developers did not anticipate, a misleading answer from the software may lead to frustration and to loss of time and money.

Accomplishing human tasks, not necessarily in a human way

In the columns of this series I tried not to define what artificial intelligence actually is, and I did not limit the topics to cases in which everyone would likely agree that the computer exhibits some degree of intelligence. The reason is simple: many times in its history, artificial intelligence has faced challenges that seemed to require "real" intelligence, but once software was developed that met those challenges, that software was perceived as operating in a simplistic way, according to mechanical rules with no spark of intelligence, and therefore as undeserving of being called intelligent. The most prominent example is chess: for most of us, human chess champions exemplify the heights that human intelligence can reach (at least as far as their achievements in chess are concerned), but the fact that chess software now reaches the same heights does not convince us that we have already succeeded in building an intelligent computer. Prof. Cope tells of a similar case: a music lover who attended a concert, not knowing that the "composer" was Cope's software, was deeply moved by the piece, but about six months later, after discovering its source, claimed that he could clearly hear the dull mechanical nature of the computerized composition process.

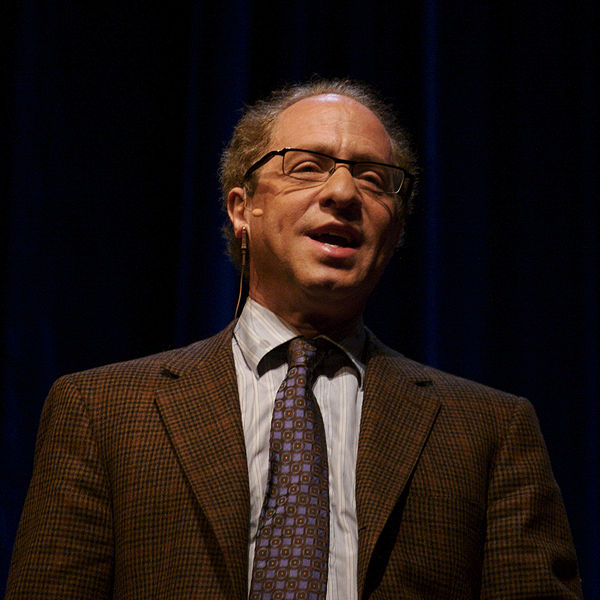

Marvin Minsky, one of the pioneers of artificial intelligence, saw this as early as the 1960s: "Artificial intelligence is the study of those computing problems that have not yet been solved." Ray Kurzweil is a futurist, inventor and artificial intelligence researcher credited with breakthroughs in many fields, including computers that recognize written text and read it aloud, computer-based composition and performance of music, and speech understanding. Kurzweil calls this definition "the boundary has moved", and highlights how unusual it is by comparing it with other fields: "A technique of inserting genes from one species into the genome of another species does not cease to be part of bioengineering once it is perfected." The peculiarity seems to lie in our tendency to stop seeing intellectual brilliance wherever we understand the technique by which it was achieved. As Alan Turing wrote back in 1947, "for the same object it is possible that one person will consider it intelligent and another person not; the second person is the one who discovered the laws of behavior of the object." I can think of only one other field with a similar peculiarity: the work of magicians, who do not explain how a trick is performed so as not to disappoint their audience.

Therefore there is a practical reason to sometimes set aside the big questions about the meaning of intelligence and simply observe the impressive achievements of software and robotics. In the last decade we have seen great progress in the ability of autonomous vehicles to navigate long routes and urban environments (in a series of competitions that were also funded by DARPA); robots that move in different ways - walking, crawling, climbing, jumping and more - and even dance ballet and hip-hop; software that helps protect nature and endangered animals; automatic translation tools that, although imperfect, may save human lives by mediating between rescue forces and disaster victims; tools for fighting crime and defending against terrorists; robots that help perform daily tasks at home or in the workplace; tools that support scientists in planning and conducting experiments, predicting protein structures, and performing economic and social analyses; achievements in various games, from billiards to poker; and many other areas that this column has covered over the years.

Although these developments deal with very different fields, they have a common denominator: the attempt to free people from dangerous or repetitive tasks, thus making life easier or solving problems for which the existing manpower is insufficient. A prominent example of this is the growing need to care for the elderly population: here, technology is in a race against time, to be able to create robotic caregivers before we find out that there are not enough young people left who are able and willing to do these jobs.

Another common denominator is the emphasis on the result rather than on the method: if the software succeeds in its task, it does not matter whether it did so the way a person would. Furthermore, if the machine is not independent and needs human guidance, but still provides services that the same human guide needs, this is not "cheating" but a good solution to a real need. In other words, even where we have not been able to overcome the differences between machine and human that cast doubt on the chances of machines ever becoming intelligent, cooperation between man and machine may still be fruitful and effective, precisely because each contributes unique and different abilities that complement the other's. Thus, for example, there are now tools for filtering and blocking spam that work faster - and possibly more accurately - than any human assistant. These tools use methods and information that are impractical for human intelligence (complex statistical calculations on huge amounts of information collected from all users), but do not claim to understand the content of the mail they block or let through. The same goes for automatic translation: even the best translation software is far from the quality and accuracy of human translators on texts that are not restricted to a narrow topic and a limited vocabulary; but human translators use translation software to speed up their work and make it more efficient.

Artificial intelligence in every computer and every palm

Looking back, one of the most prominent differences in artificial intelligence between the beginning of the first decade of the twenty-first century and its end is the accessibility of artificial intelligence technologies, especially in everything related to imitating human senses and communication: interpreting images and videos, face recognition, and verbal communication in speech and text. In almost all of these the computer is still far from human ability (although under certain conditions, such as face recognition in photographs of reasonable quality, the computer may already outperform humans), but in the last decade it has become useful enough for a wide variety of needs. Furthermore, such uses are already available on every computer and even on "smartphones": photo management software catalogs photos according to the people who appear in them; English-language videos on YouTube come with an automatic transcript, which is even translated into other languages; applications for iPhone and Android devices let you photograph signs in a foreign language and receive a translation into your own language, or carry out spoken instructions (such as "Reserve two places at a quiet restaurant within walking distance of my house for tomorrow at eight in the evening"); and other apps identify the people captured by the phone's camera and attach details about them to the photo, such as the last status they posted on Facebook. The gap between the artificial intelligence labs and the ordinary user has never been smaller.

Alongside what the developers of smart software give to millions of Internet users, they also receive valuable contributions in return: users volunteer their time and abilities to teach the computer to recognize objects, classify and identify music, hold conversations, help find the spatial structure of proteins, and more. Even without direct cooperation, the vast amount of information generated by the incessant conversations on social networks is a "mine" in which software digs to extract information for marketers, epidemiologists, sociologists and linguists.

On the way to the singularity

In artificial intelligence, as in the branches of science and technology on which it is based, the pace of progress is increasing. It is a self-feeding process that creates positive feedback: artificial intelligence adopts methods and ideas from computer science and draws inspiration from the life sciences, such as the way nerve cells are linked together and work (as in the Boston University study with which we opened); the social functioning of ant colonies, which gives a colony its own "character" that is preserved even as ants die and new ones hatch; the impressive learning capacity of the mammalian immune system; and of course the process of natural selection, the engine that led to the wonderful complexity of all of these. This is how new software and robots are created that, in cooperation with human intelligence, accelerate the pace of scientific development in a variety of ways: examining hypotheses and generating new ones, planning experiments and devices, analyzing the mountains of information collected in experiments, and even designing by themselves a new and improved generation of software and robots.

These positive feedback processes are not unique to artificial intelligence, of course, but artificial intelligence can no longer be set apart as a separate field of research or technology: it is an organic part of a growing number of projects and studies, even ones not identified as close to it. Many futurists who try to predict the results of the ever-accelerating pace of development conclude that it leads to the "singularity": a future point in time at which computers will achieve intellectual abilities considerably higher than those of humans, and will continue to improve themselves beyond anything humans can imagine. As a result the world will change so radically that we, living before the singularity, cannot predict what it will be like. Kurzweil predicts that the singularity will arrive in 2045, and will sweep humans along with it, so that the differences between man and machine will be erased. If Kurzweil and his colleagues are right, artificial intelligence will allow us to fulfill completely the ancient maxim inscribed on the temple of the oracle of the god Apollo at Delphi, "Know thyself", by contributing to our understanding of the thoughts and feelings that make us human, only a short time before it changes forever the meanings of intelligence and of humanity.

Israel Benjamin works at ClickSoftware, developing advanced optimization methods.

The full article was published in the magazine Galileo, February 2011

4 comments

There is no such thing as artificial intelligence in the world until there is knowledge of every subject, whether the brain, medicine, weapons or more. Artificial intelligence, or intelligence, is found in those who possess knowledge in one field or another; those who bring that knowledge from potential into action are the intelligence, or for this purpose the artificial intelligence. We have the option of choosing what we want to develop according to our needs, or according to the purpose the development should serve. This requires many restraints and moral codes that not everyone has.

If such a computer learns from its environment and develops abilities, we will have to redefine the concept of "artificial" for it still to be called artificial intelligence.

http://www.tapuz.co.il/Forums2008/ViewMsg.aspx?ForumId=716&MessageId=152178724&r=1