"The technology takes a set of characteristics provided by the user and uses them to divide the video into the scenes that make it up," said Daniel Rothman, a video analytics researcher at the IBM Research Laboratory in Haifa.

The IBM Research Laboratory in Haifa recently developed a system that takes videos, analyzes them, and divides them into scenes. The system was presented at a conference the company held this week at its Haifa research laboratory.

The event brought together experts from academia, from IBM's laboratories, and from the Big Blue units that provide cognitive computing services.

According to Tal Drori, director of the multimedia analysis department at the Haifa laboratory, this is the second year in a row that the conference has focused on machine vision, and this year the emphasis was on video technology.

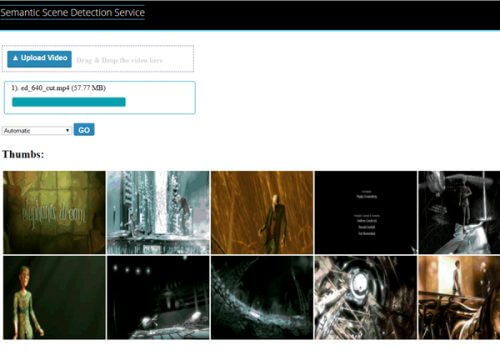

Daniel Rothman, a video analytics researcher at the laboratory, described the mechanism for dividing a video clip into the scenes that make it up. "Here in the Haifa laboratory, we developed a technology that takes a set of characteristics provided by the user and divides the video into scenes according to those characteristics."

He said that "the software is smart enough to 'watch' any video and understand what is in it, whether it is a TED talk, which is a simple example, or much more complex scenes in movies. Take a scene in a Hollywood movie: the visual information is not the same throughout. When you film one actor and then a second actor while they are having a dialogue, the shots are obviously completely different, but it is still the same scene. Then something completely different can happen, and the goal of the algorithm is to use this information to divide the content semantically."

Drori noted that "dividing a video into scenes can serve as a building block for many things: searching for scenes, skipping between them, and more. Many algorithms that analyze video run into problems when the content is heterogeneous. A system that can hand them homogeneous segments makes classification easier."

Rothman illustrated this with a long video clip of a vacation: in one part the travelers are in the desert, in another at sea, and in a third in the forest. "When you divide the film into separate scenes, each one is easier to characterize," he said.
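The article does not say how the Haifa system decides where one scene ends and the next begins. Purely as an illustration, and not IBM's method, the Python sketch below assumes that each shot has already been reduced to a numeric vector of user-chosen characteristics (here, hypothetical desert, sea and forest scores) and applies a common windowed-linking heuristic: a boundary is placed between two shots only if nothing in the few shots before it resembles anything in the few shots after it. That lookback is what keeps a dialogue that cuts back and forth between two actors inside a single scene. The names `segment_scenes` and `cosine` and the `window` and `threshold` parameters are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def segment_scenes(shots: List[Sequence[float]], window: int = 3,
                   threshold: float = 0.8) -> List[List[int]]:
    """Group consecutive shot indices into scenes (hypothetical heuristic).

    A boundary is placed between shot i and shot i + 1 only if no shot among
    the last `window` shots up to i is at least `threshold`-similar to any
    shot among the next `window` shots from i + 1.
    """
    if not shots:
        return []
    scenes = [[0]]
    for i in range(len(shots) - 1):
        before = range(max(0, i - window + 1), i + 1)
        after = range(i + 1, min(len(shots), i + 1 + window))
        linked = any(cosine(shots[a], shots[b]) >= threshold
                     for a in before for b in after)
        if linked:
            scenes[-1].append(i + 1)   # same scene continues
        else:
            scenes.append([i + 1])     # no link across the cut: new scene
    return scenes


if __name__ == "__main__":
    vacation = [
        [1.0, 0.0, 0.0], [0.9, 0.1, 0.0],                   # desert
        [0.0, 1.0, 0.0], [0.1, 0.9, 0.0], [0.0, 1.0, 0.1],  # sea
        [0.0, 0.0, 1.0], [0.0, 0.1, 0.9],                   # forest
    ]
    print(segment_scenes(vacation, window=2))  # [[0, 1], [2, 3, 4], [5, 6]]
```

With `window=1` only adjacent shots are compared, so an A, B, A, B dialogue would be split into four separate scenes; a lookback of two or more shots is what lets the repeated A and B shots link up into one scene.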

Applications enabled by video analytics

One of the speakers at the event was Balge Jacques, head of the business intelligence division of IBM's cloud video services. He described how running video analysis in the cloud makes it possible to build smart applications, for example ones that let users search videos for relevant content.

Jacques's division was formed from two IBM acquisitions made in consecutive months: Clearleap, purchased last December, and Ustream, acquired in January, which is where he came to IBM from.

Jacques described applications being built within IBM that use cognitive computing to recognize scenes automatically and to gauge the audience's response to what is shown in the video.

Dror Porat of the IBM laboratory in Haifa, one of the conference organizers, said that "as Watson's cognitive and cloud computing capabilities grow, they can be used to gain more insight into data that until now has been a kind of black hole for us: video data. At IBM's World of Watson (WOW) conference, held about two weeks ago in Las Vegas, many speakers noted that video is the fastest-growing field on the Internet and that within about five years it will account for about 80% of network traffic. However, the quality of video search still depends mainly on the information supplied by the people who upload the videos, who in many cases provide only sparse details and in other cases none at all."

Porat mentioned another development of IBM's Haifa laboratory, based on cognitive computing, that was presented at the conference: augmented reality glasses that can recognize objects. "One of the interesting applications of this development is a technician who wears smart glasses and looks at a machine or device he needs to service, and immediately sees data overlaid on his field of view that guides him step by step through servicing it. For example, arrows that show him which part of the machine needs to be repaired and how to do it," he said.

IBM unveiled cloud services for video recognition, analysis and personalization

The new services, which are based on Watson, allow organizations to extract insights from video content in order to segment their audience and personalize each customer's viewing experience.

IBM presented new services in the field of video technology, which are based on its cognitive computing system, Watson, at the WOW (World Of Watson) conference, held recently in Las Vegas. The services allow organizations to derive insights from the video content available to them, in order to segment the viewing audience and customize the viewing experience of each customer.

The amount of video content available on the Internet is constantly growing, but the vast majority of it cannot be searched with traditional tools, because the unstructured nature of video makes it difficult to process and search. Many companies store video for marketing, documentation and training purposes, but they are unable to offer the right content to the right audience. Cognitive technology is the next critical step in mining and analyzing the complex content contained in video, allowing companies and organizations to understand this content better and offer consumers the content that interests them.

The new services, which are accessible through IBM's cloud, analyze video that until now had to be handled manually, and allow events in a video to be analyzed in real time. They combine Watson's application programming interfaces (APIs) with IBM's video streaming technology to track comments and videos on social networks in near real time by analyzing the content uploaded to them.

The video scene recognition service automatically segments a video into separate scenes, making it easier to locate and deliver targeted content. In experiments, IBM used cognitive technologies that understand semantics and patterns in language and images to identify, at a high level, what the content is about and where its topics change. For example, the system can split a lecture or presentation into separate clips covering its different parts, a task that until now required a person to watch the video and divide it manually.

Another service provides insights about the audience. These are produced by combining IBM's cloud video technologies with its platform for extracting insights from media content, which uses Watson's APIs, in order to help identify viewers' preferences by analyzing their viewing habits and the posts they publish on social networks.

It is also possible to analyze audience reactions at live events, which are increasingly streamed as video in real time. This service combines Watson's speech-to-text technology and AlchemyLanguage APIs with IBM's video technology to provide information while the event is still under way. The experimental technology processes the natural-language speech in the video footage and analyzes it against the content that event participants post to social networks, in order to present a detailed analysis of the audience's response to the live event.

This new capability could be used to refine and adjust the messages that speakers present at an event even before they leave the stage. For example, a company unveiling new products at a large event would know, at the very moment of the unveiling, which products or capabilities arouse enthusiasm or skepticism, and which aspects it should focus on.
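The article describes the live-event service only at a high level. As a hedged illustration of a final aggregation step, the Python sketch below assumes that speech-to-text and topic detection have already turned the talk into time-stamped segments labeled with the topic on stage, and that each social-media post already carries a timestamp and a sentiment score in [-1, 1] from some upstream model. It credits each post to the most recently started topic, allowing a grace period (`lag`) for posts written just after a segment ends, and averages sentiment per topic. All names (`TranscriptSegment`, `Post`, `sentiment_by_topic`, `lag`) are hypothetical and do not represent IBM's or Watson's APIs.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class TranscriptSegment:
    start: float  # seconds since the event began
    end: float
    topic: str    # hypothetical label, e.g. the product being presented


@dataclass
class Post:
    time: float       # seconds since the event began
    sentiment: float  # assumed score in [-1, 1] from an upstream sentiment model


def sentiment_by_topic(segments: List[TranscriptSegment], posts: List[Post],
                       lag: float = 60.0) -> Dict[str, float]:
    """Average post sentiment per on-stage topic (hypothetical sketch).

    A post is credited to the latest-starting segment that has already begun
    and did not end more than `lag` seconds before the post; posts that match
    no segment are ignored.
    """
    ordered = sorted(segments, key=lambda s: s.start)
    scores: Dict[str, List[float]] = {}
    for post in posts:
        candidates = [s for s in ordered if s.start <= post.time < s.end + lag]
        if candidates:
            topic = candidates[-1].topic  # most recently started topic wins
            scores.setdefault(topic, []).append(post.sentiment)
    return {topic: mean(values) for topic, values in scores.items()}


if __name__ == "__main__":
    talk = [TranscriptSegment(0, 300, "phone"), TranscriptSegment(300, 600, "watch")]
    feed = [Post(100, 0.8), Post(250, 0.6), Post(320, -0.4),
            Post(500, -0.2), Post(700, 0.5)]
    print(sentiment_by_topic(talk, feed))
```

In this toy run the post at 320 s falls inside the phone segment's grace period but is credited to the watch segment, which started more recently; the post at 700 s matches no segment and is dropped. The phone topic averages about 0.7 and the watch topic about -0.3.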

The new tools came out of the cloud video division that IBM established in January of this year, which combines IBM's R&D capabilities with the tools and know-how of Clearleap and Ustream, both of which IBM acquired.

2 comments

What is the problem? Afraid of competitors?

The article is narrow and does not address the revolution now taking place in artificial intelligence (this is common knowledge, not my opinion).

Since 2012 there have been breakthroughs, some at universities, some at IBM with the development of the Synapse hardware, and some at giant companies such as Google and Elon Musk's OpenAI. Algorithmic perception and inference now exceed human accuracy in certain cases. The artificial intelligence released by Google and OpenAI inspires admiration, but also fear. On hardware ranging from NVIDIA's ordinary gaming graphics cards to IBM's Synapse, neural network computations that once took weeks now take minutes or less.

On the theoretical side, a new science emerged and spread like wildfire out of Berkeley, Stanford and MIT: it is called deep learning, and it includes convolutional neural networks. Everyone agrees that this amazing inference is not yet consciousness. But in this area too, the psychiatrist Giulio Tononi developed a theory of consciousness based on information theory (integrated information theory) and began to answer questions such as: when does an algorithm become aware of itself, when does it begin to say "I am an algorithm, I understand"? He also tried to quantify how well an object is understood. Tononi's work is not yet accepted by the entire scientific community, but it is widely thought to offer some insights.

The site is somewhat missing the revolution happening in the field, and again, this is according to the experts. Revolutions do not happen every day, and the skeptics will immediately start objecting. Friends: just search Google for deep learning and you will see what inferences Google's software draws from an image and what language the software builds from graffiti. Even at the universities here, this mathematics is taught four years behind the USA.