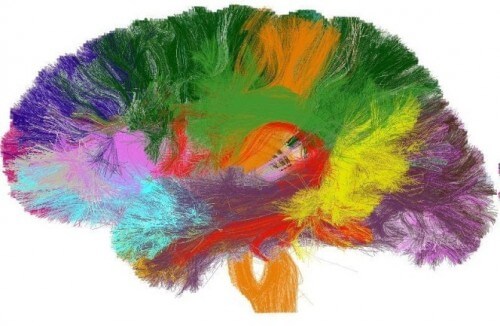

Building a comprehensive digital simulation of the brain may change the face of neuroscience and medicine, and reveal new ways to create even more powerful computers.

It's time to change the way we study the brain. Reductionist biology, which examines the parts of the brain separately - its neural circuits and the molecules that operate in them - has brought us very far, but it cannot explain how the human brain works: the information processor inside our skull that may have no equal in the entire universe. We must assemble and not just disassemble, build and not just analyze. For this we need a new paradigm that combines deconstructive analysis with constructive synthesis. The father of reductionism, the French philosopher René Descartes, wrote about the need to study the parts and then put them back together to recreate the whole.

Putting things together to create a complete computer simulation of the human brain is the goal of a project that is trying to build a fantastic new scientific tool. Nothing like this has ever been built, but we have begun the task. One way to think of this tool is as the most powerful flight simulator ever built, except instead of simulating flight through the air, it will simulate a journey inside the mind. This "virtual brain" will run on supercomputers and integrate all the data that neuroscience has produced and will produce until that moment.

The digital brain will serve as a resource for the entire scientific community. Researchers will book time on it in advance to run experiments, as they do today with the largest telescopes. They will use it to test theories about how the human brain works in health and in disease. They will use the simulation not only to develop diagnostic tests for autism and schizophrenia, but also to develop new treatments for depression and Alzheimer's disease. The wiring of tens of trillions of neural circuits will inspire the design of brain-like computers and intelligent robots. In short, it will be a revolution in neuroscience, medicine and information technology.

A brain in a box

Scientists will be able to run the first simulations of the human brain at the end of this decade, when the supercomputers will be powerful enough to perform the enormous amount of calculations required for this. It is not necessary to decipher in advance all the mysteries of the brain to build this device. Instead, the device itself will create a framework into which we will integrate what we already know, and which will allow us to predict by calculation what we do not know. These predictions will show us where we should direct future experiments to avoid wasting work. The knowledge that will be accumulated will be integrated with the existing knowledge, and the "holes" will be filled with details that will be closer and closer to reality, until we finally get a unified model of the brain, which will accurately reproduce it from the level of the entire brain to the level of the molecules.

Building this tool is the goal of the Human Brain Project (HBP), an initiative that includes about 130 universities around the world. It is one of six projects competing for a prestigious prize that may reach one billion euros, which the European Union will grant over ten years to each of the two winners, to be announced in February 2013.

We need this simulation tool for at least two reasons. The first is medical. In Europe alone, brain diseases affect one person in three, about 180 million people in total, and the number will increase as the population ages. At the same time, pharmaceutical companies are not investing in new treatments for diseases of the nervous system. A holistic view of the brain will allow us to reclassify these diseases in biological terms, rather than as collections of symptoms. With this wider view, we will be able to move forward and develop a new generation of treatments that target the vulnerabilities at the root of the diseases.

The second reason is that computing is approaching barriers that will prevent further development. Despite the constant increase in processing power, today's computers are unable to perform many tasks that animal brains perform without difficulty. For example, although computer scientists have made great progress in visual recognition, machines still have difficulty making use of the context in an image, or using arbitrary scraps of information to predict future events as the brain can.

Furthermore, because more powerful computers require more energy, we are approaching the point where meeting the demand will no longer be practical. The performance of today's supercomputers is measured in petaflops - a million billion, or 10^15, operations every second. The next generation, which is expected to appear around 2020, will be a thousand times faster and will be measured in exaflops - a billion billion, or 10^18, operations per second. The first computer of this scale will probably consume about 20 megawatts of electricity, about as much as a small city in winter. If we want to create more powerful computers that can do some of the simple but useful things that humans are capable of doing, while saving energy, we will need a revolutionary new strategy.

The human brain, on the other hand, uses only about 20 watts, like a small lamp, to perform a variety of intelligent operations - a millionth of the power that an exaflop computer would consume. It is therefore logical to try to imitate its mode of operation. But for that we need to understand the multi-level organization of the brain, from genes to behavior. The knowledge already exists, scattered in different places, and we need to unify it. Our tool will serve as the platform on which we can do this.
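The comparison above is simple arithmetic, and it can be checked in a few lines. The sketch below uses only the round figures quoted in the text; the variable names are illustrative and not part of any project software.

```python
# Round figures quoted in the text; the variable names are illustrative assumptions.
PETAFLOPS = 10**15                  # operations per second, today's supercomputers
EXAFLOPS = 10**18                   # operations per second, expected next generation
EXASCALE_POWER_WATTS = 20_000_000   # about 20 megawatts
BRAIN_POWER_WATTS = 20              # about 20 watts

# How much faster an exaflop machine is than a petaflop machine.
speedup = EXAFLOPS // PETAFLOPS

# The brain's power draw as a fraction of the exaflop machine's.
power_ratio = BRAIN_POWER_WATTS / EXASCALE_POWER_WATTS

print(f"Exascale vs. petascale speed: {speedup}x")
print(f"Brain power as a fraction of an exascale machine: {power_ratio:.0e}")
```

The two results correspond to the "thousand times faster" and "a millionth" figures in the text.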

Critics say our goal of modeling the brain is unachievable. One of their main claims is that it is impossible to reconstruct the connections between the hundred trillion synapses in the brain, because we cannot measure them. Indeed, we cannot measure the whole network of connections, but we also have no intention of trying to do so, or at least not all of it. We intend to reproduce the variety of connections between brain cells by other means.

The key to our approach is to reconstruct the basic plan according to which the brain is built: the set of rules that guided its creation throughout evolution, and that guide it anew in each developing embryo. In theory, these rules are all we need to start building a brain. The skeptics are right that the resulting complexity is enormous, hence our need for supercomputers to capture it. But discovering the rules themselves is a much more tractable problem, and if we solve it, there is no reason why we cannot apply them just as biology does and build a brain in a virtual "test tube".

The rules we are talking about are the ones that control the genes that create the different brain cells, and the programs that determine the arrangement of the cells throughout the brain and the connections between them. We know such rules exist, because we discovered some of them while preparing the groundwork for HBP. We started this almost twenty years ago, when we measured the properties of single nerve cells. We collected huge amounts of data on the geometric properties of different types of nerve cells, and reconstructed hundreds of them on the computer in 3D. We also recorded the electrical properties of the nerve cells using an arduous method called patch clamping, which involves attaching the microscopic tip of a thin glass pipette to the cell membrane in order to measure the voltage across the ion channels in it.

In 2005, it took a powerful computer and a three-year PhD thesis to build a computer model of a single neuron. However, we knew that more ambitious goals would soon become feasible, and that we could build models of larger parts of the brain even if we didn't know everything about them. At the Brain Mind Institute at the Swiss Federal Institute of Technology we launched one of the forerunners of HBP, a project called Blue Brain. The idea was to build "unifying computer models" that would combine all the existing theories and data about a certain part of the brain, reconcile conflicting data within these models, and highlight the points where we lack information.

Synthesis biology

To test the unifying model idea, we decided to build such a model of a structure in the cerebral cortex called a cortical column. These columns are the brain's equivalent of the processor in a personal computer. To use a crude metaphor, if we were to insert into the cerebral cortex a tiny device that acts like an apple corer and pull out a cylinder of brain tissue half a millimeter in diameter and one and a half millimeters high, we would get a column. Inside this tissue is a very dense network of tens of thousands of cells. This design for an information-processing component is so efficient that once evolution arrived at it, it kept using it over and over again until there was no room left in the skull, and the cortex had to fold in on itself to create more space. This is the reason for the convolutions on the surface of our brain.

The column crosses the six vertical layers of the neocortex, the outer layer of the cerebral cortex, and the neural connections between it and the rest of the brain are organized differently in each layer. The organization of these connections is reminiscent of how telephone calls are assigned numbers and routed through a telephone exchange. Inside each column there are nerve cells of several hundred types. With the help of our supercomputer, IBM's Blue Gene, we combined all the available information about how these types fit into each layer, until we arrived at a "recipe" for creating a column like the one found in the brain of a newborn rat. We instructed the computer to allow the virtual neurons to connect in all the ways that real cells connect - and only in those ways. It took us three years to create the appropriate software, which in turn allowed us to create the first unifying model of a column. This was the first demonstration of what we call "synthesis biology" - simulating the brain from the full range of biological knowledge, and using the simulation as a new and practical way to conduct research.

The model we built back then was a static model, analogous to a brain column in a coma. To find out whether it would start behaving like a real living column (albeit one cut off from the surrounding brain tissue), we gave it a "push" - an external stimulus. In 2008 we sent a virtual electrical signal into the virtual column. As we watched, the nerve cells started talking to each other. Action potentials (electrical "spikes"), which make up the language in which the brain works, spread throughout the column, which began to act as an integrated circuit. The potentials flowed back and forth between the layers, just as they do in slices of living brain. Such behavior was not pre-programmed in the model: it emerged spontaneously, because of the way the circuit was built. Furthermore, the circuit remained active even after the stimulation was stopped, and over a short period of time it developed its own internal dynamics - its own way of representing information.
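To give a rough feel for what a "spike" in response to a stimulus means in a computer model, here is a minimal sketch of a leaky integrate-and-fire neuron. This is a standard textbook simplification chosen for brevity, not the detailed cell models described in this article; the function name, parameter values and arbitrary units are all assumptions made for illustration.

```python
def count_spikes(input_current, steps=1000, dt=0.1, tau=10.0, threshold=1.0):
    """Count spikes of a toy leaky integrate-and-fire neuron (arbitrary units)."""
    voltage = 0.0
    spikes = 0
    for _ in range(steps):
        # The voltage leaks toward zero while the input drives it upward.
        voltage += dt * (-voltage + input_current) / tau
        if voltage >= threshold:
            # Threshold crossed: register a spike and reset the voltage.
            spikes += 1
            voltage = 0.0
    return spikes


# A strong stimulus makes the toy neuron fire; a weak one never reaches threshold.
print("strong stimulus:", count_spikes(1.5), "spikes")
print("weak stimulus:", count_spikes(0.5), "spikes")
```

A single toy neuron like this falls silent as soon as its input is removed. The sustained activity described above is a property of the connected circuit, which this sketch does not attempt to reproduce.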

Since then, we have gradually incorporated additional information, coming from laboratories around the world, into this unifying model of the column. The software we developed is also updated frequently, so that each week, when we rebuild the column, it includes more data and more rules and becomes more accurate. The next step is to integrate the data for an entire brain region, and then for an entire brain. In the first stage, it will be the brain of a rodent.

Our efforts will rely heavily on the discipline called neuroinformatics. Vast amounts of brain-related data coming from laboratories all over the world will need to be intelligently integrated. We will then have to look for patterns or rules in this data that describe how the brain is organized. We must translate the biological processes described by these rules into sets of mathematical equations, while developing the software that will allow us to solve the equations on supercomputers. We will also need to write software that builds a brain consistent with the biology. We call this software "The Brain Builder".

The predictions of neuroinformatics about how the brain works, which are constantly updated as new data arrive, will speed up our understanding of the brain without our having to measure every aspect of it. We can make predictions based on the rules we discover, then test them in the real world. One of our goals is to use knowledge about the genes that make proteins in certain types of nerve cells to predict the structure and behavior of these cells. We call this direct link between genes and the nerve cells themselves an "informatics bridge", a shortcut of the type that synthesis biology provides.

Another informatics bridge that scientists have been using for years relates genetic mutations to disease: more specifically, it describes how mutations change the proteins that cells produce. These proteins in turn affect the geometry and electrical properties of the nerve cells, the synapses they form and the electrical activity that is generated in the local microcircuits before it spreads in a wide wave across entire brain regions.

In theory, for example, we can program a particular mutation into the computer model and see how it affects every link of the biological chain. If the symptom (or collection of symptoms) that appears matches what we see in the real world, the virtual chain of events becomes a candidate for the mechanism that causes the disease, and we can even begin to look for possible targets for treatment along it.

This is a highly iterative process. We combine all the data that can be obtained, program the model so that it obeys certain biological rules, run a simulation and compare the "output", meaning the behavior of the proteins, cells and circuits, to the corresponding experimental data. If there is no match, we go back, check the accuracy of the data and refine the biological rules. If there is a match, we bring in more data and add more and more detail while expanding the model to a larger area of the brain. As the software improves, the data integration becomes automatic and fast, and the model behaves more and more like the real biological brain. Creating a model of the entire brain, even though the information we have about synapses and cells is still incomplete, therefore no longer seems like an impossible dream.
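The loop described above (simulate, compare with experiment, adjust the rules, repeat) can be sketched in miniature. The toy below is only an illustration of that cycle, not the project's software: the "model" is a single made-up rule with one parameter, the "experimental data" are invented numbers, and every name is hypothetical.

```python
def simulate(rule_strength, stimulus):
    """Toy stand-in for a simulation: the response scales with the stimulus."""
    return rule_strength * stimulus


def refine(experimental, rule_strength=0.0, tolerance=1e-3, step=0.5, max_rounds=100):
    """Repeat simulate -> compare -> adjust until the output matches the data."""
    mean_stimulus = sum(s for s, _ in experimental) / len(experimental)
    for round_number in range(max_rounds):
        # Compare the simulated output with each (invented) measurement.
        errors = [measured - simulate(rule_strength, s) for s, measured in experimental]
        if max(abs(e) for e in errors) < tolerance:
            return rule_strength, round_number   # match: stop refining
        # No match: adjust the rule in the direction that reduces the mean error.
        mean_error = sum(errors) / len(errors)
        rule_strength += step * mean_error / mean_stimulus
    return rule_strength, max_rounds


# Invented (stimulus, response) pairs generated by a "true" rule strength of 2.0.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
fitted, rounds = refine(data)
print(f"fitted rule strength: {fitted:.4f} after {rounds} rounds")
```

In the real project each step is vastly larger: the "rule" is a body of biological knowledge and the comparison covers proteins, cells and circuits. The control flow, however, has the same shape as this loop.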

To feed the project we need data, and a lot of it. Ethical considerations limit the experiments that neuroscientists can perform on the human brain, but fortunately the brains of all mammals are built according to the same rules (with slight variations between species). Most of what we know about the genetics of the mammalian brain comes from mice, while monkeys have given us valuable insights into cognition. We can therefore start by building a unified model of a rodent's brain, and use it as a starting template from which to gradually develop, detail by detail, the model of the human brain. In fact, the mouse, rat and human brain models will be developed at the same time.

The data collected by the scientists will help us identify the rules that govern the organization of the brain, and verify through experiments that our predictions - the cause-and-effect chains that the model suggests - correspond to the biological truth. For example, at the cognitive level, we know that very young infants have some grasp of the numerical concepts 1, 2, and 3, but no more than that. When we succeed in simulating a baby's brain, the model must be identical to the baby both in what it is able to do - and in what it is not.

Credit: Emily Cooper

Much of the data we need already exists, but it is difficult to access. Collecting and organizing the data is one of the main challenges of HBP. Data from the field of medicine, for example, will be of enormous value to us, not only because impaired function reveals how normal function works, but also because any model we produce must behave like a healthy brain - and suffer from diseases just as a real brain might. Brain scans of patients will therefore be an important source of information.

Today, every time a patient undergoes a brain scan, the results are stored in a digital archive. Hospitals hold millions of scans, which are indeed used for research, but this research progresses so slowly that the resource remains mostly unused. If we can upload all the scans to a "cloud" accessible over the Internet, together with the patients' medical histories and their biochemical and genetic information, doctors will be able to search for patterns in large populations in order to identify diseases. The strength of this strategy stems from the ability to isolate, mathematically, the differences and similarities between all diseases. A cross-university collaboration called the Alzheimer's Disease Neuroimaging Initiative is trying to do just that, by collecting brain scans, cerebrospinal fluid and blood tests from a large number of dementia patients and from healthy control participants.

The future of computing

Another issue, no less important, is computing. The newest Blue Gene computer is a monster on the petaflop scale, with about 300,000 processors crammed into the volume of about 72 domestic refrigerators. Such computational power is enough to simulate a rat brain containing 200 million cells at a cellular level of detail, but not a human brain of 89 billion cells. For this we will need a computer with exaflop power, and even then, simulating the human brain at the molecular level will remain beyond our reach.

Around the world, teams are competing to build such computers. When they succeed, it is likely that, as happened with previous generations of supercomputers, the machines will initially be used to simulate physical processes such as those in nuclear physics. Biological simulation has different requirements, and with the help of major computer manufacturers and other industry partners, our team, which includes high-performance computing experts, will adapt such a computer to the task of brain simulation. They will also develop the software that will allow us to build unifying models from the lowest to the highest level of resolution, so that we can focus the simulation on molecules, cells or the whole brain.

When the brain simulation is completed, researchers will be able to perform experiments on the programmed "subject" as they would on a biological one - with some important differences. To understand what this means, think about the way scientists currently look for the causes of a disease using mice that lack certain genes ("knockout mice"). The researchers must breed these mice - a lengthy, expensive and not always possible process (if, for example, silencing the gene kills the embryo) - and this is even before we take into account the ethical considerations associated with animal experiments.

In the computerized brain they will be able to silence a virtual gene and observe the results in "human" brains of different ages operating in different states. They will be able to repeat the experiment under any conditions they want with the exact same model, which ensures a rigor that is unattainable in animals. This will not only speed up the identification of potential drugs by medical researchers, but will also change the way clinical trials are conducted. It will be much easier to choose a target population, and the screening out of ineffective drugs, or drugs that cause intolerable side effects, will be faster. As a result, the research and development chain will be much faster and more efficient.

What we learn from the simulations will also feed back into computer design. If we know how evolution created a resilient brain, capable of quickly performing many parallel operations on a huge scale, with a huge memory capacity and the energy consumption of an electric light bulb, we can build more efficient computers.

Brain-like computer chips will be used to build "neuromorphic" (neuron-like) computers. The HBP project will print brain circuits on silicon chips, based on technologies developed in the European Union's BrainScaleS and SpiNNaker projects.

The first whole-brain simulations we run on our devices will lack a very basic characteristic of the human brain: they will not develop as a child does. From the moment of birth onwards, the cerebral cortex develops as a result of growth, migration and "pruning" of nerve cells, and as a result of a process that is highly dependent on experiences and known as "plasticity". Instead, our models will skip years of development, start at some arbitrary age, and gain experience from that moment. For this we will have to build the mechanism that will allow the model to change in response to input from the environment.

The decisive test of our virtual brain will come when we connect it to software that represents a body and place it in a realistic virtual environment. The virtual brain will then be able to receive information from the environment and act on it. Only then can we teach the brain different skills and check whether it is indeed intelligent. Since we know that the human brain has redundancy – neural systems that support each other – we can begin to discover which aspects of brain function are essential for intelligent behavior.

The HBP project raises important ethical issues. Even if a tool that simulates the human brain is still far from our reach, it is legitimate to ask whether it would be responsible to build a virtual brain containing more cortical columns than the human brain, or a brain that combines human-like intelligence with a number-crunching ability a million times greater than that of Deep Blue, IBM's chess-playing computer.

We are not the only ones setting a high bar in trying to reunite the fragmented field of brain research. In May 2010, the Allen Institute for Brain Science in Seattle launched the Allen Human Brain Atlas, which aims to map all the genes active in human brains.

The main obstacle holding back any research group working in the field is, it seems, funding. In our case, we can only achieve the goal if we get the financial support we need. Supercomputers are expensive, and the final cost of HBP is expected to match or exceed that of the Human Genome Project. In February 2013 we will know whether we have received a "green light". In the meantime we are making as much progress as possible on a project that, in our opinion, will give us unprecedented insights into our identity as beings capable of reflecting on the effects of light and shadow in a Caravaggio painting, or on the paradoxes of quantum physics.

____________________________________________________________________________________________________________________________________________________________________

About the author

Henry Markram directs the Blue Brain Project at the Swiss Federal Institute of Technology in Lausanne. He has carried out many studies on how nerve cells connect, communicate and learn. He also discovered basic principles of brain plasticity and was involved in developing the "intense world" theory of autism and the theory that the brain computes like a constantly perturbed liquid.

And more on the subject

Links to websites related to the Human Brain Project:

The Human Brain Project: www.humanbrainproject.eu

BrainScaleS: http://brainscales.kip.uni-heidelberg.de

SpiNNaker: http://apt.cs.man.ac.uk/projects/SpiNNake

8 comments

I hope that someday we will be able to connect the brain to a USB port to get information, instead of going to school.

But, then we will expose ourselves to new dangers like brain viruses - and we will have to back up our brains from time to time.

In the worst case, we will format the brain and reinstall Windows 😉

The brain = Ha, Mem, Hit = A real animal.

Since you mentioned Kurzweil, a translation of his book, "The Singularity Is Near", was just released:

http://www.magnespress.co.il/pdf_files/upload/45-400135.pdf

I recommend the first lecture.

Exciting.

Although Ray Kurzweil's vision always sounded impossible to me, it certainly seems more possible now.

http://www.youtube.com/watch?v=tD3plxVNFJc

http://www.kurzweilai.net/henry-markram-simulating-the-brain-next-decisive-years