Babies quickly learn to make sense of the world around them; in fact, even at a very young age, their ability to perceive far exceeds that of any intelligent computer system. The question of how babies build their picture of the world is a challenge for researchers in cognitive psychology and computer science alike. On the one hand, babies cannot explain to researchers how they learn to understand their environment. On the other hand, computers, for all their sophistication, still need human help to classify and identify objects in order to learn about them. Many scientists believe that this is the crux of the matter: for computers to "see" the world as we do, they must learn to classify and identify objects in much the same way babies do.

Prof. Shimon Ullman and research students Daniel Harari and Nimrod Dorfman, of the Institute's Department of Computer Science and Applied Mathematics, set out to investigate babies' learning strategies and built a computer model based on the principles by which babies look at the world. Their research focused on hands. Within a few months, babies learn to distinguish their hands from other objects and other body parts, even though the hand is a very complex organ: it can appear in a variety of sizes and shapes and move in many different ways. The team created a hand-recognition algorithm to test whether a computer could learn to recognize hands on its own from videos, even when the hands appear in different shapes or are seen from different angles. The computer was never told directly "this is a hand"; instead, it had to work out the characteristics of hands by itself while watching the videos.

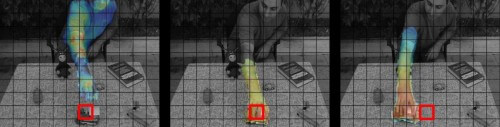

The algorithm was based on several insights about the stimuli that attract babies' attention. For example, babies can follow movement from the moment they open their eyes, and this movement may "point" them toward particular objects in their environment. The scientists asked whether certain types of movement might be more meaningful to infants than others, and whether these could provide the information needed to form a visual concept. A hand, for example, produces changes in the baby's field of view because it frequently moves objects. Eventually the baby learns to generalize, linking events in which one object moves another with the appearance of a hand. The scientists called such an event a "mover event".
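The article does not describe how the detector works internally. As a rough illustration only, the sketch below assumes the video has already been reduced to per-object position tracks (a large simplification; the actual system works on motion in the image itself) and flags a mover event when a moving object comes into contact with a stationary one that then starts to move. The function name `detect_mover_events` and the thresholds `contact_dist` and `still_eps` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class MoverEvent:
    frame: int   # frame at which the mover touches the moved object
    mover: str   # object that was already moving
    moved: str   # stationary object that it set in motion


def detect_mover_events(
    tracks: Dict[str, List[Point]],
    contact_dist: float = 1.0,  # hypothetical: how close counts as "contact"
    still_eps: float = 0.1,     # hypothetical: max displacement still counted as "stationary"
) -> List[MoverEvent]:
    """Toy mover-event detector over per-object position tracks of equal length."""
    n_frames = len(next(iter(tracks.values())))
    events = []
    for t in range(1, n_frames - 1):
        for a, track_a in tracks.items():
            for b, track_b in tracks.items():
                if a == b:
                    continue
                a_moving = math.dist(track_a[t - 1], track_a[t]) > still_eps
                b_was_still = math.dist(track_b[t - 1], track_b[t]) <= still_eps
                b_starts = math.dist(track_b[t], track_b[t + 1]) > still_eps
                touching = math.dist(track_a[t], track_b[t]) <= contact_dist
                # A moving object touches a still one, which then begins to move.
                if a_moving and b_was_still and b_starts and touching:
                    events.append(MoverEvent(t, a, b))
    return events


if __name__ == "__main__":
    tracks = {
        "hand": [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0)],
        "cup":  [(4, 0), (4, 0), (4, 0), (4, 0), (5, 0), (6, 0)],
    }
    print(detect_mover_events(tracks))
```

In this toy scene a hand slides toward a resting cup and pushes it; the only event reported is the hand moving the cup at frame 3, the moment of contact. Nothing in the detector knows what a hand is: the "hand" simply turns out to be the thing that keeps showing up as the mover.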

After designing an algorithm that learns to detect mover events, the scientists "showed" the computer a series of videos. Some showed mover events: hands changing the position of various objects. Others were filmed from a baby's point of view, showing the movement of the baby's own hands, and still others showed hand movements that did not involve moving objects, or general visual patterns such as people and various body parts. The results clearly showed that mover events are enough to teach the computer to recognize hands, and that this method is far more effective than all the others, including watching one's own hands.

This did not complete the model. Using mover events, the computer did learn to recognize hands, but it still had difficulty with hands in unfamiliar poses. Once again, the scientists looked for a solution in the principles that guide infant perception: babies can not only detect movement but also follow it, and they pay a great deal of attention to faces. When the scientists added a mechanism for tracking the detected hands, which let the algorithm learn the hands' possible poses, and used the face and body as reference points for where hands are likely to be, learning improved considerably.
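A minimal sketch of why tracking helps, under heavily simplified assumptions: each frame is a list of candidate regions, each with a position and a discrete appearance label standing in for a hand pose, and the "detector" is just the set of appearance labels it already accepts as a hand. Once a known hand is found, following it to the nearest candidate in the next frame adds the new pose to that set without any external label. The names `expand_by_tracking` and `max_jump` are hypothetical; this illustrates the principle and is not the authors' tracker.

```python
import math
from typing import List, Set, Tuple

Candidate = Tuple[Tuple[float, float], str]  # (position, appearance label)


def expand_by_tracking(
    frames: List[List[Candidate]],
    known: Set[str],
    max_jump: float = 2.0,  # hypothetical: largest frame-to-frame move we still follow
) -> Set[str]:
    """Grow the set of hand appearances by following an already-recognized hand."""
    known = set(known)
    track = None  # last position of the tracked hand, or None if not tracking
    for candidates in frames:
        if track is None:
            # Start tracking only from an appearance the detector already accepts.
            for pos, app in candidates:
                if app in known:
                    track = pos
                    break
            continue
        nearby = [(math.dist(pos, track), pos, app)
                  for pos, app in candidates
                  if math.dist(pos, track) <= max_jump]
        if not nearby:
            track = None  # lost the hand; wait for a recognizable one again
            continue
        _, pos, app = min(nearby, key=lambda c: c[0])
        track = pos
        known.add(app)  # a new pose, learned purely from continuity of motion
    return known
```

For example, starting from a detector that only knows an open hand, tracking it through frames where it closes into a fist and turns sideways adds both poses, while a distant, unrelated cup is never added.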

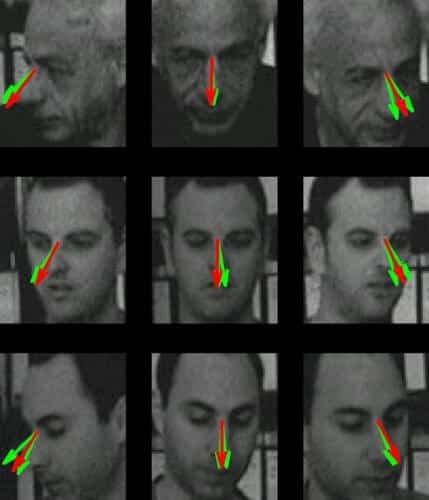

In the next phase of the study, the scientists examined another ability that infants acquire and computers find difficult: determining what another person is looking at. They built on the two insights they had used before, that mover events are essential and that babies are interested in faces, and added a third: when people hold an object in their hands, they look toward their hands. Based on these three principles, the scientists created another algorithm and tested the idea that babies learn to recognize gaze direction through a link between faces and mover events. Indeed, the computer learned to follow the direction of gaze with a degree of success comparable to that of an adult human.
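The gaze idea can be sketched in the same toy style. Assuming each mover event supplies a face descriptor (a hypothetical feature vector), the face position, and the event location, the direction from the face to the event serves as a free training label for that face appearance; a nearest-neighbour lookup then predicts the gaze direction for a new face. The function names and the nearest-neighbour choice are illustrative assumptions, not the study's method.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, ...]
Point = Tuple[float, float]


def angle_to(src: Point, dst: Point) -> float:
    """Direction (radians) from src to dst in image coordinates."""
    return math.atan2(dst[1] - src[1], dst[0] - src[0])


def collect_gaze_examples(
    samples: List[Tuple[Vec, Point, Point]],
) -> List[Tuple[Vec, float]]:
    """Each sample is (face_feature, face_pos, event_pos) recorded during a mover event.

    Assumption from the text: people look at the object their hands are handling,
    so the face-to-event direction is used as the gaze label for that face.
    """
    return [(feat, angle_to(face, event)) for feat, face, event in samples]


def predict_gaze(examples: List[Tuple[Vec, float]], feature: Vec) -> float:
    """Nearest-neighbour prediction of gaze direction for a new face descriptor."""
    _, direction = min(examples, key=lambda ex: math.dist(ex[0], feature))
    return direction
```

The appeal of this setup, as the paragraph above describes, is that no one labels where the person is looking: the mover event supplies the label automatically, and the face appearance is linked to it over many such events.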

The scientists believe that their models indicate that babies are born with pre-wired programs in their brains, such as preferences for certain types of movement or visual signals. They call these programs "early concepts": ready-made building blocks with which babies can begin to assemble and understand their picture of the world. For example, the basic early concept of mover events can develop into an understanding of concepts such as hands or gaze direction, and eventually lead to the perception of even more complex ideas, such as people, objects, and the relationships between them.

This research is part of a wider scientific effort known as the "Digital Baby Project". "The idea is to create computer models of very early cognitive processes," says Daniel Harari. Nimrod Dorfman adds: "On the one hand, such theories can shed light on human cognitive development. On the other hand, they will advance the development of smart technologies such as computer vision, machine learning, and robotics."
