We are used to recognizing faces without giving it a second thought, but the brain mechanism that makes this possible remains a mystery. In a new study published today in the scientific journal Nature Communications, scientists at the Weizmann Institute of Science shed new light on this human ability. The researchers, from Prof. Rafi Malach's laboratory in the Department of Neurobiology, found a distinct similarity between the way faces are coded in the human brain and the way they are coded in the artificial intelligence systems known as "deep neural networks".

When we see a face, dedicated neurons in the visual cortex that respond to faces but not to other objects are activated and "fire" their signals. But how does the activation of individual neurons ultimately translate into facial recognition? Prof. Malach and research student Shani Grossman proposed to examine this question by comparing the activity of the human brain with the activity of deep neural networks. These computerized systems, which have revolutionized the field of artificial intelligence, are trained on large datasets to perform a variety of tasks. In recent years they have improved so dramatically that they can now perform identification tasks, especially facial recognition, as well as or even better than humans.

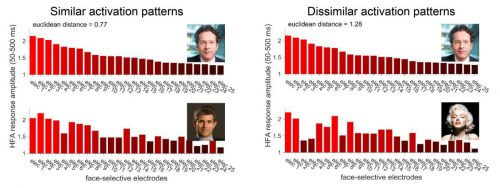

Grossman and Guy Gaziv, a research student in the Department of Computer Science and Applied Mathematics, analyzed data collected from a unique group of subjects: epilepsy patients who had electrodes implanted in their brains for medical diagnosis in the laboratory of Dr. Ashesh Mehta at the Feinstein Institute for Medical Research in New York. During their hospitalization, the patients volunteered to perform various research tasks. They were shown faces from different image sets, including portraits of celebrities, while their brain activity was recorded from a region responsible for the visual perception of faces. The scientists found that each image produced a unique pattern of nerve-cell activation, that is, different groups of nerve cells were activated at different intensities. Interestingly, some pairs of faces produced similar patterns of brain activity, in other words similar "signatures", while others produced very different patterns.
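The article does not describe the analysis in detail. One common way to quantify such "signatures" is to build a representational dissimilarity matrix (RDM): for every pair of images, measure how different the two activation patterns are, here as 1 minus their Pearson correlation. The minimal sketch below assumes that choice of measure and uses made-up toy numbers; the helper names are hypothetical and this is not the study's code.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length sequences.

    Assumes neither sequence is constant (that would divide by zero).
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def dissimilarity_matrix(patterns):
    """Hypothetical helper: n x n matrix of 1 - Pearson r between patterns.

    patterns[i][k] is the response of recording site (or artificial
    neuron) k to image i. 0 means perfectly correlated signatures,
    2 means perfectly anti-correlated ones.
    """
    n = len(patterns)
    rdm = [[0.0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        rdm[i][j] = rdm[j][i] = 1.0 - pearson(patterns[i], patterns[j])
    return rdm


# Toy data, not from the study: 3 images x 4 recording sites.
patterns = [
    [1.0, 2.0, 3.0, 4.0],  # face A
    [1.1, 2.1, 2.9, 4.2],  # face B: signature close to A's
    [4.0, 3.0, 2.0, 1.0],  # face C: signature opposite to A's
]
rdm = dissimilarity_matrix(patterns)
for row in rdm:
    print([round(d, 3) for d in row])
```

In this toy example, faces A and B have a small dissimilarity (similar signatures), while A and C sit at the maximum value of 2.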

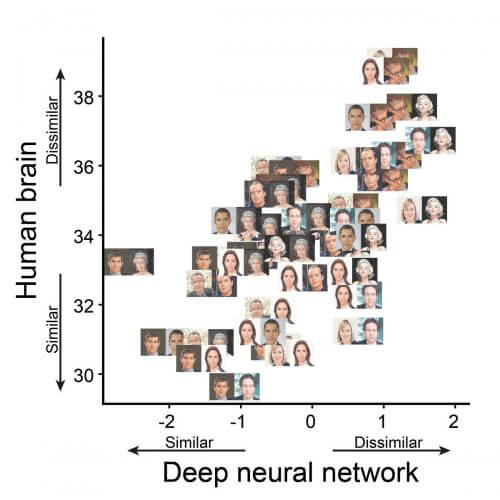

The scientists wanted to know whether these signature patterns play an important role in our ability to recognize faces. To find out, they compared the way humans recognize faces with the way a deep neural network, whose recognition ability is similar to that of humans, does so. This network was inspired by the human visual system and contains artificial components that correspond to nerve cells. To recognize a face, the artificial neurons, which are organized hierarchically in more than 20 "layers", process facial features of increasing complexity: from the simplest, such as basic lines and shapes, through more complex ones, such as parts of the eyes or other facial parts, to the most abstract, such as the identity of a particular person. The scientists hypothesized that if the coding patterns they found in the brain are indeed essential for face recognition, similar "signatures" might also appear in the artificial system. To test this, they presented the network with the same images shown to the volunteers and checked whether the images produced activation patterns with a structure similar to that found in the volunteers' brains.
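This kind of comparison can be sketched with representational similarity analysis (RSA), a standard technique for comparing two systems' "signatures"; the article does not state that this exact procedure was used. Under that assumption, each system is summarized by a dissimilarity matrix holding, for every pair of images, how different the two evoked activation patterns are. The off-diagonal upper triangle of each matrix is flattened and the brain's matrix is compared with each network layer's matrix using a Spearman rank correlation. The layer names and all numbers below are hypothetical toy values.

```python
import math
from itertools import combinations


def upper_triangle(rdm):
    """Off-diagonal upper-triangle entries of a square matrix, row by row."""
    return [rdm[i][j] for i, j in combinations(range(len(rdm)), 2)]


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; assumes neither sequence is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation = Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def compare_to_layers(brain_rdm, layer_rdms):
    """Hypothetical helper: Spearman rho between the brain RDM and each layer's RDM."""
    brain = upper_triangle(brain_rdm)
    return {name: spearman(brain, upper_triangle(rdm))
            for name, rdm in layer_rdms.items()}


# Toy dissimilarity matrices over 3 images (made-up values, not study data).
brain_rdm = [[0.0, 0.2, 0.9],
             [0.2, 0.0, 0.8],
             [0.9, 0.8, 0.0]]
layer_rdms = {
    "early":  [[0.0, 0.5, 0.1], [0.5, 0.0, 0.4], [0.1, 0.4, 0.0]],
    "middle": [[0.0, 0.1, 0.7], [0.1, 0.0, 0.6], [0.7, 0.6, 0.0]],
}
scores = compare_to_layers(brain_rdm, layer_rdms)
print(scores)
print("best-matching layer:", max(scores, key=scores.get))
```

Here the toy "middle" layer orders the image pairs exactly as the brain data do, while the "early" layer reverses that order. A rank correlation is used because it only asks whether the two systems agree on which pairs of faces are more or less alike, not whether their raw dissimilarity values share a scale. With many images, as in a real experiment, significance is typically assessed with a permutation test.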

The scientists found that there is indeed a distinct similarity between the face-identification systems of humans and of the artificial network. The similarity, however, was limited to the network's middle layers, those that encode the appearance of the face itself rather than the more abstract identity of its owner. "You can learn a lot from the fact that two such different systems, the biological one and the artificial one, have developed similar characteristics," says Prof. Malach. "I would call it convergent evolution, just as the man-made wings of airplanes resemble the wings of insects, birds and even mammals. This convergence points to the importance of unique coding patterns in facial recognition."

"Our findings support the theory that unique activation patterns of neurons in response to different faces, and the relationships between these patterns, play a key role in the way the brain recognizes faces," says Grossman. "On the one hand, these findings may advance our understanding of how face perception and recognition are coded in the human brain; on the other, they may help improve the performance of artificial networks by adapting them to the brain's response patterns."

Michal Harel from the Department of Neurobiology, Prof. Michal Irani from the Department of Computer Science and Applied Mathematics and members of Dr. Mehta's group at the Feinstein Institute for Medical Research in the United States also participated in the study.
