The dataset, which includes 11 million images and their contexts in 108 languages, is intended for training artificial intelligence models. It is available to the public, and Google will also hold a WIT-based competition together with the Wikimedia Foundation on the Kaggle website.

Google celebrates its 23rd anniversary today. Google AI, one of the company's youngest divisions, has announced WIT: a dataset linking images, text and context from Wikipedia, open to the general public for training artificial intelligence models.

Krishna Srinivasan, Software Engineer, and Karthik Raman, Research Scientist, both of Google Research, published the details of WIT, a huge collection of images from Wikipedia matched with text in many languages, intended for artificial intelligence training.

On the Google AI blog, the two write: "Multimodal visio-linguistic models rely on rich datasets to help them learn to model the relationship between images and texts."

"Traditionally, these datasets were created by manually captioning images, or by crawling the web and extracting the alt text as image captions. While the former approach yields higher-quality data, the intensive manual annotation process limits the amount of data that can be created. On the other hand, the automated extraction approach can lead to larger datasets, but these require either heuristics and careful filtering to ensure data quality or scaled-up models to achieve strong performance. Another shortcoming of existing datasets is the lack of coverage in languages other than English. This naturally led us to ask: Is it possible to overcome these limitations and create a high-quality, large, multilingual dataset with a variety of content?"

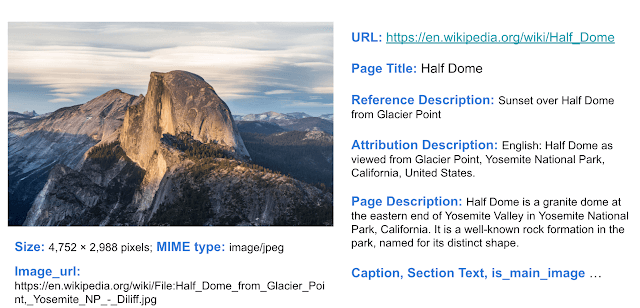

"Today we present the Wikipedia-based Image Text (WIT) dataset, created by extracting multiple text selections associated with an image from Wikipedia articles and Wikimedia image links. We applied rigorous filtering to retain only high-quality image-text sets. As detailed in 'WIT: Wikipedia-based Image Text Dataset for Multimodal Multilingual Machine Learning,' presented at SIGIR '21, the result was a curated set of 37.5 million entity-rich image-text examples, with 11.5 million unique images, across 108 languages. The WIT dataset is available for download and use under a Creative Commons license."

The unique advantages of the WIT dataset are:

- Size: WIT is the largest multimodal dataset of text-image examples publicly available.

- Multilingualism: With 108 languages, WIT has 10x or more languages than any other dataset.

- Contextual information: Unlike typical multimodal datasets, which have only one caption per image, WIT includes page-level and section-level contextual information.

- Real-world entities: Wikipedia, being a broad knowledge base, is rich in real-world entities represented in WIT.

- Challenging test set: In our recent work accepted at EMNLP, all state-of-the-art models exhibited significantly lower performance on WIT than on traditional test sets (e.g., a drop of about 30 points in recall).

A high-quality training set and a challenging evaluation benchmark

The broad coverage of diverse concepts in Wikipedia means that WIT's evaluation sets serve as a challenging benchmark, even for state-of-the-art models. We found that the mean recall scores on traditional datasets were around 80 percent, while the WIT test set gave results of around 40 percent for the well-resourced languages and around 30 percent for the under-resourced languages. We hope this in turn can help researchers build stronger and more robust models.
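The recall figures above can be illustrated with a minimal sketch of how recall at a cutoff k is typically computed for a retrieval task. This is an assumption about the general metric, not the researchers' evaluation code: the function names, the cutoffs (1, 3, 5) and the toy identifiers are all invented for illustration.

```python
from typing import Dict, List, Sequence


def recall_at_k(ranked: Dict[str, List[str]], relevant: Dict[str, str], k: int) -> float:
    """Fraction of queries whose correct item appears among the top-k results."""
    if not ranked:
        raise ValueError("no queries to score")
    hits = sum(1 for query, candidates in ranked.items()
               if relevant[query] in candidates[:k])
    return hits / len(ranked)


def mean_recall(ranked: Dict[str, List[str]], relevant: Dict[str, str],
                ks: Sequence[int] = (1, 3, 5)) -> float:
    """Average of recall@k over several cutoffs (the cutoffs are an assumption)."""
    return sum(recall_at_k(ranked, relevant, k) for k in ks) / len(ks)


# Toy data, invented for illustration: each image maps to a ranked caption list.
ranked = {
    "img_a": ["cap_2", "cap_1", "cap_3"],
    "img_b": ["cap_2", "cap_3", "cap_1"],
    "img_c": ["cap_1", "cap_2", "cap_3"],
}
# The single correct caption for each image.
relevant = {"img_a": "cap_1", "img_b": "cap_2", "img_c": "cap_3"}

print(recall_at_k(ranked, relevant, k=1))  # only img_b is right at rank 1
print(recall_at_k(ranked, relevant, k=3))  # every correct caption is in the top 3
```

In this toy case only one of three images has its correct caption ranked first, so recall@1 is one third, while recall@3 is 1.0. A benchmark on which models score around 40 percent is one where the correct caption is often missing from the top results.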

WIT dataset and competition with Wikimedia and Kaggle

In addition, we are pleased to announce that we are collaborating with Wikimedia Research and some external collaborators to organize a competition with the WIT test set. We are hosting this competition on Kaggle. The competition is an image-text retrieval task: given a set of images and text captions, the task is to retrieve the appropriate captions for each image.

To enable research in this area, Wikipedia has made available images at a resolution of 300 pixels, along with ResNet-50-based image embeddings, for most of the training and test dataset. Kaggle will host all the image data in addition to the WIT dataset itself. Furthermore, competitors will have access to a Kaggle discussion forum to share code and collaborate. This allows anyone interested in multimodal modeling to get started and run experiments easily. We are excited and looking forward to what the WIT dataset and the Wikipedia images will produce on the Kaggle platform.

"For any questions, please contact wit-dataset@google.com. We'd love to hear how you use the WIT dataset," the researchers conclude.
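The retrieval task described above can be sketched as a nearest-neighbor search over embeddings. This is a minimal illustration, not the competition's baseline: it assumes image and caption vectors already live in a shared space (which ResNet-50 image embeddings alone do not provide, so a matching text encoder would have to be trained), and all names and vectors below are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_captions(image_vec: Sequence[float],
                  caption_vecs: Dict[str, Sequence[float]]) -> List[str]:
    """Return caption ids ordered from most to least similar to the image."""
    return sorted(caption_vecs,
                  key=lambda cid: cosine(image_vec, caption_vecs[cid]),
                  reverse=True)


# Toy 2-dimensional vectors, invented for illustration only.
image_vec = [1.0, 0.0]
caption_vecs = {
    "cap_1": [0.9, 0.1],
    "cap_2": [0.0, 1.0],
    "cap_3": [0.5, 0.5],
}

print(rank_captions(image_vec, caption_vecs))
```

Here `cap_1` points in nearly the same direction as the image vector and is ranked first, while `cap_2` is orthogonal to it and ranked last. At the scale of the real dataset, an approximate nearest-neighbor index would replace this exhaustive sort.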

Link to the dataset on GitHub
