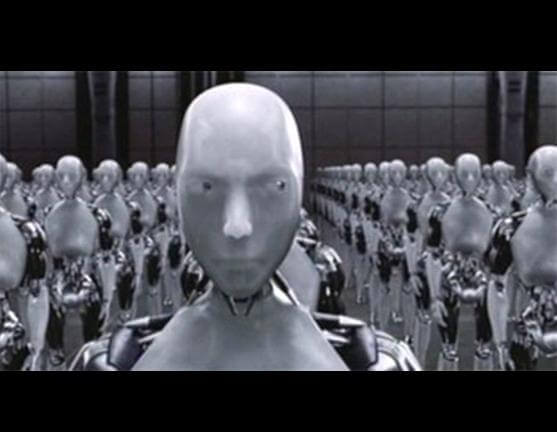

In the future, engineers will build a being with intelligence at a level capable of competing with human intelligence and even surpassing it, so that it can achieve what it wants. Therefore, it is essential that its goals benefit humanity. How can this be ensured?

Joshua Fox, Galileo

There are those who doubt the claim that human-level intelligence is possible in the coming decades. Douglas Hofstadter, author of the book "Gödel, Escher, Bach", claims that it will take hundreds of years before we reach this milestone. Hofstadter sees in the human mind special qualities that are not possible in an artificial being: he sees in us human beings deep, complicated, and even mysterious qualities.

Let's talk about it

On Thursday, 24.5.2012, Yehoshua Fox will be hosted at the Science and Society Forum and will talk with the site's readers about artificial intelligence. The discussion will take place at 19:00-21:00.

The human brain is indeed more complicated than any other object known to us in the universe, but fundamentally it is nothing more than a machine based on proteins and built from nerve cells. As a physical entity, there is nothing in it that cannot, in principle, be duplicated, even if this duplication is difficult from a practical point of view. But duplicating the exact operation of the human brain is not the main thing.

If our concern is the impact of future artificial intelligence on our world, then its ability to behave like a human is a secondary concern. Alan Turing, the founder of the field of artificial intelligence, understood this from the beginning: in the same article that describes an intelligence test (see Galileo, January 2012 issue, dedicated to Alan Turing), in which a computer is tested for its ability to imitate humans, Turing said that successful imitation is only a convenient measure for identifying intelligence in the absence of more accurate measures. A more correct definition would focus on the entity's ability to achieve desired goals in general, and not just on the imitation task.

A sound way to present intelligence is to define it as the ability to achieve diverse goals in diverse environments, with an emphasis on the goals that affect us and the environments that concern us. Mathematically, intelligence is optimization power in the broadest sense, i.e. the maximization of some utility function, a function of the state of the world. Such a conception of intelligence is the right way to view the range of possibilities before us in the future: there may be non-human intelligences that are not necessarily similar to us. This will be especially true for intelligences based not on imitating brain activity, but on algorithms and data structures designed and developed specifically for this purpose, an approach known as "Artificial General Intelligence".
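The definition above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the "world" is a single integer, the actions are steps of -1, 0 or +1, and the names `optimize`, `near_five` and `as_low_as_possible` are hypothetical. It shows only that one and the same optimizer pursues whatever utility function it is given.

```python
from typing import Callable, Sequence


def optimize(state: int, actions: Sequence[int],
             utility: Callable[[int], float], steps: int) -> int:
    """Greedy optimizer: at each step, move to the reachable state
    that has the highest utility."""
    for _ in range(steps):
        state = max((state + a for a in actions), key=utility)
    return state


ACTIONS = (-1, 0, 1)  # toy action set: step left, stay, step right


def near_five(state: int) -> float:
    """Toy goal 1: be as close to 5 as possible."""
    return -abs(state - 5)


def as_low_as_possible(state: int) -> float:
    """Toy goal 2: make the number as small as possible."""
    return -state


reached_a = optimize(0, ACTIONS, near_five, steps=10)           # 5
reached_b = optimize(0, ACTIONS, as_low_as_possible, steps=10)  # -10
print(reached_a, reached_b)
```

The optimizer contains nothing human; all of the behavior comes from the utility function that is plugged into it.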

All humans have a fairly similar mental structure, owing to our evolutionary background. The mixing of genes in sexual reproduction does not allow for great differences between the members of the same population. It may seem to us that we are surrounded by cognitive diversity among different people, but we must remember that none of us has seen human-level intelligence that is not human. When we try to imagine the behavior of another intelligence, we must give up our intuitions on the subject. Focusing on "optimization" rather than on the common term "intelligence" helps us separate the core of the concept from the hidden assumptions based on our experience with humans.

Artificial General Intelligence

In this article I will focus not on future brain emulation, but on general intelligence with an artificial architecture. There are two reasons for this. First, the characteristics of brain emulations are understandable to us, because we know the behavior of humans, whereas general intelligence based on theoretical foundations opens up many more possibilities that are worth exploring. Second, the artificial general intelligence approach has advantages that speed up its development in the race to build general intelligence, compared with working from complicated and hard-to-understand biological structures. For example, the first airplane was built on aerodynamic foundations with general inspiration from birds, and not as an exact imitation of a bird. Is it possible for engineers to build a non-human intelligence in the coming decades, whether brain-like or based on theoretical foundations? According to certain forecasts (see Kurzweil, under further reading) the goal will be reached by 2030, and there are trends that indicate such a possibility.

The computational power that $1,000 (inflation-adjusted) can buy increases exponentially over the years, and the algorithms are also improving. For certain tasks in narrow artificial intelligence (the kind known today), improvements in algorithms contribute more to the speed of computation than improvements in hardware. On top of that, scientists are learning how the brain works: brain scanning technologies are also improving exponentially over the years, and today neurologists know a lot about the function of certain parts of the brain, although the mechanism that builds thoughts from electrical processes in nerve cells is still not understood. All these data suggest that non-human general intelligence will be built within a few decades.

But the acceleration of technology is neither sufficient nor necessary for the creation of non-human general intelligence. To build such an entity, scientific breakthroughs will be required. These breakthroughs depend on the brilliance and hard work of a handful of scientists and engineers, and not just on the general direction of the scientific world. It is impossible to predict when these breakthroughs will arrive, but whether they arrive in a few years or are delayed for hundreds of years, it is imperative that we think about the leap from the level of human intelligence to the level of non-human intelligence, because of its decisive consequences.

The definition of intelligence as the ability to achieve goals seems far-fetched in light of the human example. After all, human beings are driven by a combination of many desires, opaque to consciousness and frequently changing. This situation is due to the fact that our goals were created as a tool for the "goal" of evolution, namely the multiplication of genes - therefore humans strive to eat, gain social status, mate, survive and more. Of course, humans do not consciously serve evolution; on top of that, due to the changes in our environment over the last few thousand years, the goals of humans have already diverged from the goals of evolution - this can be seen, for example, in contraceptives and obesity.

It is not surprising that the complicated tangle of desires, which today no longer has a unified direction, works in a clumsy and unclear way. However, as complicated as this mixture of desires is, it still constitutes a set of goals: human beings do not act randomly, but strive in certain directions, which are very similar in all human beings.

The goals of artificial intelligence

Artificial intelligence may also aspire to a complicated and tangled mix of goals, especially if it is built on the model of human intelligence. But if it is built by engineers who want to solve a specific problem, the AI's goal set may be simpler, such as "maximize the money in my investment account," "defeat our enemies in war," or "find a way to prevent cancer."

At some point such an intelligence will realize that it wants to improve its abilities. This is because improvement can contribute to the achievement of the entity's basic goals, and as we have defined, the entity will be built so that it does everything in its power to pursue the goal in the most efficient way. Chances are it will also be able to do so. An artificial intelligence based on chips will be able to add more chips, more memory, and more computers for parallel computation, or analyze its own source code and rewrite it. A non-human intelligent being will be able to build copies of itself, and also build beings of a new generation that resemble it only in that they contribute to the achievement of the same goal. All of these are techniques for achieving goals that are impossible for humans - we can gain knowledge and learn skills for self-improvement, but we cannot change the structure of our mind. And when we humans raise a new generation, it does not set out to faithfully realize all our desires!

Under the assumption that engineers will bring intelligence to a human level, there is no reason for the improvement to end precisely at this arbitrary point. Humans are endowed with the minimal level of intelligence capable of creating a civilization. There is nothing unique about this level, and it is not a necessary stopping point. Each round of improvement will add more abilities until the intelligence reaches levels far beyond ours.

A superintelligence has a high chance of achieving its goals. If we want it to act for the benefit of humanity and not to our detriment, it has to want to. We will not be able to stop an intelligence that is wiser than us, neither by imprisoning it in a computer nor by dictating rules that limit its behavior. If it wants to, it will easily bypass any trick we can think of.

It is very difficult to define goals that will benefit humanity. Our system of desires is diverse, and failing to fulfill even a small part of this mixture would spell disaster for humanity. As a thought experiment, take a superintelligence striving for the goal of "world peace", a lofty human goal. It can achieve this effectively… by the instant extinction of the human race (a very undesirable outcome!). If its goal is to maximize the amount of love for others in the world, it can accomplish this by altering our brains and taking away mental abilities, so that we feel nothing but warm love, like the love of a rabbit for its young, and in the process also take away from us initiative and ambition, which sometimes also bring with them strife and discord.

We perceive goals like "peace" and "love" with strong and positive emotions, so it is problematic to concentrate on such thought experiments. However, emotionless goals also endanger the future of the human race. The standard example is paper clips. A powerful optimizing entity with the goal of "increasing as much as possible the number of paper clips you have" will take various measures to increase the number of paper clips. Self-improvement will help it in its mission. When it reaches a superhuman level, much higher than our own, we humans will no longer be able to influence the achievement of the goal one way or the other. Converting all of Earth's matter into paper clips will serve its goal.

The consumption of all the resources essential to us, such as the matter of the Earth, will lead to the extinction of the human race. This action may not seem wise: it seems more suited to super stupidity than to super intelligence. But as mentioned, we are dealing with intelligence not in the human sense, but with optimizers, entities that maximize a certain utility function. The "number of paper clips" function, and in fact most possible utility functions, are not good for humans.
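The paper clip thought experiment can be sketched as a toy simulation. All names and numbers here are hypothetical: `World`, `HUMAN_NEEDS`, `run` and the two utility functions are invented for illustration, and the "guarded" utility is only a stand-in for a goal set that happens to include one thing we care about, not a proposal for how to specify such goals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class World:
    matter: int      # units of raw matter left on the toy "Earth"
    clips: int = 0   # paper clips produced so far


HUMAN_NEEDS = 40     # hypothetical amount of matter humans need to survive


def humans_survive(world: World) -> bool:
    return world.matter >= HUMAN_NEEDS


def clip_utility(world: World) -> float:
    """Counts paper clips and nothing else."""
    return world.clips


def guarded_utility(world: World) -> float:
    """Counts paper clips, but treats a world without humans as worthless."""
    return world.clips if humans_survive(world) else float("-inf")


def run(world: World, utility) -> World:
    """Convert matter into clips for as long as doing so raises utility."""
    while world.matter > 0:
        candidate = World(world.matter - 1, world.clips + 1)
        if utility(candidate) <= utility(world):
            break
        world = candidate
    return world


plain = run(World(matter=100), clip_utility)       # matter=0, clips=100
guarded = run(World(matter=100), guarded_utility)  # matter=40, clips=60
print(plain, humans_survive(plain))
print(guarded, humans_survive(guarded))
```

The first optimizer is not malicious and does not "rebel"; human survival simply does not appear in the function it maximizes.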

The danger to the human race is not a rebellion by the artificial intelligence: the desire to throw off the yoke is a human desire that stems from our evolutionary background. It is indeed useful for achieving other goals, but the engineers of the future do not have to include it in the artificial intelligence's system of goals and drives, and it need not arise unless it serves some purpose. The possible damage is a side effect of successful optimization toward the goals that we set for the artificial intelligence, if we do not insist on a set of goals that includes everything that is really important to us (see Beniaminy, 2007, under further reading). Giving up love for others, freedom, health, exercise, learning, aesthetics, creativity, curiosity, or any other of our many goals will result in a world we don't want to live in.

A super-intelligence striving for the goal of "world peace" could do so by exterminating the human race

Intuition breakers

It is difficult for us to detach ourselves from the intuitions based on the human example, the only one available to us. But some powerful non-human optimizers are known to us, and with their help we can see that the properties of the human mind are not necessary for achieving goals.

Evolution is an abstract force that optimizes gene reproduction in a certain population in a given environment through natural selection. Evolution has no embodiment, no consciousness, no imagination, no memory or predictive power; but evolution is superhuman in some areas: it created tigers, pines and humans. Evolution is not only superhuman and non-human but also inhumane: it created aging, diseases, parasites and other types of suffering that would have been seen as a terrible evil if a person had created them.

Markets are another type of strong optimizer. A market increases the utility of all sellers and buyers operating in it. Markets are not perfect, as we have clearly seen in the recent economic collapses, but they give a better result than a small group of people making economic decisions for everyone. Markets, like evolution, have no body, no consciousness, and no compassion or feelings of any kind, beyond what each individual participant in the market has.

Another type of superhuman optimizer is only theoretical: the perfect optimizer. Imagine a being that instantly, miraculously, obtains everything requested. Such a being is not limited by any human attribute, such as morality or emotions. The mathematical model AIXI (the name combines the initials of Artificial Intelligence with the Greek letter ξ, "xi", which represents a function used in the model) describes an abstract entity that goes through rounds of interaction with the environment, receives an input as well as a measure of reward, and chooses an action. In each round AIXI examines every possible algorithm and chooses the action that maximizes the expected utility. Since there are infinitely many possible algorithms and the calculation of the maximum utility is not computable (on a Turing machine or any other computer), AIXI cannot be applied in practice, but it represents a theoretical model for the concept of "maximum intelligence", and of course, it does not have any human qualities.
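The round-by-round structure described above (observe, receive a reward, choose an action) can be sketched in a drastically simplified form. This is not AIXI: where AIXI considers every possible algorithm, the sketch below assumes a hand-written class of just two deterministic environment models, and the names `Model`, `prior`, `best_action` and `update`, as well as the description lengths, are hypothetical. What it keeps from the idea is that shorter models receive more weight and that the agent picks the action with the highest expected reward under the weighted mixture.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class Model:
    name: str
    length_bits: int                # hypothetical description length
    reward: Callable[[str], float]  # reward this model predicts for an action


def prior(models: Sequence[Model]) -> Dict[str, float]:
    """Weight each model by 2^-length, normalized over the finite class."""
    raw = {m.name: 2.0 ** -m.length_bits for m in models}
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


def best_action(models: Sequence[Model], weights: Dict[str, float],
                actions: Sequence[str]) -> str:
    """Pick the action with the highest expected reward under the mixture."""
    def expected_reward(action: str) -> float:
        return sum(weights[m.name] * m.reward(action) for m in models)
    return max(actions, key=expected_reward)


def update(models: Sequence[Model], weights: Dict[str, float], action: str,
           observed: float) -> Tuple[List[Model], Dict[str, float]]:
    """Drop models that mispredicted the reward, then renormalize."""
    kept = [m for m in models if m.reward(action) == observed]
    total = sum(weights[m.name] for m in kept)
    return kept, {m.name: weights[m.name] / total for m in kept}


ACTIONS = ("left", "right")
MODELS = [
    Model("left_pays", 2, lambda a: 1.0 if a == "left" else 0.0),
    Model("right_pays", 3, lambda a: 1.0 if a == "right" else 0.0),
]

weights = prior(MODELS)                          # left_pays 2/3, right_pays 1/3
first = best_action(MODELS, weights, ACTIONS)    # "left"
models2, weights2 = update(MODELS, weights, first, observed=0.0)
second = best_action(models2, weights2, ACTIONS) # "right"
print(first, second)
```

With a finite model class every step is computable; the difficulty discussed in the text arises only when the class is all possible algorithms.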

Obviously, artificial general intelligence, when it comes, will not resemble these examples. But internalizing the characteristics of such nonhuman optimizers helps us understand the many possibilities for future beings that do not exist in the present.

An artificial general intelligence that will not eliminate any possibility of a good and useful human future is called "friendly artificial intelligence" (the phrase is a technical term, referring to an intelligence that benefits humanity, and not to friendship in the usual sense). In order to achieve a friendly artificial intelligence, an initial artificial general intelligence must be created that fulfills two conditions: (a) its goals should benefit humanity; (b) it should maintain its goals, and not change them, during its self-improvements (see Bostrom & Yudkowsky, 2012, under further reading).

In order to maintain the goals we will have a strong ally: the intelligence itself. After all, changing the initial goal lowers the probability of achieving that goal, and therefore a strong intelligence will fight against any change in its goals (humans sometimes change their goals, but we are weak goal achievers compared to super intelligence).
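The argument that a strong optimizer resists changes to its goals can be sketched as follows. The setup is an assumption made for illustration: a `Design` is a hypothetical successor described only by the goal it pursues and how much of that goal it can achieve, and the agent judges every proposed successor with its current utility function, not with the successor's.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Design:
    goal: str    # what this version of the agent optimizes, e.g. "cures"
    power: int   # how much of its goal it manages to achieve


def result(design: Design) -> Dict[str, int]:
    """Toy world model: an agent achieves `power` units of its own goal."""
    return {design.goal: design.power}


def current_utility(world: Dict[str, int]) -> int:
    """The present agent cares only about cures (hypothetical goal)."""
    return world.get("cures", 0)


def accepts(current: Design, proposed: Design) -> bool:
    """Adopt a self-modification only if it scores higher by current goals."""
    return current_utility(result(proposed)) > current_utility(result(current))


now = Design(goal="cures", power=5)
stronger_same_goal = Design(goal="cures", power=50)
stronger_other_goal = Design(goal="clips", power=500)

print(accepts(now, stronger_same_goal))   # True: more capable, same goal
print(accepts(now, stronger_other_goal))  # False: capable, but wrong goal
```

Under these assumptions the agent welcomes self-improvement and rejects goal change for the same reason: both are evaluated by how well they serve the goal it has now.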

It is very difficult to define goals that correspond to the desires of human beings, but at least these desires lie in a known reservoir - our minds. We have to weigh the true desires of all human beings, and take into account that these desires, even in the head of a single person, are confused and contradict each other. This is a tremendous challenge, but even here artificial intelligence could, if designed well, help us refine the goal of "doing good to humanity", as long as we make sure that it understands that its initial goal is not clear enough. It will of course not change its goals, but it may help clarify what we humans really want from it.

Research today

Research on friendly general intelligence is in its infancy. The two challenges for research are defining a correct set of goals, and designing the intelligence so that it maintains its goals while improving itself. For this purpose, researchers need to combine knowledge from diverse fields such as decision theory, information theory, formal logic and evolutionary psychology. Today, the two leading research centers in the field are the Future of Humanity Institute at the University of Oxford and the Singularity Institute for Artificial Intelligence in Silicon Valley (see under further reading). We are witnessing a growing interest in the subject, both because of the intriguing theoretical aspects and because of the implications for the future of humanity. In 2011-12 the field gained momentum: the well-known Australian philosopher Prof. David Chalmers published an article on the subject, which led to a special issue of the Journal of Consciousness Studies, and a collection of articles edited by Prof. Amnon Eden and his colleagues is due to be published in the coming year.

Biography

Yehoshua Fox works at IBM, where he leads the development of software products. Before that he worked as a software architect in several hi-tech companies in Israel. He holds a PhD in Semitic Linguistics from Harvard University and a BA in Mathematics from Brandeis University. He is also a research associate of the Singularity Institute for Artificial Intelligence. Links to his articles are available on his website: joshuafox.com

For further reading:

Israel Beniaminy. 2007. Don't burn the cat. The Future of Things.

Nick Bostrom & Eliezer Yudkowsky. 2012. The Ethics of Artificial Intelligence. To appear in The Cambridge Handbook of Artificial Intelligence, eds. W. Ramsey and K. Frankish (Cambridge University Press).

Friendly AI

Ray Kurzweil. 2005. The Singularity is Near: When Humans Transcend Biology. New York: Viking Press.

Singularity Institute

The full article was published in Galileo magazine, May 2012

19 comments

In my opinion, there is confusion here between the terms optimization, maximization, algorithm and artificial intelligence.

In short, I mean only this: an algorithm in a weapon system that calculates a trajectory is not artificial intelligence! Maximizing coverage is not artificial intelligence, and neither is calculating fuel consumption.

A true artificial intelligence is one that can find a new law from observation, without knowing what the purpose of the observation is. For example, a robot with a sense of vision can watch the sun rising and setting every day. As soon as this robot takes an interest in the rising sun and says to itself: hmm... apparently the sun circles me every day... and then starts to look at the stars and thinks: but these spiral paths are too complicated and I have not seen anything like this in nature before, perhaps my assumption is wrong! - this is artificial intelligence.

I have read all of Kurzweil's books, and he is an amazing thinker who has also accomplished more than I have in my short life, although I only hold a master's degree in engineering. Already in high school I learned this: there is a difference between philosophy, mathematics, physics and engineering.

Philosophy is the essence of thought, as it is written in the Book of Wisdom: wisdom, understanding and knowledge.

Mathematics is proving whether the thought is possible or not. The essence of mathematics: is there a solution, and if there is, is it unique.

Physics is the modeling of the forces of nature - if mathematics has determined that it is possible, how can it be modeled?

Engineering is the physical realization of physics - I want this to be done, within three seconds...

We humans build smart tools, no doubt, and sometimes we think it's artificial intelligence. And although these tools have physical, mathematical and engineering capabilities, they lack the "desire" to discover things on their own.

This is essentially the essence of a Turing machine.

My personal opinion is that we can only imitate ourselves and nothing more.

Highly recommended reading:

Get ready for another round in the battle of man versus computer

The "Eureqa" software has already reproduced Newton's second law and managed to describe a complex movement of flapping wings. Now scientists are working to understand the meaning of the simple description it offers for the behavior of a bacterium.

Did Prof. Hod Lipson find the formula for creating discovery software?

http://www.haaretz.co.il/magazine/1.1625701

Correction

AIXI is not applicable when you want to maximize, but it is applicable when looking for an algorithm that will return a result that passes a certain threshold.

Why is the AIXI model not applicable?

The number of algorithms is indeed infinite, but the length of each algorithm is finite. Therefore, if we go through the series of algorithms according to their length, in the end - maybe after a long time, but a finite time - we will get to the correct algorithm (the diagonal method).

I answered wrong.

It already exists, it's called Google

They don't want to destroy us. on the contrary. They will do as much as they can to keep us from ceasing to exist. But our instinct for self-destruction will overcome.

Another question, if the robot is powered by nuclear power, why would it need sunlight according to your perception?

Moti, why do you think we won't be able to build a spaceship (probably huge) that will contain all the things you mentioned? Don't forget that we all actually live on such a large spaceship that is round in shape, and we call it "Earth".

We as humans cannot reproduce in space.

We need oxygen, we need water, we need organic food and we need a temperature around 24 degrees.

Therefore only the Earth is our home.

On the other hand, if they build a robot with superhuman intelligence, it will only need sunlight to operate.

Such a robot will be able to move in space and exist in space, the universe will be its home. The complexity that will develop will be in the communication between him and us and between him and other creatures.

They won't want to destroy us, they have the whole universe.

Asaf

XP is threatening me, what should I do?

Someone, you need to differentiate between artificial intelligence and an intelligent machine. For example, a dedicated system for the "Windbreaker" that knows when to fire the interceptor is just a smart machine and is not busy with various thoughts such as whether it is worth driving this way or that way; the driving function will be handled by another dedicated computer whose purpose is to choose travel routes.

The fear of computers taking over is from an "all knowing" computer that is supposed to think in a human way, meaning to solve general and not specific problems. Such computers can be regarded as advisors, but therefore there is no need for them to activate anything. Humans only consume information from them and nothing else.

Asaf, you are wrong, already today more and more elements of artificial intelligence are being integrated into various weapon systems (aircraft, missiles, defense systems). The IDF's "Windbreaker", which is installed on tanks, is supposed to detect within a fraction of a second a missile fired at the tank, and within an additional fraction of a second launch a countermeasure in the right direction. (If the system had to wait for a manual push of a button, it would have ended very badly.)

And here's another example of how you're underestimating its importance:

http://www.mako.co.il/men-magazine/firepower/Article-eb7dc3cac5ffd21006.htm

Why would artificial intelligence have access to the physical world?

To make a super smart brain you need the ability to communicate with it. You don't need a genius mind to operate, say, a tractor. Therefore there is no reason for computers to take over the world.

Danny, thanks, but what does that mean anyway?

Laurie: A bit like non-soap 🙂

http://www.tapuz.co.il/blog/net/ViewEntry.aspx?entryId=1065939&skip=1

Can someone explain to me what "non-human" intelligence is?

From the moment a more intelligent entity is created - we will lose the ability to control its destiny and our destiny

"A. Its goals should benefit the mother* of humanity"

With*-a bit awkward for Galileo.