The laws of physics may well prevent the human brain from evolving into an ever more powerful thinking machine.

Santiago Ramón y Cajal, the Spanish Nobel Prize-winning biologist who mapped the neural anatomy of insects in the decades before the First World War, likened the tiny circuits of the nerve cells that handle insects' visual processing to an exquisitely delicate pocket watch. The circuits of mammals he compared to a large grandfather clock with a hollow case. Indeed, there is something humbling in the thought that a honeybee, with its milligram-sized brain, can perform tasks such as navigating mazes and open landscapes no less successfully than mammals do. The honeybee may be limited by its small number of nerve cells, but it certainly manages to squeeze as much as possible out of them.

At the other end of the spectrum, the elephant, with a brain five million times larger, suffers from the inefficiencies of a sprawling Byzantine empire. Neural signals take about 100 times longer to travel from one side of its brain to the other, and also from the brain to the foot, which forces the animal to rely less on reflexes, to move more slowly and to spend precious brain resources on planning each step.

Although humans do not sit at the extremes of body size, as bees and elephants do, few people realize that the laws of physics place strict constraints on our mental abilities as well. Anthropologists have speculated about anatomical barriers to brain expansion, such as whether a larger brain could pass through the birth canal of a bipedal human. But if we assume that evolution can solve the birth canal problem, we arrive at deeper, more fundamental questions.

Credit: Brown Bird Design

One might think, for example, that evolutionary processes could increase the number of nerve cells in the brain or the rate at which they exchange information, and that such changes would make us smarter. But several recent research trends, taken together and followed to their conclusions, suggest that adaptations of this kind would quickly run into physical limits. Ultimately, these limits arise from the very nature of nerve cells and from the statistical noise that characterizes the chemical exchanges through which they communicate. "Information, noise and energy are inextricably linked," says Simon Laughlin, a theoretical neuroscientist at the University of Cambridge. "This relationship exists at the thermodynamic level."

If so, do the laws of thermodynamics determine a limit to intelligence based on nerve cells, a limit that applies universally to birds, primates, dolphins and manta rays? This question has probably never been asked in such broad terms, but the scientists interviewed for this article generally agree that it is a question worth exploring. "This is a very interesting point," says Vijay Balasubramanian, a physicist who studies neural encoding of information at the University of Pennsylvania. "I've never seen this point discussed even in science fiction."

Intelligence is of course a loaded word: it is difficult to measure and even define. Still, it seems fair to say that by most methods of measurement, humans are the most intelligent animals on earth. But as our mind developed during evolution, did it approach the hard limit of its ability to process information? Is it possible that there is some physical limit to the evolution of intelligence based on nerve cells - and not just for humans but for all life forms as we know them?

That hungry parasitic worm in your head

Intuitively, the most obvious way to make a brain more powerful is to make it bigger. Indeed, the possible link between brain size and intelligence has fascinated scientists for more than 100 years. Biologists devoted much of the late 19th and early 20th centuries to studying universal principles of life: mathematical laws relating to body mass, and to brain mass in particular, that apply across the entire animal kingdom. One advantage of size is that a larger brain can contain more neurons, which allows for greater complexity as well. But it was already clear then that brain size alone does not determine intelligence: a cow carries in its head a brain more than 100 times larger than a mouse's, yet the cow is no smarter. Instead, it appears that brains that grow along with the body take on more trivial functions: larger bodies may, for example, impose more neural housekeeping unrelated to intelligence, such as monitoring more touch-related nerves, processing information from larger retinas and controlling more muscle fibers.

Eugene Dubois, the Dutch anatomist who discovered the skull of Homo erectus on the island of Java in 1892, wanted a way to estimate the intelligence of animals from the size of their fossilized skulls. He therefore worked on defining a precise mathematical ratio between brain size and body size, on the assumption that animals with disproportionately large brains would also be smarter. Dubois and others amassed a growing database of brain and body weights; one classic paper reported the body, organ and gland weights of 3,690 animals, ranging from woodpeckers to yellow-billed herons to two-toed and three-toed sloths.

Dubois's successors found that the brains of mammals grow more slowly than their bodies, in proportion to approximately the ¾ power of body mass. Thus, a guinea pig whose body is 16 times larger than a mouse's has a brain about 8 times larger than a mouse's. From this insight grew the tool Dubois had been looking for: the encephalization quotient, which compares the brain mass of a species with the brain mass expected from its body mass. In other words, the quotient indicates how far the species deviates from the ¾ power rule. Humans have a quotient of 7.5 (our brains are 7.5 times larger than the rule predicts); dolphins have a quotient of 5.3; monkeys reach 4.8; and bulls, no surprise here, hover around 0.5. In short, intelligence may depend on the neural reserve left over after the brain's housekeeping tasks, such as attending to skin sensations, have been taken care of. Or, to boil it down further: intelligence may depend on brain size, at least superficially.
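The arithmetic behind the ¾ power rule and the encephalization quotient can be sketched in a few lines of Python. This is only an illustration: the function names are invented here, and the brain masses in the usage note are hypothetical round numbers chosen to give a quotient of 7.5, not measurements from the studies described.

```python
def expected_brain_ratio(body_ratio, exponent=0.75):
    """How many times larger the brain is expected to be when the body
    is `body_ratio` times larger, under a power-law scaling rule."""
    return body_ratio ** exponent


def encephalization_quotient(actual_brain_mass, expected_brain_mass):
    """Ratio of the observed brain mass to the mass the rule predicts.
    Both masses must be in the same unit."""
    return actual_brain_mass / expected_brain_mass


# A body 16 times larger predicts a brain about 8 times larger.
guinea_pig_vs_mouse = expected_brain_ratio(16)

# Hypothetical masses (grams): a brain 7.5 times larger than predicted.
example_eq = encephalization_quotient(1500.0, 200.0)
```

With these inputs, `guinea_pig_vs_mouse` comes out at about 8, matching the guinea pig example, and `example_eq` is 7.5. A quotient below 1, such as the 0.5 quoted for bulls, means the brain is smaller than the rule predicts.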

As the brains of birds and mammals grew, they almost certainly benefited from economies of scale. For example, in a larger brain a single signal between neurons can travel along a greater number of neural pathways, so each signal may implicitly carry more information, which suggests that neurons in larger brains can get by with fewer signals per second. But a competing trend may have intervened in the meantime. "I think it is very likely that there is a law of 'diminishing returns' to increasing intelligence indefinitely by adding new brain cells," says Balasubramanian. Size also carries costs, the most obvious being greater energy consumption. In humans, the brain is already the hungriest organ in the body: although it makes up only 2% of body weight, this greedy organ devours 20% of the calories we burn at rest. In newborn babies, the figure is an astonishing 65%.

Keep in touch

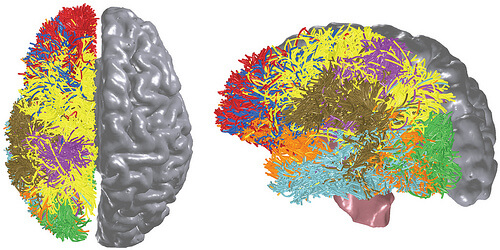

A large part of the energy burden of a large brain comes from its communication networks: in the human cerebral cortex, communication accounts for 80% of energy consumption. But it seems that as size increases, neural connectivity also becomes more challenging for subtler, structural reasons. In fact, even while biologists were still busy collecting data on brain mass in the first half of the 20th century, they were already embarking on a more ambitious project: defining the "design principles" of brains and finding out how these principles are preserved in brains of different sizes.

Each typical nerve cell has a long tail called an axon. The axon branches at its end, and the ends of the branches form synapses, or points of contact, with other cells. Axons, like telegraph cables, can connect different parts of the brain, or join nerves that extend from the central nervous system to different parts of the body.

As part of their pioneering efforts, the biologists measured the diameter of axons under a microscope and calculated the size and density of nerve cells and the number of synapses per cell. They surveyed hundreds, and sometimes thousands, of cells in each brain across dozens of species. Seeking to refine their mathematical curves by extending them to larger and larger animals, they even found ways to extract whole brains from whale carcasses. The five-hour process, painstakingly described by the biologist Gustav Adolf Guldberg in the 1880s, involved a two-man lumberjack saw, an axe, a chisel and a great deal of muscle power to open the top of the skull like a tin can.

These studies have shown that as brains become larger from one species to another, some subtle but apparently unsustainable changes occur. First, neurons become larger on average. This allows each nerve cell in a large brain to connect to more of its neighbors. But larger cells cannot be packed as densely in the cerebral cortex, so the distance between them increases, and so does the length of the axons required to connect them. And since signals take longer to travel along longer axons, these extensions must become thicker to maintain the speed of communication (thicker axons carry signals faster).

The researchers also found that as brains get larger, they divide into an increasing number of distinct regions. You can see these areas when you stain brain tissue and look at it under a microscope: different areas of the cerebral cortex are stained differently. These areas often correspond to certain brain functions, such as speech understanding or face recognition. And as the brains grow, the functional specializations are revealed in another dimension: parallel areas in the left hemisphere and the right hemisphere of the brain acquire different functions - for example, spatial thinking versus verbal thinking.

For decades, this division of the brain into an increasing number of functional sections was considered a sign of intelligence. But it may also reflect a simpler truth, says Mark Changizi, a theoretical neurobiologist at 2AI Labs in Boise, Idaho: functional specialization compensates for the connectivity problem that arises as brains grow. In moving from a mouse brain to a cow brain, which contains more than 100 times as many neurons, there is no way for neurons to grow enough for the connections between them to keep working as well. The brain solves this problem by segregating nerve cells with similar functions into modules that are densely interconnected internally, with far fewer long-distance connections between the modules. The specialization of the right and left hemispheres solves a similar problem: it reduces the amount of information that must pass between the hemispheres, which minimizes the number of long interhemispheric axons the brain needs. "All these things that seem complicated in big brains are just the contortions the brain has to perform to solve the connectivity problem" as it gets bigger, Changizi argues. "That doesn't tell us the brain is smarter."

Jan Karbowski, an expert in computational neuroscience at the Polish Academy of Sciences in Warsaw, agrees. "Brains have to optimize several parameters at once, and there must be trade-offs and compromises," he says. "If you want to improve one thing, you spoil something else." What happens, for example, if you enlarge the corpus callosum (the bundle of axons connecting the right and left hemispheres) enough to maintain constant connectivity as brains grow? And what if these axons are thickened so that the delay for signals traveling between the hemispheres does not increase as brains grow? The results would not be pleasant. The corpus callosum would expand and push the hemispheres apart, until any improvement in performance was quickly neutralized by the added distance.

These trade-offs are strikingly revealed by experiments showing the relationship between axon width and conduction velocity. In the end, says Karbowski, nerve cells do grow as brains grow, but not fast enough to remain as well connected. And axons do thicken as the brain grows, but not fast enough to compensate for the longer conduction delays.

Keeping axons from thickening too quickly saves not only space but also energy, says Balasubramanian. Doubling the width of an axon doubles its energy expenditure, yet increases the conduction speed by only about 40%. Even with all this corner-cutting, as brains grow the volume of the white matter (the axons) still increases faster than the volume of the gray matter (the nerve cell bodies, which contain the cell nucleus). In other words, as brains get larger, more of their volume is devoted to wiring than to the parts of the individual cells that perform the computation itself, which suggests that increasing size is ultimately unsustainable.
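The width-versus-speed trade-off can be made concrete with a small Python sketch. It assumes that conduction speed grows with the square root of axon width and that energy cost grows in direct proportion to width. The square-root rule is an assumption adopted here because it reproduces the "about 40%" figure quoted above; the article itself does not state the scaling law, and the function names are invented for illustration.

```python
import math


def relative_speed(width_factor):
    """Conduction speed relative to the original axon, assuming
    speed scales with the square root of axon width (an assumption)."""
    return math.sqrt(width_factor)


def relative_energy(width_factor):
    """Energy cost relative to the original axon, assuming cost is
    proportional to width (doubling the width doubles the energy)."""
    return width_factor


def speed_per_unit_energy(width_factor):
    """Speed gained per unit of energy spent, relative to the original."""
    return relative_speed(width_factor) / relative_energy(width_factor)


# Doubling the width: energy x2, speed x about 1.41 (a gain of about 40%).
doubled_speed = relative_speed(2)
doubled_energy = relative_energy(2)
```

Under these assumptions the payoff shrinks steadily: `speed_per_unit_energy(4)` is 0.5, so an axon four times as wide delivers only half as much speed per unit of energy as the original.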

The supremacy of primates

Given this dismal state of affairs, it is easy to see why a cow cannot squeeze any more intelligence out of her grapefruit-sized brain than a mouse can out of a brain the size of a blueberry. But evolution has managed some very impressive workarounds at the level of the brain's building blocks. When Jon H. Kaas, a neuroscientist at Vanderbilt University, and his colleagues compared the morphology of brain cells across a wide variety of primates in 2007, they came upon a factor that turned the picture on its head, something that probably gave humans an edge.

Kaas found that, unlike in other mammals, neurons in the primate cerebral cortex grow very little as the whole brain grows. A few neurons do grow, and it is possible that these rare cells carry the burden of maintaining good connectivity. But most cells do not grow. Thus, even as primate brains get bigger from one species to the next, their nerve cells remain packed at almost the same density. So in going from a smaller monkey species to a larger one, with a doubling of brain mass, the number of nerve cells almost doubles, whereas in rodents a similar doubling of brain mass is accompanied by only a 60% increase in the number of nerve cells. This difference has far-reaching consequences. Humans have 100 billion neurons in 1.4 kilograms of brain; to reach that number of nerve cells, a rodent obeying the neuronal scaling rule typical of its order would have to carry a brain weighing 45 kilograms. And metabolically, all that brain matter would gobble up all of the poor animal's energy. "This may be one of the reasons why large rodents do not appear to be any smarter than small rodents," Kaas says.
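The two doubling figures can be turned into power-law exponents, which makes the gap between the primate and rodent scaling rules easier to see. The sketch below assumes that the number of neurons scales as brain mass raised to a fixed exponent, and it treats "almost doubles" as an exact doubling for simplicity; the function names are invented for illustration. It does not attempt to reproduce the 45-kilogram figure, which would require a baseline rodent brain that the text does not give.

```python
import math


def exponent_from_doubling(neuron_factor):
    """Power-law exponent b in neurons ~ mass**b, inferred from how much
    the neuron count grows when brain mass doubles."""
    return math.log2(neuron_factor)


def neuron_factor(mass_factor, exponent):
    """Factor by which the neuron count grows for a given mass factor."""
    return mass_factor ** exponent


def mass_factor_needed(target_neuron_factor, exponent):
    """Factor by which brain mass must grow to reach a target neuron factor."""
    return target_neuron_factor ** (1.0 / exponent)


# Primates: doubling mass roughly doubles the neurons (treated as exact here).
PRIMATE_EXPONENT = exponent_from_doubling(2.0)
# Rodents: doubling mass adds only about 60% more neurons.
RODENT_EXPONENT = exponent_from_doubling(1.6)
```

Under these assumptions the rodent exponent is about 0.68, so multiplying the neuron count tenfold takes a tenfold heavier primate brain but, by `mass_factor_needed(10, RODENT_EXPONENT)`, a rodent brain roughly thirty times heavier.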

A structure in which cells are smaller and more densely packed probably has real implications for intelligence. In 2005, neurobiologists Gerhard Roth and Ursula Dicke, both of the University of Bremen in Germany, surveyed several traits across species that predict intelligence (roughly measured by behavioral complexity) more effectively than the encephalization quotient does. Roth says that there is "a strong correlation only between intelligence and the number of nerve cells in the cerebral cortex and the speed of neural activity," a speed that decreases as the distance between nerve cells grows and increases with the amount of myelin wrapped around the axons. Myelin is a fatty insulating material that allows axons to transmit signals more quickly.

If Roth is correct, the small primate neurons thus have a twofold effect as the brain grows: first, they allow a greater increase in the number of cortical cells, and second, they allow faster communication because the cells are more densely packed. Elephants and whales are quite intelligent, but their larger neurons and larger brains lead to a lack of efficiency. "The packing density of the nerve cells is much lower in them," says Roth, "which means that the distances between the nerve cells are greater and the speed of nerve impulses is much lower."

In fact, neuroscientists have recently seen a similar pattern in the differences between individual humans: people with the fastest lines of communication between brain regions are probably also the smartest. One study, conducted in 2009 by Martijn P. van den Heuvel of the University Medical Center Utrecht in the Netherlands, used functional magnetic resonance imaging to measure how directly different brain areas talk to one another, that is, whether they communicate through a large or a small number of intermediate areas. Van den Heuvel found that shorter communication pathways between brain regions were associated with higher IQ. Edward Bullmore, an imaging neuroscientist at the University of Cambridge, and his colleagues obtained similar results the same year using a different approach. They compared working memory (the ability to hold several numbers in memory at the same time) among 29 healthy people. Then they recorded magnetoencephalographic measurements from the participants' scalps to gauge the speed of communication between their brain regions. People whose brains had the most direct communication and the fastest transmission between areas also had the best working memory.
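The idea of "a large or small number of intermediate areas" corresponds to path length in a network. The Python sketch below is a toy model, not the method used in either study: it treats brain regions as nodes in a hypothetical six-node ring, counts the steps between every pair of nodes with a breadth-first search, and then shows how a single long-distance shortcut lowers the average.

```python
from collections import deque


def average_path_length(adjacency):
    """Mean number of hops between all ordered pairs of connected nodes.
    `adjacency` maps each node to the set of its neighbors."""
    total_hops = 0
    pair_count = 0
    for source in adjacency:
        distance = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search from `source`
            node = queue.popleft()
            for neighbor in adjacency[node]:
                if neighbor not in distance:
                    distance[neighbor] = distance[node] + 1
                    queue.append(neighbor)
        total_hops += sum(distance.values())
        pair_count += len(distance) - 1
    return total_hops / pair_count


def ring_network(size):
    """Hypothetical network in which each region talks only to its two neighbors."""
    return {i: {(i - 1) % size, (i + 1) % size} for i in range(size)}


local_only = ring_network(6)

with_shortcut = ring_network(6)
with_shortcut[0].add(3)  # one long-distance link across the ring
with_shortcut[3].add(0)

local_average = average_path_length(local_only)
shortcut_average = average_path_length(with_shortcut)
```

In this toy network the single added link shortens the average route between regions, which is the sense in which a few long connections can have a disproportionate effect on how directly a network communicates.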

This is a significant insight. We know that as brains get bigger, they save space and energy by limiting the number of direct connections between their regions. The large human brain has relatively few of these long-distance connections. But van den Heuvel and Bullmore have shown that these rare, direct connections have a marked and disproportionate effect on intelligence: a resource-strapped brain need give up only a few of them to slide into markedly poorer performance. "There is a price for intelligence," Bullmore concludes, "and the price is that you can't simply minimize your wiring."

The design of intelligence

If communication between nerve cells, and between areas of the brain, is indeed a major bottleneck that limits intelligence, then evolving nerve cells that are even smaller (and therefore more densely packed and faster to communicate) should produce smarter brains. Similarly, brains might become more efficient by evolving axons that carry signals faster over longer distances without getting thicker. But something prevents animals from shrinking nerve cells and axons beyond a certain limit. You could call it the "mother of all limitations": the proteins called ion channels, which nerve cells use to generate electrical impulses, are unreliable by their very nature.

Ion channels are like tiny valves that open and close through changes in their molecular arrangement. When they are open, they allow sodium, potassium or calcium ions to pass through the cell membrane, creating the electrical signals that nerve cells use to communicate with each other. But because they are so tiny, ion channels can be flipped open or closed by mere thermal fluctuations. A simple biological experiment lays the defect bare: isolate a single ion channel on the surface of a nerve cell using a microscopic glass tube, an operation similar to trapping one ant on the sidewalk under a small glass cup. When you adjust the voltage across the ion channel, an action that should cause it to open or close, the channel does not switch reliably the way a kitchen lamp responds to its switch. Instead, it flickers randomly between the open and closed states. Sometimes it does not open at all; other times it opens when it should not. All that changing the voltage achieves is a change in the probability that the channel will open.

This sounds like a terrible flaw in evolutionary design, but in fact it is a compromise. "If the spring of the channel is too loose, the environmental noise presses on it all the time," says Laughlin, which is what happens in the experiment just described. "If you tighten the spring on the channel more, there is less noise," he says, "but now more work is required to activate it," which would force neurons to invest more energy in controlling the ion channel. In other words, neurons conserve energy by using ion channels that are highly sensitive to activation, but the side effect is that the channels can open or close at random. The upshot of the compromise is that ion channels are reliable only in large numbers, when they can in effect "hold a vote" on whether or not a nerve cell will generate an impulse. But voting becomes problematic as neurons get smaller. "When you reduce the size of nerve cells, you also reduce the number of ion channels capable of transmitting the signal," says Laughlin. "And that increases the noise."
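Why a "vote" among many channels is more reliable than a vote among few can be sketched with basic statistics. The Python example below assumes, purely for illustration, that each channel is open independently with the same probability, so the number of open channels follows a binomial distribution; real channels are more complicated, and the function names and parameter values are invented here.

```python
import math
import random


def relative_noise(channel_count, open_probability):
    """Standard deviation of the number of open channels divided by its
    mean, for independent channels (binomial statistics)."""
    return math.sqrt((1.0 - open_probability) / (channel_count * open_probability))


def simulate_open_counts(channel_count, open_probability, trials, seed=0):
    """Draw `trials` random snapshots and count the open channels in each."""
    rng = random.Random(seed)
    return [
        sum(rng.random() < open_probability for _ in range(channel_count))
        for _ in range(trials)
    ]


# Hypothetical patches of membrane with many versus few channels.
large_patch_noise = relative_noise(10_000, 0.5)
small_patch_noise = relative_noise(100, 0.5)
snapshots = simulate_open_counts(100, 0.5, trials=5)
```

Under these assumptions, cutting the number of channels a hundredfold makes the relative fluctuation ten times larger, because the noise falls only with the square root of the channel count. That is the statistical sense in which shrinking a neuron, and with it the number of voters, increases the noise.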

In two papers published in 2005 and 2007, Laughlin and his collaborators calculated whether the need to include a sufficient number of ion channels limits how far axons can be shrunk. The results were striking. "When axons got to about 150 to 200 nanometers in diameter, they became impossibly noisy," says Laughlin. At this point, an axon contains so few ion channels that the accidental opening of a single channel can prompt the axon to send a signal even though the neuron did not intend to fire. The smallest axons in the brain probably already, at their current size, produce about six such accidental firings every second. Shrink them just a tiny bit more and they would fire spuriously more than 100 times per second. "Neurons in the gray matter of the cerebral cortex work with axons that are very close to the physical limit," Laughlin concludes.

This basic compromise between information, energy and noise is not unique to biology. It applies to everything from fiber-optic communications to amateur radio transmitters and computer chips. Transistors act as gatekeepers of electrical signals, just as ion channels do. For fifty years, engineers have steadily shrunk transistors and crammed more and more of them onto chips to produce faster and faster computers. The transistors in the newest chips measure 22 nanometers. At such sizes, adding another element to the silicon uniformly becomes very challenging (small amounts of other elements are added to silicon chips to tune the properties of the semiconductor). By the time they reach 10 nanometers, transistors will be so small that the random presence or absence of a single boron atom will cause them to behave unpredictably.

Engineers may be able to circumvent the limitations of current transistors by going back to the drawing board, and redesigning chips that will use entirely new technologies. But evolution cannot start from scratch: it has to work within the planning frameworks and with the parts that have existed for half a billion years, explains Heinrich Reichert, a developmental neurobiologist from the University of Basel in Switzerland - it's like building a warship from modified parts of an airplane.

Moreover, there is another reason to doubt that a great evolutionary leap could lead to smarter minds. Biology may have had a wide range of developmental directions when neurons first evolved, but after 600 million years something interesting happened. The brains of a bee, an octopus, a crow, and intelligent mammals don't look at all similar at first glance, Roth says. But if you look closely at the circuits of connections that underlie tasks such as vision, smell, navigation and fleeting memory of sequences of events, "surprisingly they all have exactly the same basic arrangement." Such evolutionary convergence usually indicates that certain anatomical or physiological solutions have reached such maturity that there may not be much room for further improvement.

If so, life may have arrived at an optimal neural blueprint. This blueprint is wired up step by step, as the cells in the growing embryo interact with one another through signaling molecules and physical nudging, and it is evolutionarily well entrenched.

Bees do this

So have humans reached the physical limits of how complex their brains can be, given the building blocks available to us? Laughlin doubts that there is a hard limit on brain function in the way that there is a limit on the speed of light. "It is more likely that this is simply a matter of diminishing returns," he says. "It becomes less and less worthwhile the more you invest in it." Our brain can contain only a certain number of nerve cells; our neurons can form only a certain number of connections with one another; and these connections can carry only a certain number of electrical impulses per second. Moreover, if our bodies and brains were to grow much larger, there would be a price to pay in energy consumption, in waste heat and in the sheer time it would take for nerve impulses to travel from one side of the brain to the other.

However, the human mind may have better ways of growing that do not require further biological evolution. After all, the honeybee and other social insects do it: acting in concert with their hive sisters, they create a collective entity that is smarter than the sum of its parts. Through social interaction, we too have learned to pool our intelligence with that of others.

And then there is technology. For thousands of years, written language has allowed us to store information outside our bodies, beyond our brain's capacity to hold things in memory. It can be argued that the Internet is the most extreme result of this trend toward extending intelligence outside the body. In a way, it may be true, as some say, that the Internet is making us stupid: collective human intelligence (culture and computers) may have reduced the drive to evolve smarter individuals.

_________________________________________________________________________________________________________________________________________________________________

About the author

Douglas Fox is a freelance writer living in San Francisco. He is a frequent contributor to New Scientist, Discover and The Christian Science Monitor. Fox has won several awards, most recently an award in the category "reporting on a significant topic" from the American Society of Journalists and Authors.

And more on the subject

Evolution of the Brain and Intelligence. Gerhard Roth and Ursula Dicke in Trends in Cognitive Sciences, Vol. 9, no. 5, pages 250–257; May 2005.

Cellular Scaling Rules for Primate Brains. Suzana Herculano-Houzel, Christine E. Collins, Peiyan Wong and Jon H. Kaas in Proceedings of the National Academy of Sciences USA, Vol. 104, no. 9, pages 3562-3567; February 27, 2007.

Efficiency of Functional Brain Networks and Intellectual Performance. Martijn P. van den Heuvel, Cornelis J. Stam, René S. Kahn and Hilleke E. Hulshoff Pol in Journal of Neuroscience, Vol. 29, no. 23, pages 7619–7624; June 10, 2009.

19 comments

Wow what an article!

It makes me think of doing a degree in neurobiology

Chemistry and receptors

The article is much more interesting than your empty comment. If you are not interested in an in-depth article, then go read it on Ynet.

If by chance something happened to you today and the balance of chemicals in your brain changed, you would see a different world, with different thoughts and new theories that no one has ever seen or experienced. Of course, this also depends on the person and on many other variables. So please write about an interesting new truth or discovery rather than some scholar's theoretical material; I could offer a theory like that myself, without any understanding beyond the limits of the human box. I say this not out of ill will but out of a desire to learn. Apologies, thanks and peace.

For "I'm just a question":

Your questions:

First question, how do you explain the difference in intelligence between different people?

Second question, is there a difference in the size of the brain?

Third question, is Einstein's brain, for example, bigger than the brain of an average person?

Fourth question, do especially intelligent people have more neural pathways...?

My answers:

First answer, the only difference in the level of intelligence between healthy people is in the type of mental skills that are sought in an intelligence test.

Each person has their own skills that come from the shape and the way their brain works, but intelligence tests only test certain types of mental skills.

The "Mensa" organization (http://www.mensa.org.il/) is aware of this and keeps improving its tests in order to detect as many types of mental skills as possible.

Second answer, there is a difference in the size of the brains of different people, but the difference is not very big, and its meaning is only in the speed of communication between the neurons (this speed has value against a person with a larger brain, but not necessarily against a person with a different brain composition).

Third answer, I don't know, but that is not what made Einstein as intelligent as he was. On the contrary, given the range of human brain sizes, a smaller brain (with faster connections between the neurons) would have served him better; a large brain would mainly require more energy to run and would think less quickly.

Fourth answer, especially intelligent people don't necessarily have more neural pathways, maybe some of them do.

Highly intelligent people are so for one reason only, that their strongest skills are the skills that are more "in demand" socially and/or mentally.

Answer to Eran (according to him in the 4th response to the article):

a) If you mean the myth of using up to 10% of the brain's capacity, the myth has been debunked:

http://he.wikipedia.org/wiki/%D7%9E%D7%99%D7%AA%D7%95%D7%A1_%D7%A2%D7%A9%D7%A8%D7%AA_%D7%94%D7%90%D7%97%D7%95%D7%96%D7%99%D7%9D_%D7%A9%D7%9C_%D7%94%D7%9E%D7%95%D7%97

http://cafe.themarker.com/post/109720/

The question is, even if we use almost the whole brain (of course according to the needs of each situation) is the extent of our use maximal?

I believe not.

Another question, what will happen if evolution allows a person to more easily use the abilities of the subconscious (the subconscious remembers everything, understands much more complicated concepts than the conscious is capable of)?

One of the things that will happen to any average person will be the ability of Sherlock Holmes.

b) On the topic of the high brain abilities of people beyond the "ordinary person on the street":

We are not talking about "special" people, every "average" person has talents that exceed the limit of the average human being, this is a result of the specific composition of every human brain.

What people do not understand is that not every ability of a person is useful in terms of the composition of the world in which we live and not every person understands what their abilities are that go beyond the normal.

Increasing a certain ability really does come at the expense of another ability, but a person's ability does not have to be one specific thing (running, remembering things, calculating). It can also be something with no precise definition, a sort of "collective ability" such as an understanding of computers or numbers (if you have "Yes Action" on TV, look for the series "Numbers"), or of psychology or engineering.

There are geniuses or "mentally gifted" who have almost no ability to understand "social conventions" (at best, they will be able to remember what they did wrong after being explained to them).

Glad I could help. 🙂

The translation is excellent!

http://www.youtube.com/watch?v=pzTmm_I0Rdo

The writer referred to the differences between different animals, but how do you explain the difference in intelligence between different humans? Is there a difference in brain size? Is Einstein's brain, for example, bigger than the brain of an average person?

Do particularly intelligent people have more neural pathways...?

Danish,

What you say is based on an assumption that I think stems from a basic misunderstanding of the nature of theories about the world.

You implicitly assume that there is a mind that produces theories out of nothing. If that were really so, your arguments would be right. But the situation is different! According to the theories, the brain is part of a reality that is made up of building blocks and operates by certain laws.

The brain tries to find out these laws, and that is all it does: it looks for order and for connections between one thing and another. In doing so it also includes itself, since it is itself part of that orderly world that operates according to the laws of physics.

The inability to explain why this is so does not create a contradiction, only a lack of knowledge. We simply assume that we are indeed describing the laws of the world. An assumption does not mean a contradiction, but a lack of knowledge.

Your idea that theories can change is indeed correct, but that didn't stop you from sitting down to write your comment on the science site. All the physical theories according to which those thousands of components were designed (the keyboard, the cable on the motherboard, the processor, the memory, the graphics card, the router, the telephone line, the telephone infrastructure, the Internet servers, etc.) could have changed, and a change of a millionth of a millionth of a percent in the theories would be enough for your entire response not to get through. In general, the whole business would collapse if that happened.

In short, if there is a contradiction here, it is in your writing: on the one hand you claim that the theories should not be trusted, and on the other hand you wrote your response relying on the very theories you reject.

Look for the contradiction yourself.

On the one hand,

The level of writing in Fox's article,

Like Luke, it amazed me too,

no less than the wonders of the mind,

On the other hand,

It's not surprising at all.

After all, Fox used it to create the article.

(The translation is excellent in my opinion)

Exciting! What an article!

Great article! Thanks!

The Internet is a brain in which each unit, whether a person or a computer, constitutes a nerve cell. The unit receives information from tens of thousands of sources and emits one product or one channel. Communication times on the Internet today are on the order of 100 ms, similar to the orders of magnitude inside the brain.

It doesn't have to be only in the field of information. Take food, for example a sandwich: hundreds of people are involved in its production, and we perform only one operation on it.

The writer ignored a fundamental contradiction on the subject.

The brain is not a calculating machine but primarily a creative device. All the scientific theories on which the scientific and technological world is based are the fruit of the mind, including the assumptions regarding thermodynamics and the like on which the writer relies.

And no one knows how to explain why the theories work, why mathematics works, and similar questions. That is, there is no general theory that explains the other theories. Obviously, such a theory would contradict itself.

After all, just as the theories were created up to now, they may change, because nothing prevents the brain from creating new theories in the future that are much more elaborate than the existing ones.

Therefore, dealing with the questions that the article raises is actually a circular paradox that no answer can resolve.

Undoubtedly, one of the most fascinating and important fields.

Thanks!

Fascinating article, thank you.

I wanted to ask whether there is any truth in the assumption that we do not use all of the brain's capacity.

And perhaps someone can answer this: there are special people who have much higher abilities than the ordinary person on the street (such as people with an exceptional memory or amazing computational abilities, like Einstein). Are these people a product of evolution, given that these abilities are clearly unique and only a chosen handful have them? And if not, will increasing one or more abilities in the brain limit it in other things?

Thanks

"The being of evolution" as the best brain engineer.

Amazing, I enjoyed every letter of the article.

Thanks

And there is a possibility that we ourselves will create a being smarter than us, which will of course bring our end.

Or we will create a synergy between external components and a person that will surpass the human mind.

There is an unasked question:

Is there an upper limit to consciousness/intelligence? I don't think so.

Is man the limit of consciousness/reason? No.

If so - biological or cultural evolution will find a way to produce a much more intelligent brain.