To adapt, the robot must interpret the human's actions in light of the fact that the human predicts the robot's actions, and bases this prediction on a feeling that the robot has intelligence and goals

Israel Benjamin, "Galileo"

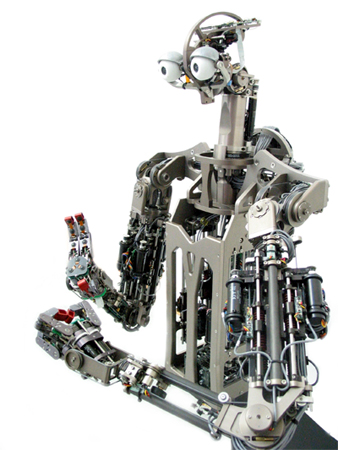

The robot Domo

A play for two people (one of them digital)

Location: Laboratory in Cambridge, Massachusetts


Participants: Aaron Edsinger, roboticist; Domo, a robot

Aaron: "Hey, Domo."

Domo: "Domo."

Aaron: "A shelf, Domo."

Domo: "Shelf."

Domo looks around and locates a high surface, reaches out his right hand and touches the surface (making sure his eyes didn't mislead him).

Domo reaches out his left hand to Aaron, and Aaron places a bag of coffee beans in the robot's open hand.

A luxury for the lazy? (Photo: Jay Vance)

Domo moves the bag to his right hand and places it on the shelf.

Aaron: "A box."

Domo: "Box."

Aaron hands Domo a large box, and Domo holds it with both hands. Aaron moves back and to the left. Domo moves so that the box is close to Aaron. Aaron puts some objects in the box.

Aaron: "A table."

Domo: "Table."

Domo places the box on the table.

(Applause, curtain)

This play is often performed in Edsinger's laboratory (without the applause and the curtain), and it represents a situation in which a robot helps a person arrange objects around the house.

Those who are not involved in robotics may not notice it, but here Domo succeeds at particularly difficult tasks: hand-eye coordination, manipulation of objects the robot has never encountered before, and cooperation with a person through visual tracking of the person's movements and of the objects the person is holding. These are just some of the capabilities of Domo, one of the first robots able to perform a large number of tasks within the human space.

Separate spaces

Robots in science fiction are sometimes dangerous: they attack humans because they refuse to serve, and strive to free themselves from enslavement or to enslave (or kill) humans. The difference between imagination and reality is ironic: in reality, robots may be dangerous because we have not yet managed to teach them to take account of the humans around them. On robotic production lines, for example, there are safety fences that workers must not cross before turning off the robots.

Inside these fences the robots "rule" - not out of aggression or ambitions of conquest, but because the software that controls the robot is not prepared (or not prepared enough) for possible changes in the environment in which the robot operates. The robot performs exactly the action it was designed for. This action may include fast movements, moving heavy objects and dangerous operations (such as welding or nailing). The first death attributed to a robot probably occurred in 1979, at a foundry in Michigan. In 1981, a maintenance engineer in Japan was killed when he crossed the safety fence to repair a robot without shutting it down completely.

The solution adopted on production lines is, in effect, to separate the spaces where robots work from the spaces where humans may be found. This limitation greatly reduces the potential uses of robots. If we want to work alongside robots in the same space, they must be sensitive to our existence, our needs and our limitations.

Apart from safety, there is another important reason for separating the spaces: the human space cannot be predicted in advance. Objects, animals and people may appear, move, change and disappear. Even a robot designed for only one task in one room must adapt to a huge variety of possibilities.

Take, for example, a robot whose sole function is to pick up dishes from the table, carry them to the sink, wash them and place them in the drying rack. If we designed this robot the way one designs a robot for a production line, we would feed its software a precise description of every dish it might find, and we would require the users to put each dish in a designated place on the table. We would also have to position the table and chairs precisely, make sure there were no obstacles on the floor, and remove unnecessary objects from the table and the sink.

Once the preparations were complete, we would leave the room before the robot started to operate, and make sure that no household members or pets could enter. Most of us would ignore such a product, and would become interested only when the robot can operate in the same space as us.

But do we really need a dishwashing robot? Aren't such luxuries meant only for tech addicts and the lazy? In fact, these technologies matter a great deal for our future lives. In many developed countries, the aging population is expected to need help far beyond the capacity of the workforce (local and foreign workers combined). Robots that help with housework would be a good start in tackling this important challenge.

Cognitive challenges

What is required for a robot to function in the human space? It must be able to recognize objects relevant to its task, even if it has not encountered these objects before: for example, the dishwasher robot must be able to handle a new mug, which is different from the mugs and glasses it has encountered before.

It must "know physics" at the level of everyday life: where a utensil can be placed, and which side should face down so that the utensil stays stable after the robot lets go of it. The answer differs from case to case: placing a utensil on a shelf is different from placing it in the drying rack, and the robot must know that in the drying rack it is better to place glasses with their opening facing down, even though they are also stable with the opening facing up.

It must identify the objects in its environment that are needed for the task - in our example, a table, a sink, dish soap, etc. It must identify obstacles and avoid them. It must pay particular attention to people: avoid harming them or interfering with their actions (imagine the robot taking dishes from the table during the meal), and be attentive to their needs as expressed in verbal commands or body movements.

These requirements are only some of those that follow from the move to a shared space, and they come on top of the requirements of the task itself: in our example, washing dishes. If we expect the robot to perform better than a dishwasher, it should, for example, detect stains. How do we distinguish between decorations on plates and dirt? How will the robot do it?

In fact, these requirements are quite general, and a robot that meets them will not be limited to washing dishes: it will be able to do much more. This is good news, because the limitations we set when presenting the example are too strict - we assumed that the robot could only move dishes from the table to the sink and from the sink to the drying rack. Of course we would also like it to collect dishes from other surfaces, take a dish that is within its reach, and transfer dishes from the drying rack to the storage cabinet (or dry the dishes itself). If it can do all this, the robot will be able to perform other tasks as well, at least those that involve collecting, cleaning and arranging.

On the other hand, if we greatly reduce the requirements, we can also find a place for much simpler robots that nevertheless perform useful actions in the human space. The best-known examples are robotic vacuum cleaners and lawnmowers: such a robot usually moves within a limited area, and throughout this area its equipment works in the same way (suction, mowing).

The robot covers its assigned area by combining attempts at systematic coverage with random movements (to deal with obstacles and unexpected terrain shapes). These robots pay for the ability to act in the human space with inflexibility: not only are they limited to a single type of activity, they can also receive and process very little information from the environment (such as the location of walls, after bumping into them). Therefore, although they work next to humans, it is difficult to say that there is any cooperation between them and us.
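As a rough illustration of this mix of systematic and random movement, here is a minimal Python sketch. It is a toy grid model, not the algorithm of any actual product: the grid encoding, the `cover` function and its parameters are all assumptions made for the example. The robot keeps moving onto cells it has not yet cleaned (preferring to go straight), and falls back on a random step only when every neighbouring cell is already clean.

```python
import random

HEADINGS = [(0, 1), (1, 0), (0, -1), (-1, 0)]  # east, south, west, north


def cover(grid, start, max_moves=10_000, seed=0):
    """Toy coverage run on a grid of strings ('#' = obstacle, anything else = floor).

    Returns the set of visited cells and the number of moves made.
    """
    rng = random.Random(seed)
    free = {(r, c) for r, row in enumerate(grid)
            for c, ch in enumerate(row) if ch != "#"}
    pos, heading = start, HEADINGS[0]
    visited = {pos}
    moves = 0
    while visited != free and moves < max_moves:
        # Candidate steps: the current heading first, then every heading in fixed order.
        candidates = [(h, (pos[0] + h[0], pos[1] + h[1])) for h in [heading] + HEADINGS]
        reachable = [(h, cell) for h, cell in candidates if cell in free]
        if not reachable:
            break  # completely boxed in
        fresh = [(h, cell) for h, cell in reachable if cell not in visited]
        # Systematic part: take the first unvisited neighbour.
        # Random part: if there is none, wander to any reachable neighbour.
        heading, pos = fresh[0] if fresh else rng.choice(reachable)
        visited.add(pos)
        moves += 1
    return visited, moves


# A small room with a table ('#') in the middle.
room = ["....",
        ".##.",
        "...."]
cleaned, moves = cover(room, start=(0, 0))
print(len(cleaned), "cells cleaned in", moves, "moves")
```

Note how little the robot knows: it learns about a wall or an obstacle only by failing to move into it, which mirrors the limited information described above.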

Anatomy of a robot

What hardware should the robot include? In general, it needs sensors to receive information about the environment, and the means to act in that environment. The need for sensors that play the role of "eyes" to see the environment and of "ears" to receive instructions is clear, but other senses are also required. In particular, the robot must sense resistance to its actions: so that it knows when it is gripping an object hard enough (and not too hard), so that we can "grab its arm" and point it in another direction, and so that it can estimate the mass of the object it is lifting (without an estimate of the object's mass and weight, it cannot plan a controlled movement to lift and move it).

To operate in the environment, the robot needs the ability to move: "arms" and "fingers" for grasping, movement of parts of the body or of the whole body, and movement of the "eyes" to direct them to the desired area. It should also be equipped with a voice to talk to its operators. There is a lot of logic in building such a robot in a way that imitates the structure of the human body: this structure is familiar to engineers, who can draw inspiration from the operation of biological systems; it is familiar to human users, who will be able to relate to it more easily; and it suits the environment and objects designed for human use.

The hardware required for the robot is currently available off the shelf. The software is the hard part. In fact, software that accomplishes all this will already approach the cognitive achievements of some of the more advanced animals: awareness of the environment, cooperation, advance planning of actions, use of tools and learning of new skills.

It can also be argued that a certain level of self-awareness is required here: the robot must see itself as an "agent" within the environment, that is, an object that not only exists in the environment but also works within it to fulfill known goals, similar to how humans are "agents". Without such "awareness" it will be difficult to achieve cooperation between the robot and its human owners, because in the actions of the humans and their instructions there is a hidden assumption of the existence of the robot as an "agent". This unconscious assumption causes the human to act as if the robot has goals to strive for, as well as undesirable situations that the robot will strive to avoid.

For example, if a person orders the robot to "come here", and there is a table between them, the robot will go around the table to reach the required place. The person, who treats the robot as an "agent", expects this path of movement and already reaches out his hand in the direction from which he expects the robot to come.

Although the direction in which the hand is extended is not the straight line between the human and the robot, the robot must understand that the human intends to hand it some object, and already begin the action required to receive the object. We are all so used to such interactions that we are not aware that we are constantly predicting the behavior of the people around us. To feel the difference, think of a person operating a remote-controlled toy car: the operator knows that the car is not an "independent agent", and will therefore steer it along the correct route around the table, whereas a person expects a robot to follow a "logical" route without guidance.

To adapt, the robot must interpret the human's actions in light of the fact that the human predicts the robot's actions, and bases this prediction on a feeling that the robot has intelligence and goals. As this example shows, a robot that is not designed to see itself as an "agent" will have difficulty understanding even simple instructions without tedious, detailed explanations, and without its actions colliding with the movements and needs of humans.

The MIT Robot Dynasty

Operating in a dynamic, changing environment? Understanding instructions and carrying them out? Being aware of other agents' goals and of one's own existence as an agent? Such software does not exist today, but its requirements are close to ambitions that artificial intelligence has held for at least fifty years.

One of the leading groups in this field is the humanoid robotics group ("humanoid" = human-like) of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at the Massachusetts Institute of Technology (MIT).

This group is part of the "living and breathing robots" research area within CSAIL, whose activities are described at the link at the end of the column. The humanoid robotics group, like other activities at CSAIL, embraces the ideas of CSAIL director Rodney Brooks, one of the most influential researchers in the field of robotics.

In 1990, Brooks wrote an important article called "Elephants Don't Play Chess" (link at the end of the column), in which he claimed that in its first thirty years, artificial intelligence was based mainly on the "symbol system hypothesis", according to which intelligence is achieved by representing the world in symbols: the sensory system identifies each object, each attribute and each action clearly and individually.

From here on, symbols can be regarded as disconnected from the tangible world, and what remains for the cognitive process is to process the information that came from the sensory system according to the known relationships between the symbols, learn new relationships and plan actions that will lead to the achievement of the goals (which are also represented by symbols). In the eyes of supporters of this approach, the separation of the symbols from the symbolized in the real world is a great advantage, because it allows the same general thinking mechanisms to solve many different problems.

For example, if there is a general mechanism for finding a way from the current state to a required state by considering intermediate states and the actions that cause transitions between states, the same mechanism can be used both to plan how to reach some destination (around the table, to the door, to the other room, etc.) and to move a utensil to its place (move the hand to the utensil, grip it firmly, lift it, etc.).
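To make the idea of one general mechanism serving two different problems concrete, here is a minimal Python sketch. It uses breadth-first search as the general mechanism; this choice, the function names `plan`, `walk` and `handle`, and the tiny symbolic worlds are all assumptions made for illustration, not something taken from Brooks' article or from any particular system.

```python
from collections import deque


def plan(start, goal, actions):
    """Generic breadth-first planner.

    `actions(state)` yields (action_name, next_state) pairs.
    Returns the list of action names leading from start to goal, or None.
    """
    frontier = deque([start])
    came_from = {start: None}  # state -> (previous state, action name)
    while frontier:
        state = frontier.popleft()
        if state == goal:
            steps = []
            while came_from[state] is not None:
                state, name = came_from[state]
                steps.append(name)
            return steps[::-1]
        for name, nxt in actions(state):
            if nxt not in came_from:
                came_from[nxt] = (state, name)
                frontier.append(nxt)
    return None


# Problem 1: walking around a table ('#') in a small room; states are (row, col).
ROOM = ["....",
        ".##.",
        "...."]
MOVES = {"east": (0, 1), "south": (1, 0), "west": (0, -1), "north": (-1, 0)}


def walk(state):
    r, c = state
    for name, (dr, dc) in MOVES.items():
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(ROOM) and 0 <= nc < len(ROOM[0]) and ROOM[nr][nc] != "#":
            yield name, (nr, nc)


# Problem 2: putting an object on a shelf; states are (hand place, object place, holding?).
def handle(state):
    hand, obj, holding = state
    if not holding and hand != obj:
        yield "reach", (obj, obj, False)
    if not holding and hand == obj:
        yield "grasp", (hand, obj, True)
    if holding and hand != "shelf":
        yield "carry to shelf", ("shelf", "shelf", True)
    if holding:
        yield "release", (hand, obj, False)


print(plan((1, 0), (1, 3), walk))
# ['south', 'east', 'east', 'east', 'north']
print(plan(("rest", "table", False), ("shelf", "shelf", False), handle))
# ['reach', 'grasp', 'carry to shelf', 'release']
```

The planner never looks inside a state: it sees only symbols and the transitions between them, which is exactly the separation between symbol and world that supporters of this approach regard as an advantage.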

From this it can be seen that the behavior of the system does not arise from any particular part of the network of symbols, but from the interaction between all the parts: the state of the world is translated into symbols, abstract thinking mechanisms act on the symbols, until a symbol is activated which is translated into behavior that can be seen from the outside. This approach works well for chess, but is not suitable for explaining the behavior of "simple" animals, let alone the complex behavior of elephants, hence the title of Brooks' paper.

Brooks opposed this assumption with the "physical grounding hypothesis", according to which intelligence arises from a combination of parts, each of which is well connected to the physical world and operates within it. This approach proposes to see every intelligent system as being made up of parts, each of which contains a behavior, meaning that each of its parts receives some information from the world that surrounds it and reacts to this information in a way that affects the world. More complex and interesting behaviors are achieved through interaction between these parts.

So, for example, as we will see later, Domo does not plan all the steps of the action, but activates behaviors such as "moving to see from a better angle" or "getting closer to the object" - behaviors that both change the physical state and enable activation of the following behaviors.

This is not to say that Domo lacks any planning ability, but rather that the detailed planning of some operations takes place while other operations are being carried out, some of which generate additional information needed to continue the movement. Such actions are also more robust to "surprises" (for example, when the table moves while the robot is approaching it) and to inaccuracies in the calculated positions of the table, the objects and the robot's body.
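The behavior-based idea described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Domo's software: the world is a small dictionary, and the behavior names, the `run` loop and the `surprise` parameter are invented for the example. Each behavior looks at the current state of the world and, if it applies, changes that state; no behavior plans the whole sequence.

```python
# Each behavior: (name, condition on the world, action that changes the world).
# Earlier entries take priority over later ones.
BEHAVIORS = [
    ("grasp",
     lambda w: not w["holding"] and w["distance"] == 0 and w["view_clear"],
     lambda w: w.update(holding=True)),
    ("look from a better angle",
     lambda w: not w["holding"] and not w["view_clear"],
     lambda w: w.update(view_clear=True)),
    ("move closer",
     lambda w: not w["holding"] and w["distance"] > 0,
     lambda w: w.update(distance=w["distance"] - 1)),
]


def run(world, behaviors, max_ticks=20, surprise=None):
    """Repeatedly fire the first behavior whose condition holds.

    `surprise` optionally maps a tick number to changes forced on the world,
    e.g. {2: {"distance": 3}} to model the object being moved away.
    """
    log = []
    for tick in range(max_ticks):
        if surprise and tick in surprise:
            world.update(surprise[tick])
        for name, applies, act in behaviors:
            if applies(world):
                act(world)
                log.append(name)
                break
        else:
            break  # no behavior applies: nothing left to do
    return log


calm = run({"distance": 2, "view_clear": False, "holding": False}, BEHAVIORS)
print(calm)
# ['look from a better angle', 'move closer', 'move closer', 'grasp']

# The object is moved away at tick 2; the same behaviors simply keep closing the gap.
moved = run({"distance": 2, "view_clear": False, "holding": False},
            BEHAVIORS, surprise={2: {"distance": 3}})
print(moved)
```

Because each behavior reacts to the world as it is at that moment, the "surprise" needs no special handling, which is the robustness the text attributes to this style of control.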

This approach corresponds to the ideas of several philosophers, such as Daniel Dennett, whose work deals with consciousness. In the mid-nineties, Dennett collaborated with Brooks and his students on the design of the robot Cog (the name seems to allude both to "cognitive" and to a tooth in a gear). Cog was one of the first in CSAIL's line of humanoid robots (Brooks had previously developed robots that were closer in design and purpose to insects).

CSAIL, and robots like Cog and Domo, are where philosophy and competing ideas about the nature of intelligence on the one hand meet technological capabilities and practical needs on the other. This is not merely a successful collaboration between two fields: in the view of Brooks and his students, it is impossible to make progress in either field on its own, because the mind is "physically grounded" - that is, it owes its very existence to physical interaction with the world.

Collaboration, relevance and "movement thinking"

Three principles dictated the design of the Domo robot and its goals. The first is "cooperative manipulation": at least for now, the robot's usefulness in the human space depends on humans guiding it. However, as long as the time saved by using a robot is greater than the time required to guide it, even non-independent robots can be useful helpers.

If working together with the robot is intuitive, the person and the robot working together will be able to quickly and comfortably perform tasks that are impossible (or much slower) for the person alone. This idea is not new, and there are already robots that cooperate with people in tasks where the person guides the robot through joint movements (such as carrying a heavy object together with the robot).

Domo is one of the first robots in which guidance is given through "social interaction" - the robot understands what is required of it by watching the human's movements and receiving verbal commands, as in the "play" with which we opened, and also by having the human move the robot's hand to the required place and leave it there.

It is important to note that the social interaction is two-way: the person also receives messages from the robot, as at the point in the play where the robot extends its open hand towards the person. This is a clear and intuitive message that the robot is ready to receive an object, and the person already understands that he must place the object in the robot's hand.

This is an elegant solution to the difficulty that Domo may encounter if it is required to pick up an object from the table: it is not always clear to the robot how to recognize the object or where to grasp it. In this way, intuitive social interaction compensates for the limits of the robot's independence without harming its potential usefulness.

The second principle is relevance to the task: in many studies, the planning of robotic actions is carried out taking into account all the information the robot has about the state of the world around it.

Domo, on the other hand, is designed to concentrate solely on aspects of the environment relevant to the performance of its current task. As a general problem, deciding which aspects are relevant may be difficult, but Domo is built to operate in human environments, and here it is actually an advantage: the objects that Domo will be required to manipulate are the ones that are common in such environments, and they have some common characteristics, such as comfortable grip points.

Therefore Domo can ignore the detailed structure of the object and concentrate on the grip point and the edges of the object. These decisions made it easier for Edsinger and his team to succeed without special markings on the objects, whereas many other robots require "reference points" to be specially marked on the objects they come into contact with (Domo also uses such markings for the shelf, but not for the everyday objects presented to it).

The third principle is "thinking with the help of the body": other robots try to fully decode the image captured by their cameras and, based on this decoding, carefully plan the movement of each of the joints ("shoulder", "elbow", "wrist" and "fingers" are only some of Domo's joints, which give it 29 "degrees of freedom", in the language of engineers). Domo replaces some of these calculations with movements that provide it with additional information.

One of the important parts of Domo's design is elastic movement: resistance to movement causes it to stop, so the robot is not only safer but also receives information, through the elastic joints, about the strength of the resistance and its direction. Joints designed this way may be less accurate, but the visual tracking of hand movement makes up for it. This is the reason why the robot verifies, by touching the shelf, the existence and location of the shelf.

In fact, the robot can change the "stiffness" of its joints at any moment, so that they function as springs of variable softness. Domo also lightly shakes any object that is presented to it, to test its weight and the dynamics of its movement.
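The way a springy joint doubles as a sensor can be illustrated with a minimal Python sketch. It assumes an idealized linear spring between motor and limb, and a simple static estimate of the mass held at the end of a horizontal arm; the function names, numbers and units are assumptions for the example and do not describe Domo's actual controller.

```python
G = 9.81  # gravitational acceleration, m/s^2


def spring_torque(stiffness, motor_angle, link_angle):
    """Torque (N*m) transmitted by an ideal spring of the given stiffness (N*m/rad).

    The deflection between where the motor is and where the limb actually is
    tells the robot how hard something is pushing back.
    """
    return stiffness * (motor_angle - link_angle)


def should_stop(torque, limit):
    """Stop moving when the sensed resistance exceeds a safety limit."""
    return abs(torque) > limit


def estimate_mass(torque, arm_length):
    """Mass (kg) that would produce this static torque at the end of a horizontal
    arm of the given length (m), ignoring the weight of the arm itself."""
    return torque / (G * arm_length)


deflection_case = spring_torque(stiffness=50.0, motor_angle=0.30, link_angle=0.20)
print(round(deflection_case, 3), should_stop(deflection_case, limit=3.0))

# The same deflection with a softer joint means a gentler push.
soft_case = spring_torque(stiffness=10.0, motor_angle=0.30, link_angle=0.20)
print(round(soft_case, 3), should_stop(soft_case, limit=3.0))

print(round(estimate_mass(torque=4.905, arm_length=0.5), 3))
```

Lowering the stiffness makes the joint softer and safer at the cost of positional accuracy, which is the trade-off the text says visual tracking of the hand compensates for.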

The idea of "thinking while moving" is also expressed in other ways. For example, it is not always easy for the robot's vision system to accurately decode the position and orientation of the hand in order to orient the hand and fingers. Instead of improving the visual decoding, Edsinger's team chose to teach Domo a simple behavior: Domo moves its head so that it sees its hand from an angle at which decoding is easier.

These behaviors, in which the action itself is used to control progress and produce information about the world, are reminiscent of the way humans act: humans do not analyze all available sensory information, plan all the steps of the action, and then carry out the plan; they begin to act, and the act itself provides additional information and the basis for further planning. This similarity is not accidental: according to Brooks, it stems from the "physical grounding" approach, in which behaviors are built from other behaviors, and which is the basis of human intelligence as well as robotic intelligence.

Is all this enough to build a robot that we would like to bring into our homes? At the moment, no. Although Domo and other robots of its generation are no longer limited to pre-defined and pre-planned tasks, their range of capabilities is still too small. However, Domo's website (link at the end of the column) has videos that show, among other things, the chronological development of Domo's abilities. This development justifies optimism about the future.

Does Domo justify the other hopes of its designers, who see it as part of a research program towards intelligent and self-aware robots? Again, not at the moment, but it is interesting to note that people who encounter Cog or Domo for the first time often feel that they are facing a sentient being. This feeling is an illusion caused by deception - to some extent intentional - that results from the robot's human-like structure and the social behaviors programmed into it (for example, looking into the human's eyes and extending a hand).

According to philosophers such as Dennett, this illusion offers clues about how we judge consciousness in others and even in ourselves. In the same spirit, CSAIL director Rodney Brooks has described how he would know that his robots had achieved awareness: when his students hesitated to turn them off at the end of the day.

Links

Website of the "living and breathing robots" research area at CSAIL

The article "Elephants Don't Play Chess"

Israel Binyamini works at ClickSoftware developing advanced optimization methods.

From the August issue of "Galileo"
