The Internet of Things and Big Data, developments of the last few years, owe their existence to the mathematical development of the field known as information theory, founded by Claude Shannon, who was born on this day in 1916.

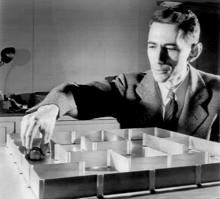

Claude Shannon (1916-2001), an American electrical engineer and mathematician, is considered the founder of information theory and the person who laid the most solid mathematical foundations of digital communication. As soon as his landmark paper was published in 1948, he was recognized as one of the most brilliant scientists of the 20th century. Of all his contributions to communication, one stands out: the basic relationship between information rate, bandwidth and signal-to-noise ratio, known as the Shannon-Hartley theorem and considered the central law of communication theory.
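The relationship mentioned above is usually written C = B log2(1 + S/N), where C is the channel capacity in bits per second, B is the bandwidth in hertz and S/N is the signal-to-noise power ratio expressed as a plain ratio rather than in decibels. The short Python sketch below simply evaluates that formula; the function names and the example channel (3 kHz at 30 dB) are illustrative assumptions, not figures from the article.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio from decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity in bits per second: C = B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


# Illustrative (assumed) channel: 3 kHz of bandwidth at a 30 dB signal-to-noise ratio.
capacity = shannon_capacity(3_000, db_to_linear(30))
print(f"{capacity:.0f} bit/s")
```

With these assumed values the channel tops out at roughly 30 kbit/s. The formula also shows why bandwidth matters so much: doubling B doubles C, while doubling the signal-to-noise ratio at high SNR adds only about B extra bits per second.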

In 1940 he completed his doctoral thesis at MIT, which dealt with mathematical models of genetics. He then moved to the Institute for Advanced Study in Princeton, where he met intellectual giants of the 20th century, including Albert Einstein, Kurt Gödel, John von Neumann, Hermann Weyl and others.

The vast scientific and technological effort of World War II drew him to Bell Laboratories in 1942, where he worked on advanced communication and encryption projects. There he met his wife, the mathematician Elizabeth (Betty); the two married in 1949 and raised three children.

At Bell Laboratories he took part in building encryption systems, the best known of which was the "X System" (SIGSALY), a secure speech scrambler that connected Winston Churchill in London with Franklin D. Roosevelt in Washington and let them coordinate wartime moves in complete secrecy. For a time he also worked with Alan Turing, a leading figure among the British scientists who cracked the German Enigma cipher machine.

Despite this long list of achievements in mathematics and engineering, Shannon avoided one of the common pitfalls of geniuses: taking himself too seriously. He juggled, was seen riding a unicycle through the halls of Bell Labs, and invented a rocket-powered Frisbee and a flame-throwing trumpet. The inventions made possible by his ideas are everywhere today.

According to Prof. Ali Levin of the Afeka College of Engineering, it is hard to overstate the importance of Shannon's legacy. The thesis he wrote for his master's degree is still the basis of digital electronic computing. As a cryptographer serving the US government during World War II, he produced the first mathematical proof that a cipher can be unbreakable. For fun, he played with electronic switches, and one of his inventions was an electromechanical mouse named Theseus that could find its way through a maze; today it looks like an early step toward artificial intelligence. His work on electronic communication and signal processing earned him the title "Father of Information Theory" and led to revolutionary changes in how data is stored and transmitted.
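The unbreakable cipher associated with Shannon's wartime work is the one-time pad, which he proved offers perfect secrecy provided the key is truly random, as long as the message, and never reused. A minimal Python sketch of the scheme follows; the function names are illustrative, and Python's `secrets` module stands in for a true random source.

```python
import secrets


def otp_encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """One-time pad: XOR every message byte with a fresh random key byte.

    The key is as long as the message and must never be used again."""
    key = secrets.token_bytes(len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, key))
    return ciphertext, key


def otp_decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """XOR is its own inverse, so applying the same key recovers the message."""
    if len(key) != len(ciphertext):
        raise ValueError("key and ciphertext must have the same length")
    return bytes(c ^ k for c, k in zip(ciphertext, key))


ct, key = otp_encrypt(b"ATTACK AT DAWN")
assert otp_decrypt(ct, key) == b"ATTACK AT DAWN"
```

Because every possible key is equally likely, every plaintext of the same length is an equally plausible decryption of the ciphertext, which is the intuition behind Shannon's proof.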

Crossing the limit?

In recent years, however, we have had to ask whether we are approaching the limit of what is possible. In 2013, Scientific American interviewed Marcus Hoffman, director of Bell Labs research in Holmdel, New Jersey, at the time the research and development arm of Alcatel-Lucent.

Hoffman and his team see the "information domain" as the way forward: an approach that would make it possible to increase the Internet's capacity by raising the network's "intelligence quotient".

"We know that nature sets limits for us. Only a limited amount of information can be transmitted over a given communication channel. This phenomenon is known as the 'nonlinear Shannon limit', and it tells us how far we can advance with the technologies we have. We are already very close to this limit, within a factor of two or so. In other words, once the volume of traffic on the network doubles, which could happen within the next four or five years, we will reach the Shannon limit. So we face a fundamental barrier. We cannot stretch this limit in any way, just as we cannot increase the speed of light. We must therefore work within these limits and still find ways to sustain the necessary growth.

"Our smartphones, computers and other gadgets produce large amounts of raw data, which is sent to data centers for processing and storage. In the future, it will not be possible to handle all the data on the planet by sending it to a single centralized data center. Instead, we may move to a model in which decisions about data are made before it is uploaded to the network. For example, with a security camera at an airport, we would program the camera, or a small server that controls several cameras, to perform facial recognition on the spot against a database stored in the camera or server, before uploading any information to the network."
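The airport-camera example amounts to filtering data where it is produced and uploading only what matters. The Python sketch below illustrates that idea under loud assumptions: real facial recognition is replaced by a hypothetical feature vector per frame, compared by cosine similarity against a small watchlist stored on the device. `Frame`, `filter_at_edge`, the 0.9 threshold and the sample data are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Frame:
    camera_id: str
    embedding: list[float]  # hypothetical face-feature vector computed on the device


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filter_at_edge(frames: list[Frame], watchlist: dict[str, list[float]],
                   threshold: float = 0.9) -> list[tuple[str, str]]:
    """Match each frame against the locally stored watchlist and return only
    (camera_id, person) pairs worth uploading; all other frames stay local."""
    uploads = []
    for frame in frames:
        for person, reference in watchlist.items():
            if cosine_similarity(frame.embedding, reference) >= threshold:
                uploads.append((frame.camera_id, person))
                break
    return uploads


watchlist = {"suspect-17": [0.9, 0.1, 0.4]}  # hypothetical on-device database
frames = [Frame("gate-A", [0.88, 0.12, 0.41]), Frame("gate-B", [0.1, 0.9, 0.2])]
print(filter_at_edge(frames, watchlist))
```

Only the gate-A match would leave the device here; the gate-B frame is discarded locally, which is the bandwidth saving the quote describes.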

A distributed network is the solution

"The volume of data is enormous and requires artificial intelligence, analytics and distributed computing," says Dr. Ruth Bergman, director of HPE's development center in Israel.

Bergman spoke at "The Digital Summit - From Strategy to Implementation", the annual conference of the Bureau of Information Technologies in Israel, named after Shlomo Tiran, one of the founders of the Bureau of Information Systems Analysts, and produced by People and Computers.

Dr. Bergman and her group have been working on data analytics for 20 years, and their developments are designed to handle Big Data so that insights can be drawn from all the information now flowing in from everywhere.

According to her, "there are three major trends: AI, or cognitive computing; analytics, which used to be the domain of people with doctorates in computer science and statistics and is found today in every mobile application; and the Internet of Things. The sheer amount of information forces developers to be smart and decentralize their systems, and today the roles of the developer and the algorithm designer are converging."

"In the future, devices will be a little more intelligent, and a lot of the computing will move from the cloud to them," Bergman explains. "Our project The Machine reflects our view of all these trends."

Communicate with each other and with memory

"One of the things that must change is the bandwidth between the processor and storage. Today it is very slow, and there is talk of unifying memory and storage in a single unit. A technological development that could help is non-volatile memory that is faster than Flash: in effect like DRAM, but much cheaper and persistent, so that it could replace the hard disk. We would then have memory as fast as DRAM with the capacity of a disk."

Another change, says Dr. Bergman, "is the use of optical communication. Today optical fibers are used for communication between remote computers, but we intend to introduce optical links between the processor and the memory, so that we can access our information much faster. To this end, we developed a new computing architecture that moves us from an entirely cloud-centric world to distributed systems, and from processor-centric to memory-centric computing."

"The architecture unites all the memory into what the processors see as a single unit, and the processors can communicate with each other and with the memory," she explains. "We are talking about a huge 300-terabyte memory at the center, with all the processors connected to it."

"This is especially important for machine learning, where the computer has to learn a model and analyzes huge amounts of information to do so. The problem is a bottleneck: every time, the processor has to update the memory with the new model. Shared memory will let us update the data much faster."
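The Machine's memory fabric cannot be reproduced in a few lines, but the core idea of one memory that several processors read and update in place can be loosely illustrated on a single computer. The sketch below is an analogy only, not HPE's design: it uses Python's `multiprocessing.shared_memory` module (Python 3.8+) to open two handles on the same region, the way two processes would, so a model update made through one handle is visible through the other without any copy. The parameter count and variable names are hypothetical.

```python
from multiprocessing import shared_memory

N_PARAMS = 4
NBYTES = N_PARAMS * 8  # four float64 model parameters

# One region that every "processor" would attach to by name.
pool = shared_memory.SharedMemory(create=True, size=NBYTES)
# A second handle, opened the way another process would open it; nothing is copied.
learner = shared_memory.SharedMemory(name=pool.name)

# Slice first: some platforms round the segment up to a whole page.
model = pool.buf[:NBYTES].cast("d")      # reader's view of the parameters
update = learner.buf[:NBYTES].cast("d")  # learner's view of the same bytes
try:
    for i in range(N_PARAMS):
        model[i] = 0.0
    update[2] += 0.5          # the learner writes a new parameter value in place
    snapshot = list(model)    # the reader sees the change immediately
    print(snapshot)
finally:
    # Derived views must be released before the segments can be closed.
    model.release()
    update.release()
    learner.close()
    pool.close()
    pool.unlink()
```

In a real multi-process setup, `pool.name` would be passed to worker processes, which attach with `SharedMemory(name=...)` exactly as `learner` does here.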

The cars will be able to drive themselves

As an example of a good use of this architecture, Dr. Bergman cites pattern recognition in image processing. "Take classification: deciding whether or not there is a tumor on the skin. The whole field of deep learning is not futuristic; we use it every day, for example in Google image search and in driver-assistance systems. Before long, cars will be able to drive themselves based on this technology."

"Another advantage of the architecture is the graph representation of entities and the relationships between them," says Bergman. "Until now we have used tables, which are loaded from disk as a single block. In a graph you can jump from entity to entity along the connections without getting stuck when some data is missing. The catch is that a graph needs a lot of memory, and only this kind of huge increase will make it practical. All social networks work this way: Facebook and LinkedIn constantly examine your social graph and use it to tailor what you see. Google also makes a lot of money from analyzing your search graph."
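Jumping from entity to entity along the connections is what a graph traversal does. A minimal Python sketch, assuming an adjacency-set representation and a small hypothetical social graph, runs a breadth-first search to find people exactly two hops away, the kind of friend-of-a-friend query a social network might run. The function name and the data are illustrative.

```python
from collections import deque


def within_hops(graph: dict[str, set[str]], start: str, max_hops: int) -> dict[str, int]:
    """Breadth-first search: every entity reachable from `start` in at most
    `max_hops` edges, mapped to its distance in hops."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in graph.get(node, ()):  # entities with no entry are simply skipped
            if neighbour not in distance:
                distance[neighbour] = distance[node] + 1
                queue.append(neighbour)
    return distance


# Hypothetical social graph: each person maps to the people they are connected to.
social = {
    "dana": {"avi", "noa"},
    "avi": {"dana", "yossi"},
    "noa": {"dana", "yossi", "lior"},
    "yossi": {"avi", "noa"},
}
reach = within_hops(social, "dana", 2)
suggestions = sorted(person for person, hops in reach.items() if hops == 2)
print(suggestions)
```

Note that `lior` has no adjacency entry of his own, yet the traversal carries on without error, echoing the point about not failing when some data is absent.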

Finally, Bergman explained that information security and physical security will also benefit from this increase in memory. "The digital organization needs a new style of protection. A firewall and perimeter protection against outside threats are no longer enough. Users need to understand that in many cases hostile code gets in with the help of the organization's own employees. To detect suspicious patterns, thousands or tens of thousands of events are collected per day today; we will have to move to hundreds of millions. Hundreds of terabytes will let us handle 10 million events per second and keep the information in memory for weeks instead of days. The corporate network should also be represented as a graph, and it will be a large, constantly changing graph."
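A quick back-of-the-envelope calculation shows how tight that memory budget is. The sketch takes the 10 million events per second quoted above and assumes, purely for illustration, that the 300-terabyte pool mentioned earlier in the article (decimal terabytes) is devoted entirely to event storage, ignoring indexes and other overhead.

```python
EVENTS_PER_SECOND = 10_000_000   # rate quoted in the text
SECONDS_PER_DAY = 24 * 60 * 60
MEMORY_BYTES = 300 * 10**12      # assumption: 300 TB (decimal), all used for events


def bytes_per_event(days: int) -> float:
    """Average memory available per event if `days` of events stay in memory."""
    events = EVENTS_PER_SECOND * SECONDS_PER_DAY * days
    return MEMORY_BYTES / events


for days in (1, 7, 14):
    print(f"{days:>2} days: {bytes_per_event(days):,.1f} bytes per event")
```

Under these assumptions one day of events leaves about 347 bytes per event, and two weeks only about 25, which suggests that keeping weeks of data in memory at that rate would call for very compact event records.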

"In the era of the Internet of Things, we will not be able to bring all this enormous volume of information to the center or to the cloud, and we will have to handle it in a distributed manner, as the new architecture we are developing at HPE Labs makes possible," Bergman concluded.
