Where particle physics meets artificial intelligence

"We are looking at something comparable to the wreckage of a crashed plane, and trying to reconstruct the color of the pants worn by the passenger in seat A17," says Yonatan Shlomi, a PhD student in the group of Prof. Eilam Gross at the Weizmann Institute of Science. Except that the "plane" Shlomi is referring to is the LHC particle accelerator at the CERN research center, and the crash is actually a collision between particles.

The particle accelerator at CERN is no stranger to Prof. Gross. Between 2011 and 2013 he headed the group responsible for the search for the Higgs boson in the ATLAS detector, one of the two groups that announced the discovery of the particle in July 2012. The discovery of the particle, nicknamed the "God particle", resolved a decades-old puzzle in physics: how do particles acquire mass? According to the model proposed by Peter Higgs in the 1960s, this particle is part of the mechanism that gives them mass. But until 2012 the particle remained a purely theoretical prediction, despite many attempts to detect it. In the end, against all odds, the particle was found. The mass puzzle was solved, and in 2014 Prof. Gross returned from Switzerland. But what is left to do after you have taken part in solving one of the biggest puzzles in physics, and the effort has borne fruit?

The obvious way to advance in particle physics is to discover something that was not known before, a new particle, for example. After the discovery of the God particle, efforts at CERN concentrated on confirming other theoretical models, such as supersymmetry, according to which every boson has a fermionic superpartner (and every fermion a bosonic one). But since these efforts did not bear fruit, it became clear to Prof. Gross that the time had come to take a different path: making the tools of data analysis more accurate, in order to extract more from existing data and to make the search for new particles in current and future accelerators more efficient. For this purpose he founded a new research group at the institute, which aims to solve problems in particle physics using machine learning. Shlomi was the first student to join the group, and the task he took on was to improve the analysis of data from the ATLAS detector, which contains about 100 million components.

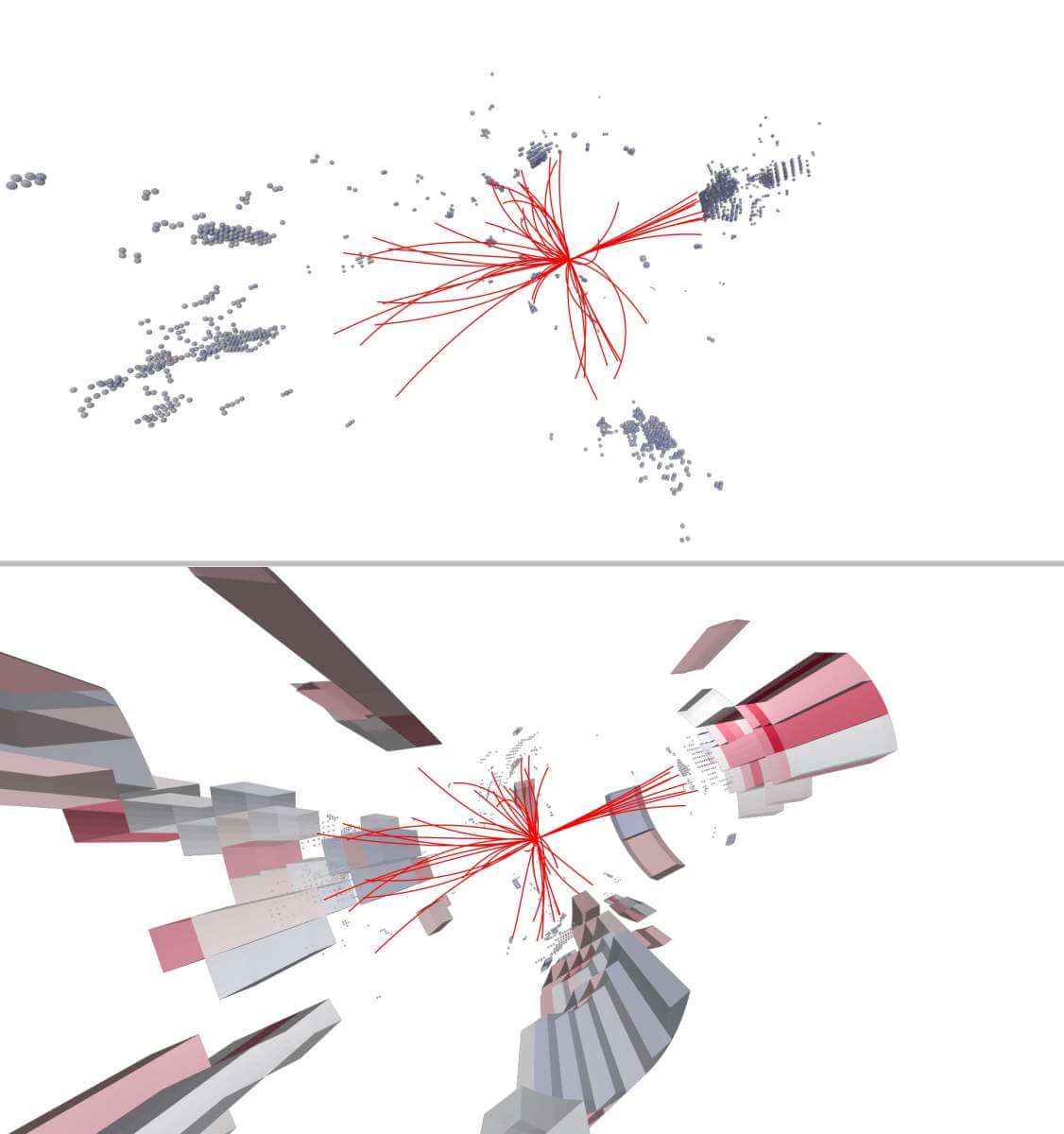

When particles collide inside the ATLAS detector, its components record energy measurements that scientists must decipher. Since the accelerator produces about a billion particle collisions every second while it is running, the problem has to be tackled from two directions. On the one hand, the sheer amount of data rules out manual decoding; on the other hand, because these are fast events on a microscopic scale, the detector's components, however numerous, cannot capture every event with a high level of accuracy.

The first task the research group chose was to improve the ability to distinguish between different types of quarks, fundamental particles that are produced in particle collisions. There are six types of quarks, each with a different mass, and the mass determines how likely the Higgs boson is to decay into that particular type of quark. For example, the Higgs decays into a pair of bottom quarks very often, because the bottom quark is heavy, while the "up" and "down" quarks are so light that Higgs decays into them are too rare to measure. In between sits the charm quark, which is neither too heavy nor too light, and identifying it and distinguishing it from a bottom quark is a serious challenge.
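The claim that mass sets the decay probability can be made concrete with the standard leading-order formula for the Higgs decay width into a quark-antiquark pair, which grows with the square of the quark mass. The sketch below evaluates that formula; the quark masses are rough illustrative values (an assumption), and a full calculation would use running masses and QCD corrections, which change the ratios noticeably.

```python
import math

G_F = 1.1663787e-5  # Fermi constant in GeV^-2
M_H = 125.0         # Higgs boson mass in GeV (approximate)
N_COLORS = 3        # each quark comes in three colors

# ASSUMPTION: rough illustrative quark masses in GeV. The top quark is left
# out because it is too heavy for the Higgs to decay into a top pair
# (beta below would take the square root of a negative number).
QUARK_MASSES = {"bottom": 4.18, "charm": 1.27, "down": 0.0047, "up": 0.0022}


def width_to_quarks(m_q: float, m_h: float = M_H) -> float:
    """Leading-order partial width Gamma(H -> q qbar) in GeV:
    N_c * G_F * m_H * m_q^2 * beta^3 / (4 * sqrt(2) * pi),
    with beta = sqrt(1 - 4 m_q^2 / m_H^2)."""
    beta = math.sqrt(1.0 - 4.0 * m_q**2 / m_h**2)
    return N_COLORS * G_F * m_h * m_q**2 * beta**3 / (4.0 * math.sqrt(2.0) * math.pi)


widths = {name: width_to_quarks(m) for name, m in QUARK_MASSES.items()}
for name, w in widths.items():
    # Express each width relative to the bottom-quark width.
    print(f"{name:>7}: {w / widths['bottom']:.2e} of the bottom-quark width")
```

At this crude level the charm width comes out at roughly a tenth of the bottom width, while the up and down widths are smaller by six to seven orders of magnitude, which is why those decays are out of reach.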

For this purpose, the research group developed a particle flow algorithm, which examines the particles' movement patterns and the distribution of their energy in space and time. By feeding the computer billions of examples from simulated particle collisions, the scientists showed that it can be taught to recognize and analyze the data. "We don't have the means to build more accurate detectors," says Prof. Gross, "but since we understand the physics behind the collisions, it is possible to produce high-resolution simulations of particle collisions and ask: how would the detector react if it had more components, and therefore the ability to measure the collisions more accurately?"
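The workflow of teaching a computer from simulated collisions can be illustrated with a much simpler stand-in. The sketch below is not the group's particle flow algorithm: it is a plain logistic-regression classifier written with NumPy, trained on a toy "simulation" in which each jet is described by two hypothetical features (a vertex mass and a decay-length significance) drawn from invented distributions. What it shares with the real approach is the procedure: generate labelled examples in simulation, fit a model to them, then check it on fresh simulated data.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def simulate_jets(n: int, flavour: str) -> np.ndarray:
    """Toy 'simulation' of n jets with two hypothetical features.
    ASSUMPTION: column 0 mimics a secondary-vertex mass (GeV), column 1 a
    decay-length significance; the means and widths are invented."""
    if flavour == "bottom":
        mass = rng.normal(3.0, 0.8, n)
        sig = rng.normal(8.0, 3.0, n)
    else:  # charm
        mass = rng.normal(1.5, 0.5, n)
        sig = rng.normal(5.0, 3.0, n)
    return np.column_stack([mass, sig])


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Labelled training set: in simulation the true flavour is known (1 = bottom).
n = 5000
X = np.vstack([simulate_jets(n, "bottom"), simulate_jets(n, "charm")])
y = np.concatenate([np.ones(n), np.zeros(n)])

# Standardize features so gradient descent converges smoothly.
mean, std = X.mean(axis=0), X.std(axis=0)
Xs = (X - mean) / std

# Logistic regression fitted by full-batch gradient descent on the log-loss.
w, b, lr = np.zeros(Xs.shape[1]), 0.0, 0.5
for _ in range(500):
    p = sigmoid(Xs @ w + b)
    w -= lr * Xs.T @ (p - y) / len(y)
    b -= lr * np.mean(p - y)


def predict_bottom_prob(jets: np.ndarray) -> np.ndarray:
    """Probability that each jet is a bottom jet, according to the toy model."""
    return sigmoid(((jets - mean) / std) @ w + b)


# Evaluate on an independently simulated test sample.
X_test = np.vstack([simulate_jets(2000, "bottom"), simulate_jets(2000, "charm")])
y_test = np.concatenate([np.ones(2000), np.zeros(2000)])
accuracy = np.mean((predict_bottom_prob(X_test) > 0.5) == y_test)
print(f"toy test accuracy: {accuracy:.3f}")
```

Logistic regression is chosen only because it fits in a few lines; the real task involves far richer inputs and models, and the accuracy of this toy says nothing about the performance of the group's algorithm.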

Now that Prof. Gross and his group have demonstrated the feasibility of the algorithm they developed, the next step will be to test it on a larger scale. "Currently the understanding is that in order to extract scientific value from the measurements made at the LHC, our sensitivity must be as high as possible," says Shlomi. "Precise simulations are the tool with which we can maximize sensitivity."
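Prof. Gross's question of how a detector with more components would respond can be posed in a toy model. In the sketch below, a one-dimensional strip of equal cells stands in for the detector, and the function names, particle positions and energies are all hypothetical. It deposits the energy of two nearby particles into the cells and counts clusters of hit cells, once with coarse cells and once with cells ten times finer.

```python
import numpy as np


def deposit_energy(positions, energies, n_cells, length=1.0):
    """Sum point-like energy deposits into n_cells equal cells along a
    toy 1D detector of the given length."""
    edges = np.linspace(0.0, length, n_cells + 1)
    cells, _ = np.histogram(positions, bins=edges, weights=energies)
    return cells


def count_clusters(cells, threshold=0.0):
    """Count runs of consecutive cells whose energy exceeds the threshold."""
    hit = cells > threshold
    # A cluster starts at a hit cell whose left neighbour is not hit.
    starts = hit & ~np.concatenate(([False], hit[:-1]))
    return int(starts.sum())


# Two hypothetical particles hitting the detector close to each other
# (positions in units of the detector length, energies in GeV).
positions = np.array([0.423, 0.468])
energies = np.array([10.0, 20.0])

for n_cells in (10, 100):
    cells = deposit_energy(positions, energies, n_cells)
    print(f"{n_cells:>3} cells: {count_clusters(cells)} cluster(s), "
          f"total energy {cells.sum():.1f} GeV")
```

With 10 cells both particles land in the same cell and appear as a single cluster; with 100 cells they fall into separate, non-adjacent cells and are counted as two. The total energy is the same in both cases, so only the finer simulated detector reveals that two particles were present.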

"I think the entire field of particle physics is moving toward artificial intelligence, to the point that no one will bother to mention that the work is aided by machine learning, because it will be self-evident, just part of the toolbox," says Prof. Gross. "We are not there yet, but when we get there, and it will be soon, the field will change its face, and it will be a revolution in data analysis in high-energy physics."
