Intel Labs has reported progress in integrating laser transmitters into ordinary chips. Itamar Levin, an Intel executive responsible for development, says this integration will save energy and improve processor performance, especially in applications such as artificial intelligence.

On June 27, 2022, Intel Labs announced significant progress in its integrated photonics research, specifically silicon photonics, which is seen as the next frontier in increasing communication bandwidth between computing silicon in data centers and across networks.

High-speed data transfer within data centers is a competitive arena. Nvidia keeps increasing the power of its GPUs and their interconnects: NVLink and NVSwitch, as well as its DIRECT technology, into which optical communication has also begun to be integrated.

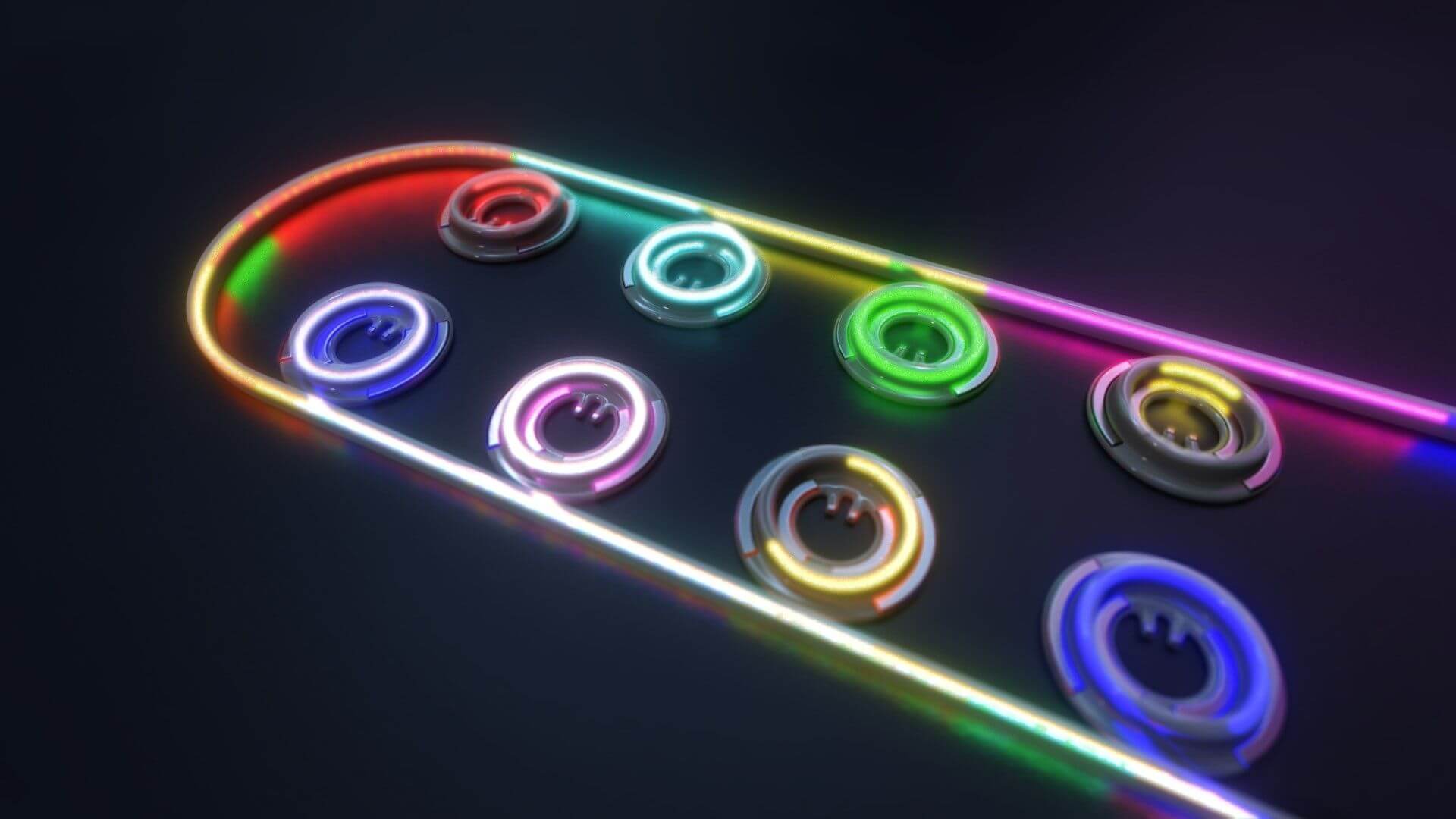

The development Intel unveiled, which is still at the laboratory stage, takes a somewhat different direction: integrating silicon photonics all the way down to the chip level, with the possibility of producing it on ordinary chips instead of relying on external technologies that are integrated into the final product.

Itamar Levin, an Intel Fellow in the chip design and development group, explains that the latest research includes industry advances in multi-wavelength integrated optics. This breakthrough will enable the optical source to deliver the performance required for future high-volume applications, such as co-packaged optics for emerging network-intensive workloads including AI and machine learning (ML), and will pave the way for high-volume manufacturing and wide deployment.
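Multi-wavelength optics matters because each wavelength of light can act as a separate data channel on the same fiber, so a link's capacity grows with the number of wavelengths it carries. The short Python sketch below illustrates that arithmetic. The wavelength count and per-wavelength rate are hypothetical values chosen for illustration, not figures from Intel's announcement.

```python
# Hypothetical illustration: aggregate bandwidth of a multi-wavelength (WDM) optical link.
# The wavelength count and per-wavelength rate are assumptions, not Intel's figures.

def aggregate_bandwidth_gbps(num_wavelengths: int, rate_per_wavelength_gbps: float) -> float:
    """Total capacity of one fiber when each wavelength carries an independent data stream."""
    if num_wavelengths < 1:
        raise ValueError("need at least one wavelength")
    if rate_per_wavelength_gbps <= 0:
        raise ValueError("per-wavelength rate must be positive")
    return num_wavelengths * rate_per_wavelength_gbps


if __name__ == "__main__":
    # Hypothetical example: 8 wavelengths, each carrying 100 Gb/s.
    total = aggregate_bandwidth_gbps(8, 100.0)
    print(f"Aggregate capacity: {total:.0f} Gb/s")
```

Doubling the number of wavelengths doubles the capacity of the link without adding fibers, which is why a uniform, manufacturable multi-wavelength light source is significant.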

Optical connections began to replace copper wires as early as the 1980s, thanks to the inherently high bandwidth of light transmitted through optical fibers compared with electrical pulses sent through metal wires. Since then, the technology has become more efficient as components have shrunk in size and cost, leading in recent years to breakthroughs in the use of optical connections for networking, typically in switches, data centers and other high-performance computing environments. However, because a large external light source is required, the revolution has not yet reached individual chips: signals that travel long distances over optical fiber are converted into electrical signals, which drastically reduces speed and greatly increases energy consumption.

"Silicon photonics is one of the most interesting technologies on the market for data transfer in the cloud and in supercomputers. Nvidia, Intel and other big players are competing in this field. Everyone wants to find the technological solution that will let data move at the speed of light, over optical fibers made mainly of glass of various compositions and structured to carry light inside the fiber with minimal loss of information."

According to Levin, "If you look at the big picture, communication in data centers is divided between two technologies. In the lower layers of the data center, close to the processors, the memory and the AI accelerators, communication runs over electrical cables at rates of up to 224 gigabits per second, and as you go up to higher layers of the data center, the communication becomes more and more optical.

A typical data center contains server racks. Inside each rack are drawers holding servers, which are connected to each other and to the switch at the top of the rack using Ethernet-based electrical communication. That top-of-rack switch is connected to similar switches in other racks in the same row using optical communication, and each row is connected to the hall's hierarchy, also using optical communication.

Why hasn't optics penetrated down into the servers themselves?

There are three reasons. The first is cost, and the cost stems from the second reason: most of the optical communication products in data centers today are complex. They are not produced with CMOS manufacturing technology on a single piece of silicon; instead you see many fibers, separate lasers and a lot of electronics. Fiber infrastructure has also been relatively expensive, and the deeper you go into the data center and the closer you get to the processors, the more links you need. In the lowest layer there can be millions of physical links. These difficulties have delayed bringing higher-bandwidth communication down to the processors.

The third reason is that this structure creates a bottleneck that hinders the continued growth of data centers and their capacity. For example, it is not suited to AI applications, where you want to build clusters of processors that work together and share common memory; these require moving large amounts of data between the processors themselves and between the processors and memory.

Ethernet networks were built for communication, but today they are becoming an integral part of computation in processor clusters, which is a new use. Recognizing this bottleneck is forcing Intel and other data center hardware and architecture vendors to rethink networking solutions.

And of course there is the issue of power. Each generation of data centers requires more power to move data from server to server. According to analysts' forecasts, this will account for 20-30% of total data center power consumption within five years, and the resulting heat must also be dissipated.

You need to invest more in DSP (digital signal processing) to overcome the communication problem, and the reach of each channel gets shorter because you are approaching the physical limits of copper wire. The cables themselves also become a constraint: the weight of the copper, and so on. And a data center can have tens of thousands of such racks.

Silicon photonics will solve all these problems. Silicon photonics is a technology for producing optical communication systems using chip design and chip manufacturing methods. The big promise is that we will be able to produce optical communication systems in a fab very similar to the ones that produce CMOS chips, which lets us manufacture in huge volumes and reduce costs."

"In addition, a new level of integration becomes possible. When you had to take optical components, lenses and so on and attach them to the chips, the level of integration was limited. With silicon photonics that barrier is removed: you can take the optical receiver and transmitter and place them as a chip next to the CPU, and in the future even fabricate them on the same piece of silicon as the CPU. The technology operates at very low power, and this integration lets us reduce latency in data centers. We will be able to work at rates a hundred and even a thousand times faster than today," Levin explains.
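Levin's points about power come down to a standard relation: the power a link draws equals its data rate multiplied by the energy spent per bit, and a data center multiplies that by the number of links. The Python sketch below applies that relation. The 224 Gb/s rate is the electrical figure Levin cites; the energy-per-bit values and the one-million link count are hypothetical assumptions for illustration, not measurements of any specific copper or optical product.

```python
# Hypothetical sketch: interconnect power from data rate and energy per bit.
# Energy-per-bit values and link count are illustrative assumptions only.

def link_power_watts(rate_gbps: float, energy_pj_per_bit: float) -> float:
    """Power (W) = bits per second x joules per bit.

    rate_gbps * 1e9 bit/s * energy_pj_per_bit * 1e-12 J/bit
    simplifies to rate_gbps * energy_pj_per_bit / 1000.
    """
    return rate_gbps * energy_pj_per_bit / 1000.0


def fleet_power_kilowatts(num_links: int, rate_gbps: float, energy_pj_per_bit: float) -> float:
    """Total power (kW) for many identical links running at full rate."""
    return num_links * link_power_watts(rate_gbps, energy_pj_per_bit) / 1000.0


if __name__ == "__main__":
    links = 1_000_000  # hypothetical link count in the lowest data center layer
    rate = 224.0       # Gb/s per link, the electrical rate cited in the article
    for label, pj in [("higher-energy link (assumed 10 pJ/bit)", 10.0),
                      ("lower-energy link (assumed 2 pJ/bit)", 2.0)]:
        print(f"{label}: {link_power_watts(rate, pj):.2f} W per link, "
              f"{fleet_power_kilowatts(links, rate, pj):.0f} kW in total")
```

The sketch treats energy per bit as the only input that differs between technologies, which is a common way to compare interconnect efficiency; real links also have fixed overheads, such as idle power and cooling, that it ignores.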

More on this topic on Hayadan:

- "Soon the connection between neighboring chips in the data center will be optical"

- IBM sets a goal: a practical quantum computer with hundreds of thousands of qubits within a few years

- CEO Ramon Spies: Our processors are the heart of Levini Edlis-Samson

- A new way to store information using DNA has been developed